But given the questions I get from readers and friends alike, it seems there is still some mystery surrounding the latest formats. Many users don’t know just what’s required to add Ultra HD to their home entertainment systems. Today I’ll try to clear up a few things so you’ll know whether or not to make the investment.

(Image copyright LG)

Video display technology is always surrounded by confusing jargon. Manufacturers all offer similar products, so they attempt to separate themselves from the competition by using unique terms to define features. A great example of this is “LED TV”. It suggests that the display’s pixels are individual LEDs, which we know is not the case. LED simply refers to the backlight. The panel is still a liquid crystal display that has changed little since its inception.

We’re seeing the same phenomenon today with the words “4K” and “Ultra HD”. Are they the same thing? Strictly speaking, they aren’t. True 4K has a horizontal resolution of 4096 pixels. Most display manufacturers misuse the term by calling their products “4K Ultra HD” when they have a resolution of 3840×2160, which is more accurately known as “Ultra HD.” The term 4K is appropriate when describing the Digital Cinema Initiatives (DCI) standard and its 4096-pixel width.

For today’s purposes, we’ll be talking about consumer displays, both televisions and projectors, that offer a resolution of 3840×2160. I’ll refer to that standard as Ultra HD.

My first viewing of an Ultra HD display was at the CEDIA Expo in 2012. Sony was showing a spectacular 85-inch television with a native resolution of 3840×2160 and a staggering price tag of $25,000. The native content being shown was classic demo material with lots of slow pans, bright saturated color and detailed shots of faces, flowers, cityscapes and the like.


The first question on everyone’s lips was, “Will it accept a native-resolution signal?” This harkens back to the first 1080p TVs, which couldn’t accept signals at full resolution. The answer from Sony was “yes, at a 30Hz refresh rate.” That limitation wasn’t created by the manufacturer; it came from the interface. HDMI 1.4 lacks the bandwidth for Ultra HD at 60Hz, and the HDMI 2.0 standard that adds it was still in development in 2012, so no shipping products had the new interface.

Today it’s still not a universal feature. Most Ultra HD TVs have only a single HDMI 2.0 input; the rest are still 1.4a. To accept an Ultra HD signal at 60Hz, you’ll need 2.0. Otherwise you’re limited to 30Hz. Is this a big deal? Well, actually, no. Film content is still mastered at 24fps, so even the latest Ultra HD Blu-rays should work fine over an HDMI 1.4 connection, right? The answer is not that clear, so we’ll have to dive into the details.
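To see why the refresh rate matters, here is a rough bandwidth check written as a Python sketch. It assumes 8-bit RGB (4:4:4) video, the standard CTA-861 raster totals for 3840×2160 (active pixels plus blanking), and HDMI’s TMDS encoding, which sends 10 bits for every 8-bit value over three data channels. The 340 MHz and 600 MHz ceilings are the maximum TMDS clocks for HDMI 1.4 and 2.0 (10.2 and 18 Gbps). Real signals can also use 4:2:0 or deeper color, which changes the numbers.

```python
# Rough check: does a 3840x2160 signal fit within a given HDMI version?
# Assumptions: 8-bit RGB/4:4:4, CTA-861 raster totals (including blanking),
# and TMDS encoding of 10 bits per 8-bit value on 3 data channels.

# Total raster (width x height, blanking included) for each 3840x2160 timing.
RASTER_TOTALS = {
    24: (5500, 2250),
    30: (4400, 2250),
    60: (4400, 2250),
}

# Maximum TMDS clock in MHz for each HDMI version.
MAX_TMDS_MHZ = {"1.4": 340, "2.0": 600}


def pixel_clock_mhz(fps: int) -> float:
    """Pixel clock needed for 3840x2160 at the given frame rate."""
    h_total, v_total = RASTER_TOTALS[fps]
    return h_total * v_total * fps / 1e6


def link_gbps(fps: int) -> float:
    """Raw TMDS data rate: 3 channels x 10 bits per pixel clock."""
    return pixel_clock_mhz(fps) * 3 * 10 / 1000


def fits(fps: int, hdmi_version: str) -> bool:
    """True if the required pixel clock is within the version's TMDS limit."""
    return pixel_clock_mhz(fps) <= MAX_TMDS_MHZ[hdmi_version]


for fps in (24, 30, 60):
    print(f"{fps}Hz: {pixel_clock_mhz(fps):.0f} MHz, {link_gbps(fps):.2f} Gbps, "
          f"HDMI 1.4: {fits(fps, '1.4')}, HDMI 2.0: {fits(fps, '2.0')}")
```

Under these assumptions, 24Hz and 30Hz both need a 297 MHz pixel clock, which fits within HDMI 1.4, while 60Hz needs 594 MHz (about 17.8 Gbps), which only HDMI 2.0 can carry.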

(Image copyright VIZIO)

Ultra HD is invading the display market much like 3D did a few years ago. The first couple of years saw only premium models selling for high prices. But now it has become a commodity. In fact I just finished up a review of an excellent VIZIO 65-inch D-series panel that sells for only $1000.

So it’s a pretty good bet that before long, the only way to buy a 1080p display will be to either go small (under 40 inches) or to buy at the absolute bottom price point of a product line. You’ll have it whether you want it or not.


But those reading this article are likely to be a little more discriminating. You don’t want to buy your display based on information from the sales dude at Costco. So if you’re shopping for a new television and Ultra HD is at or near the top of your wishlist, there are a couple of things you should look for.

First and foremost is HDCP (High-bandwidth Digital Content Protection) version 2.2. This is an absolute must. Do not, I repeat, DO NOT, buy a display without it. If you do, the only way to get native Ultra HD content into it will be through streaming. And that’s likely to be limited to the TV’s built-in interface. External devices capable of producing an Ultra HD signal will be looking for HDCP 2.2 and if they don’t find it, surprise! Your content will be down-rezzed to 1080p. Yes, the panel will upscale to its native resolution. But if you’re going to invest in a new TV, it should accept Ultra HD signals from any compatible source.

The nice thing about ensuring HDCP 2.2 compatibility is that it goes hand-in-hand with HDMI 2.0, the interface needed for an Ultra HD signal at 60Hz. As I stated earlier, HDMI 1.4 only supports Ultra HD at up to 30Hz. That’s fine for film content, of course, but an Ultra HD Blu-ray player will only send an Ultra HD signal to (you guessed it) a display with HDCP 2.2.

So in summary, look for HDCP 2.2 and HDMI 2.0 in your new Ultra HD TV. Anything else is obsolete already.

As of this writing, I have seen only one line of consumer-level projectors that supports Ultra HD natively, and that’s the ES series from Sony. Sony developed its own three-chip SXRD design with a maximum resolution of 4096×2160 pixels, the full DCI 4K resolution. Being a reflective imaging technology, SXRD has the deep contrast one would expect from an LCoS variant. It won’t quite produce the super-deep blacks of the latest JVC models, but JVC doesn’t have true 4K either.

The least-expensive Sony 4K model is the VPL-VW350ES at $7999. It includes HDMI 2.0 with HDCP 2.2 for full compatibility with current and upcoming Ultra HD Blu-ray players, as well as Sony’s own FMP-X10 media server, which also supports Ultra HD. Note that firmware updates have just been released for the VPL-VW365ES ($9999) and VPL-VW665ES ($14,999) that add HDR compatibility for Ultra HD Blu-ray discs. So if you have the budget, these displays will offer the most current tech.

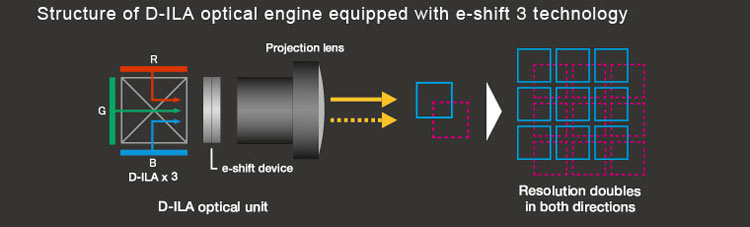

There are two other companies marketing home theater projectors that support Ultra HD, but their imaging chips are only 1080p native. I’m talking about the Epson LS10000 laser model and JVC’s line of D-ILA projectors. Both use a pixel-shift method to create the illusion of higher resolution without actually possessing the extra pixels. Here’s how it works.

First off, both brands’ projectors run at a 120Hz refresh rate. That’s important because it allows each frame to be displayed twice. The image below comes from JVC. Their tech is called e-shift.

At left are the three imaging chips: red, green and blue. They pass light through the e-shift device, a computer-controlled refractor that can shift the image by half a pixel diagonally. So each frame is first displayed natively, then shifted as shown by the graphic at the far right. Our eyes’ persistence of vision stitches the two images together into a virtual Ultra HD image. Thanks to some fancy video processing, this method does a decent job of increasing perceived resolution, mainly by eliminating the pixel gap.
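The geometry can be illustrated with a small Python sketch. It is a simplified model, not JVC’s or Epson’s actual processing: it assumes 1920×1080 chips, two sub-frames per 60Hz input frame on a 120Hz panel, and a second sub-frame offset by exactly half a pixel horizontally and vertically. Positions are counted on a half-pixel grid, and the `unique_positions` helper is hypothetical.

```python
# Simplified geometry of a half-pixel diagonal shift (e-shift style).
# Assumptions: 1920x1080 chips, two sub-frames per 60Hz input frame on a 120Hz
# panel, and an exact half-pixel offset both horizontally and vertically.
# Coordinates are measured in half-pixel units.


def unique_positions(width: int, height: int) -> int:
    """Count distinct pixel centres covered by the two sub-frames."""
    frame_a = {(2 * x, 2 * y) for x in range(width) for y in range(height)}          # native
    frame_b = {(2 * x + 1, 2 * y + 1) for x in range(width) for y in range(height)}  # shifted
    return len(frame_a | frame_b)


# Small grid check: the sub-frames never overlap, so positions double (not quadruple).
small_shifted = unique_positions(4, 3)   # 24
small_true_2x = (2 * 4) * (2 * 3)        # 48 on a genuine double-resolution grid

# Scaled up to full size (computed arithmetically to avoid building huge sets).
shifted_total = 2 * 1920 * 1080          # 4,147,200 positions
native_uhd = 3840 * 2160                 # 8,294,400 pixels
subframe_ms = 1000 / 120                 # each sub-frame is shown for ~8.3 ms

print(small_shifted, small_true_2x, shifted_total, native_uhd, round(subframe_ms, 1))
```

In this model the two sub-frames never land on the same spot, so the number of distinct pixel positions doubles to about 4.1 million, half the count of a native 3840×2160 panel. That is consistent with calling the result an illusion of Ultra HD rather than the real thing.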

Epson’s method is called 4K Enhancement, and it works essentially the same way. Both companies’ products accept Ultra HD signals and support HDMI 2.0 with HDCP 2.2.

Speaking of Epson, the LS10000 is unique in another way: it includes a laser light engine. Is this something that will improve image quality? I reviewed this fine projector recently (https://hometheaterhifi.com/reviews/video-display/projectors/epson-pro-cinema-ls10000-laser-projector-review/) and found it to have one of the most stunning images I’ve seen to date. However, in my opinion, the lasers do not make the image sharper or more detailed, at least not in the way a high-quality lens does. The LS10000 looks great for a number of reasons, the main one being its high contrast. Epson is using a reflective chipset similar to the LCoS tech found in Sony and JVC projectors; the only difference is the use of a quartz substrate rather than silicon. In my opinion this is the principal reason for its fantastic image. Lasers enable a lot of great things, like a long service life, accurate color, and wider gamuts, but I do not believe they are required for good image fidelity. A UHP lamp combined with other high-end components and optics can produce a picture of equal quality.

So we’ve established that an Ultra HD display should, without a doubt, have HDMI 2.0 and HDCP 2.2 if you plan to make the full transition to this new format. Beyond that, TV and projector buyers have no other major format-specific factors to weigh. All the other tenets of imaging science still apply: the best displays will have high contrast, accurate and saturated color, and high resolution. Simple. So let’s talk about sources.

When Ultra HD first appeared in 2012, the only available sources were streamed. Right off the bat, the technology hobbled itself by depending on highly compressed content. There is far more to image quality than simply increasing resolution. A 3840×2160 frame holds about 8.3 megapixels, so the bandwidth requirement is substantial, and the only way to move that over the Internet is with a lot of compression. Those extra pixels don’t mean much when they’re asked to display nasty color banding and block artifacts.
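To put “a lot of compression” in numbers, here is a back-of-the-envelope Python sketch. It assumes 8-bit 4:2:0 video (12 bits per pixel on average), 24fps film content, and a hypothetical 25 Mbps stream; actual service bitrates vary, so treat `STREAM_MBPS` as a placeholder.

```python
# Rough compression ratio for streamed Ultra HD film content.
# Assumptions: 8-bit 4:2:0 video (12 bits per pixel on average), 24fps,
# and a hypothetical 25 Mbps stream; actual service bitrates vary.

WIDTH, HEIGHT = 3840, 2160
BITS_PER_PIXEL = 12      # 8 bits of luma + 4 bits of shared, quarter-resolution chroma
FPS = 24
STREAM_MBPS = 25         # hypothetical, not a measured or quoted figure

megapixels = WIDTH * HEIGHT / 1e6


def uncompressed_mbps(width: int, height: int, bpp: int, fps: float) -> float:
    """Raw video bitrate in megabits per second."""
    return width * height * bpp * fps / 1e6


raw_mbps = uncompressed_mbps(WIDTH, HEIGHT, BITS_PER_PIXEL, FPS)
ratio = raw_mbps / STREAM_MBPS
print(f"{megapixels:.1f} MP per frame, {raw_mbps:.0f} Mbps raw, ~{ratio:.0f}:1 compression")
```

With these assumptions the raw signal works out to roughly 2.4 Gbps, so a 25 Mbps stream would need compression on the order of 95:1.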

Buyers of early Ultra HD TVs from Sony could also purchase a media server with an internal hard drive. It provided a solution in the form of downloadable content that was much closer to Blu-ray quality. Of course even with a fast Internet connection you’d have to transfer your movies in advance. When I reviewed the XBR-65X900B, I also had an FMP-X10 on hand. It took about two hours to download a 45-minute documentary on air racing. The footage looked amazing and was completely demo-worthy in every way.
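The two-hour download for a 45-minute program says something about bitrate. If the file downloaded at a steady average speed, its size equals link speed × download time and also average bitrate × running time, so the content’s bitrate was about 120/45 ≈ 2.7 times the connection’s throughput. That is why it couldn’t be streamed live at that quality. The actual connection speed and file size aren’t given, so the 25 Mbps link in this Python sketch is purely hypothetical.

```python
# What a download-to-runtime ratio implies about a title's bitrate.
# Assumption: the download ran at a steady average speed, so
#   file size = link speed x download time = bitrate x running time.
# The 45-minute and 2-hour figures come from the text; the link speed is hypothetical.

RUNTIME_MIN = 45
DOWNLOAD_MIN = 120
HYPOTHETICAL_LINK_MBPS = 25


def bitrate_multiple(download_min: float, runtime_min: float) -> float:
    """How many times the link speed the content's average bitrate is."""
    return download_min / runtime_min


def implied_bitrate_mbps(link_mbps: float, download_min: float, runtime_min: float) -> float:
    """Average content bitrate implied by a given link speed."""
    return link_mbps * bitrate_multiple(download_min, runtime_min)


multiple = bitrate_multiple(DOWNLOAD_MIN, RUNTIME_MIN)
bitrate = implied_bitrate_mbps(HYPOTHETICAL_LINK_MBPS, DOWNLOAD_MIN, RUNTIME_MIN)
print(f"bitrate is ~{multiple:.2f}x the link speed, ~{bitrate:.0f} Mbps on a 25 Mbps link")
```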

Today’s Ultra HD TVs almost universally include a streaming interface built right into the set. This is a great way to enjoy lots of content from Netflix, Amazon, Hulu and other providers. I’ve sampled some Ultra HD shows from Amazon and found them to vary in quality. Clips I watched from Bosch and The Man in the High Castle looked amazing, while the BBC show Orphan Black didn’t fare as well. So it’s pretty safe to say that streamed Ultra HD content is going to be a crapshoot for now.

So what is a videophile to do? Just as when 1080p hit the streets, the best solution always comes on a shiny disc.

We’ve seen announcements and previews of Ultra HD Blu-ray players for a while now, but as of this writing the only one actually available for sale is Samsung’s UBD-K8500 at $400. It supports HDMI 2.0 and HDCP 2.2, so it should work with a similarly equipped display. It will upscale 1080p Blu-ray content to Ultra HD as well as play the latest UHD discs, which are also just starting to appear. It also supports HDR10, the HDR standard used on those discs. It isn’t compatible with Dolby Vision, so we’ll have to wait and see if that becomes an issue.

In April, Panasonic shipped its DMP-UB900 to consumers in the UK. Hopefully it will be available over here by the time you read this. It also brings HDR capability to the mix. There’s no way to know where the HDR specs will finally land, so buying an HDR-capable player now may be a gamble.

Other manufacturers are only teasing products right now though I have it on good authority that Oppo will have a unit available before long. For those thinking about UHD Blu-ray I’ll repeat my advice from above. Make sure it has HDCP 2.2!

Of course, when one spends $400 or more on a new disc player, one hopes that there will be lots of shiny discs to go with it. Well, they are coming out a little at a time, just like the first 1080p Blu-rays in 2006. And surprisingly, they don’t sell for a huge premium. The UHD version of Deadpool is only five bucks more on Amazon than the 1080p copy.

And what of the fabled Redray player? When I went to CEDIA in 2012 and 2013, all the Ultra HD demos were streamed from one of these high-end boxes. It contains a 1TB hard drive and an Ethernet connection for downloads. Its video quality is pretty much unparalleled, but there is a catch: it doesn’t support HDMI 2.0. Even though well-heeled users can add one to their rack for about $1500, it won’t be able to play the latest content, and it won’t support HDR either.

There is another issue people should be aware of before getting too excited over Ultra HD. How are the Hollywood movies we all consume actually filmed? Some of us remember when the Star Wars movies were first released on Blu-ray. Episodes II and III (2002 and 2005) were among the first major productions shot entirely with digital cameras, and only Episode III was captured at 1920×1080 resolution. While this is fine for the Blu-ray we all know and love today, how will it translate to an Ultra HD re-master?

The newest chapter, The Force Awakens, was shot on a combination of digital and film cameras, then transferred to a 4K master. Yet the CGI effects were rendered in 2K and upscaled. Will this affect image quality? I certainly didn’t notice a problem when watching it in IMAX digital, so it’s hard to imagine an issue when viewing the Ultra HD Blu-ray (when it’s released) on a much smaller screen.

Many movies today are shot entirely on digital cameras or partly on film. Transferring film is pretty easy to do at either 4K or 8K resolution. But some digital cameras, like the Arri Alexa, shoot at only 2.8K or 3.2K. At some point in post-production, the image may be upscaled to 4K to create a final print for film-equipped theaters or copied to a hard disk for digital presentations.

Another issue faced by filmmakers is practical resolution. Even though a camera may have a 4K image sensor, its effective resolution can be as little as 70 percent of that. This is due to things like the optical low-pass filter, which reduces resolution before the image is even recorded. According to one industry insider I spoke with, upcoming cameras with 8K sensors will be the first to deliver true 4K footage.
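The arithmetic behind the insider’s claim can be sketched in a few lines of Python. This assumes the worst-case 70 percent figure applies to every camera, measures resolution by horizontal width only, and takes an “8K” sensor as 8192 pixels wide (some are 7680); all three are simplifying assumptions.

```python
# Worked example of the "effective resolution" point, assuming the text's
# worst-case figure (70 percent of sensor width) applies to every camera.
# The 8192-pixel "8K" sensor width is an assumption; some 8K sensors are 7680 wide.

EFFECTIVE_FRACTION = 0.70
DCI_4K_WIDTH = 4096


def effective_width(sensor_width_px: int, fraction: float = EFFECTIVE_FRACTION) -> float:
    """Horizontal detail actually delivered by a sensor of the given width."""
    return sensor_width_px * fraction


def sensor_needed_for(target_width_px: int, fraction: float = EFFECTIVE_FRACTION) -> float:
    """Sensor width required to deliver the target width after the losses."""
    return target_width_px / fraction


for sensor in (4096, 8192):
    eff = effective_width(sensor)
    print(f"{sensor}-px sensor -> ~{eff:.0f} px effective, true 4K: {eff >= DCI_4K_WIDTH}")
print(f"Sensor width needed for true 4K: ~{sensor_needed_for(DCI_4K_WIDTH):.0f} px")
```

Under this assumption a 4K sensor delivers closer to 2.9K of real detail, and the sensor needs to be roughly 5,850 pixels wide to resolve a full 4096 pixels, which an 8K sensor clears comfortably.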

As for the aforementioned CGI effects, 2K is the norm for pretty much every studio today. The processing power required even at this resolution is quite high, so imagine how much hardware one would need to create 4K renderings, which have four times as many pixels. When a system has to produce thousands of film frames individually, we’re talking about potential production times in days rather than hours. The only film I’m aware of with 4K-rendered effects is Disney’s Tomorrowland.
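A back-of-the-envelope Python sketch shows how quickly the numbers grow. Every input here is hypothetical: it assumes render time scales linearly with pixel count, that one 2K frame takes an hour on a single render node, and that a studio has 100 nodes. Only the 4× pixel ratio between DCI 2K (2048×1080) and DCI 4K (4096×2160) is fixed.

```python
# Back-of-the-envelope CGI render workload, 2K vs 4K.
# Assumptions (all hypothetical): render time scales linearly with pixel count,
# a 2K frame takes 1 hour on one render node, and the studio has 100 nodes.

PIXELS_2K = 2048 * 1080   # DCI 2K
PIXELS_4K = 4096 * 2160   # DCI 4K
SCALE = PIXELS_4K / PIXELS_2K  # 4.0: twice the width and twice the height


def frame_count(runtime_min: float, fps: int = 24) -> int:
    """Number of film frames in a sequence of the given length."""
    return round(runtime_min * 60 * fps)


def render_days(frames: int, hours_per_frame: float, nodes: int) -> float:
    """Wall-clock days with frames spread evenly across render nodes."""
    return frames * hours_per_frame / nodes / 24


frames = frame_count(10)             # a hypothetical 10-minute effects sequence
days_2k = render_days(frames, 1.0, 100)
days_4k = render_days(frames, 1.0 * SCALE, 100)
print(frames, SCALE, days_2k, days_4k)
```

With these made-up inputs, a 10-minute sequence of 14,400 frames takes about 6 days at 2K and about 24 days at 4K.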

For those interested in the future of Ultra HD Blu-ray, keep an eye on Sony Studios. They are the only company that currently masters everything in 4K with HDR. Ultimately, it’s HDR that will separate the best from the rest.

So many products and technologies are marketed as “future-proof.” Many of you realize by now that this is untrue. You will never be able to purchase a technology that doesn’t become obsolete at some point in time. That being said, there are some choices available that will stave off the need for upgrades for at least a few years.

I’ve already mentioned this, but it bears repeating: HDMI 2.0/HDCP 2.2. These standards are paramount to any purchase of an Ultra HD display, source or any device you plan to install in the signal path, including soundbars, receivers and the like. It is quite possible that you’ll have to replace your entire AV system to fully accommodate Ultra HD. But if you’re willing to accept the compression that comes with streaming, you can enjoy a fair amount of content right now by simply purchasing an Ultra HD TV with a good user interface.
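The “weakest link” rule can be modeled in a short Python sketch. This is a deliberately simplified, hypothetical model, not the real HDCP handshake: it assumes each device advertises a single HDCP version and that the source falls back to 1080p whenever any hop lacks HDCP 2.2, as described earlier. The device names and the `negotiated_output` helper are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass

# Simplified, hypothetical model of an HDCP signal chain. It is NOT the real
# HDCP handshake; it only captures the rule described in the text: protected
# Ultra HD needs HDCP 2.2 on every hop, otherwise the source falls back to 1080p.

REQUIRED_HDCP = (2, 2)


@dataclass
class Device:
    name: str
    hdcp: tuple[int, int]  # e.g. (2, 2) for HDCP 2.2, (1, 4) for HDCP 1.4


def negotiated_output(chain: list[Device]) -> tuple[str, str | None]:
    """Return the resolution the source would send and the device that limits it."""
    weakest = min(chain, key=lambda d: d.hdcp)
    if weakest.hdcp >= REQUIRED_HDCP:
        return "3840x2160", None
    return "1920x1080", weakest.name


# Hypothetical chain: UHD player -> older receiver -> new Ultra HD TV.
chain = [
    Device("Ultra HD Blu-ray player", (2, 2)),
    Device("AV receiver", (1, 4)),
    Device("Ultra HD TV", (2, 2)),
]
print(negotiated_output(chain))  # ('1920x1080', 'AV receiver')
```

In this model an older receiver in the middle is enough to drop the whole chain to 1080p, even with a new player and TV on either end.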

Once you move outside the display’s built-in software, things get a little more complicated. You can’t really get the wrong Ultra HD Blu-ray player right now because there’s only the single Samsung model. It’s too early to tell whether it will become a standard-bearer, but since it doesn’t include Dolby Vision support, it might be an early-adopter product.

If you don’t want to invest in shiny discs just yet, you can get downloadable content through Sony’s FMP-X10 media server. It has the necessary interface and an internal hard drive. So when you want the best possible quality, it’s only a multi-hour download away.

Receivers, soundbars and processors all seem to be coming out with the correct input and HDCP support as well. Just make sure to check those specs before pulling the trigger.

Projector fans have more limited choices. Only Sony sells a consumer line of native 4K/Ultra HD models. And since they lack any sort of built-in streaming, you’ll have to go with a new UHD Blu-ray player, media server or streaming box to explore their full potential.

The final question one should ask before making wholesale changes to their entertainment system is: will all this make my picture look better? After viewing countless demos of Ultra HD televisions and projectors, my answer is yes, a little. Regardless of screen size, you’ll see a greater difference between standard-definition 480i and 1080p than you will when going from 1080p to Ultra HD. I have spent a fair amount of time watching Ultra HD televisions for reviews, and though I can see the effect on a 65-inch screen, it’s not something I’m immediately inspired to buy. A 1080p display with good contrast, accurate color and a sharp image will create an equal sense of immersion and suspension of disbelief.
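Two quick calculations help explain why the second jump feels smaller, sketched here in Python under stated assumptions: 480i is counted as a full 720×480 frame (interlacing ignored), screens are 16:9, and the eye resolves about 1 arcminute, a common rule of thumb rather than a hard limit. The helper function names are hypothetical.

```python
import math

# Rough comparison of two upgrades, under stated assumptions:
#  - 480i is counted as a full 720x480 frame (interlacing ignored),
#  - screens are 16:9,
#  - the eye resolves about 1 arcminute (a common rule of thumb, not a hard limit).

SD = 720 * 480        # 345,600 pixels
HD = 1920 * 1080      # 2,073,600 pixels
UHD = 3840 * 2160     # 8,294,400 pixels


def screen_width_in(diagonal_in: float, aspect=(16, 9)) -> float:
    """Screen width from its diagonal and aspect ratio."""
    w, h = aspect
    return diagonal_in * w / math.hypot(w, h)


def pixel_visible_distance_ft(diagonal_in: float, horizontal_px: int,
                              acuity_arcmin: float = 1.0) -> float:
    """Farthest distance (feet) at which one pixel still spans the acuity limit."""
    pitch_in = screen_width_in(diagonal_in) / horizontal_px
    return pitch_in / math.tan(math.radians(acuity_arcmin / 60)) / 12


print(f"480 -> 1080p: {HD / SD:.0f}x the pixels")
print(f"1080p -> UHD: {UHD / HD:.0f}x the pixels")
for px in (1920, 3840):
    print(f"65-inch, {px} px wide: individual pixels resolvable within "
          f"{pixel_visible_distance_ft(65, px):.1f} ft")
```

The SD-to-1080p step multiplies the pixel count by 6, while 1080p to Ultra HD multiplies it by 4. By the 1-arcminute rule, a viewer farther than about 8.5 feet from a 65-inch screen can’t resolve individual 1080p pixels, and getting the full benefit of Ultra HD’s finer pixels means sitting within about 4.2 feet. Visual acuity varies from person to person, so treat these as rough guides.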

This axiom holds true for projectors as well. While I haven’t reviewed the Sony ES-series models, I’ve had both JVC and Epson examples in my theater that use pixel-shift technology to increase resolution. There is a visible difference, but again it’s a small one. I still hold that contrast is king, and if a projector came along that offered significantly more contrast than my six-year-old Anthem LTX-500, I’d buy it even if it were 1080p.

Through the course of this article I’ve only mentioned HDR in passing. High Dynamic Range has a far greater impact on image quality than any increase in pixel count will ever have. While Ultra HD is coming to all our homes eventually, the real focus should be on HDR. So I’ll wind this up with a simple recommendation: if you need a new display right now, by all means go for Ultra HD with HDMI 2.0 and HDCP 2.2. But if you aren’t in a hurry, let’s see what happens with HDR in the near term. It’s likely that every major display manufacturer will be announcing new models at CES in January 2017. That will hopefully solidify a standard for HDR that we can all bank on.

Ultra HD is here for sure, and you can enjoy it right now with a decent amount of native content. But the real upgrade will come when it’s combined with HDR. Now that will be an image worth investing in. The next year will likely include some exciting new products that take a significant leap in image fidelity. Ultra HD is just part of that leap. I’m truly excited to see what’s coming next!