Introduction

1080p, or 1080 progressive, is a very high resolution video format and screen specification. It is one of the ATSC HDTV specified formats, which include 720p, 1080i, and 1080p. If you are even casually interested in Home Theater, you have no doubt heard the term 1080p, and if so, you have most likely been misinformed about it. Common misconceptions include that there is no media to carry it, that you need an enormous screen to benefit from it, and that, on the whole, you just shouldn’t care about it. Why the industry has persisted in the charade is beyond the scope of this piece, but suffice it to say, if you don’t care about 1080p now, you will.

1080p is here, it is now, and has been for quite some time!

In order to understand 1080p, you first need a solid understanding of 1080i (1080 interlaced). Please bear with us, don’t cut to the chase, and keep on reading. Trust us, it’ll be worth it.

1080i vs. 1080p: It’s all a matter of time.

1080i is the highest resolution format of the HDTV ATSC specification as well as the recently launched HD DVD and Blu-ray media. 1080p is often quoted as being a higher resolution than 1080i, and though from a certain point of view (which we will touch on) that’s true, in the broad context it is not (1).

In a very real way, 1080i and 1080p are the same resolution, in that both consist of a 1920 x 1080 raster. That is, the picture is made up of 1080 separate horizontal ‘lines’, with 1920 samples per line (or pixels per line, depending on your point of view). In other words, both 1080i and 1080p represent an image with 1920 x 1080 unique points of data in space.

The difference between ‘i’ and ‘p’ can only be appreciated in the time domain.

In a “true” or “native” 1080i HDTV system, the temporal resolution is 60 Hz. The image is sampled, or updated if you prefer, every 1/60 of a second. As with any interlaced format though, only half the available lines are sampled, or updated, every 1/60 of a second. The capture device (say, a video camera) does not sample the entire 1920 x 1080 raster at one time. Rather, it samples fields. A single field consists of every other line of the complete picture. So we have the “odds” field, which has lines 1, 3, 5, 7, etc., and the “evens” field, which has lines 2, 4, 6, 8, etc.

So, in an interlaced system, the camera samples one field (say the “odds”), then 1/60 of a second later it samples the opposite field (the “evens”), then 1/60 of a second later it refreshes the odds, then 1/60 of a second later the evens, and so on. Each of the alternating fields of a 1080i source makes up half the image.
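To make the field structure concrete, here is a minimal Python sketch that splits the line numbers of one 1920 x 1080 raster into the “odds” and “evens” fields and lists the order in which a 1080i60 camera would sample them. The names (`lines`, `odds`, `evens`, `FIELD_RATE`) are ours, chosen for illustration; no real video data is involved.

```python
from fractions import Fraction

FIELD_RATE = 60                   # fields per second in 1080i60
lines = list(range(1, 1081))      # line numbers 1..1080 of one 1920 x 1080 raster
odds = lines[0::2]                # "odds" field: lines 1, 3, 5, ...
evens = lines[1::2]               # "evens" field: lines 2, 4, 6, ...

# Fields are sampled 1/60 s apart, alternating odds and evens.
for n in range(4):
    name, field = ("odds", odds) if n % 2 == 0 else ("evens", evens)
    print(f"t = {Fraction(n, FIELD_RATE)} s: {name} field, {len(field)} lines, {field[:3]} ...")
```

Each field carries 540 of the 1080 lines, and the two together account for every line exactly once.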

The shorthand for this format is 1080i60.

The subject being captured is updated every 1/60 of a second, but only half the lines are used for each update. This has one benefit and many drawbacks.

The one virtue of this format is its high subject refresh rate: Think of a sporting event where the ball is traveling fast. We get an update on its position every 1/60 of a second. That’s really good compared to film’s 24 Hz refresh rate (even IMAX HD is only 48 Hz).

The downside of an interlaced format is that the alternating fields only truly complement each other if the subject is stationary. If it is, then the alternating fields “sum” to form a complete and continuous 1920 x 1080 picture (everything lines up perfectly between the two fields). If the subject moves, though, it will be in one position for one field and another position for the next. The interlaced fields no longer complement one another, and artifacts such as jaggies, line twitter, and other visual aberrations are a normal side effect of the interlaced format.
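The combing problem can be reproduced with a toy picture. In this sketch (the function names and the moving-bar test pattern are hypothetical, for illustration only), the odds field is sampled at one instant and the evens field one field period later; weaving the two back together is perfect when the bar stands still, but produces alternating, offset lines when it moves.

```python
def scene(t, speed, height=6, width=10, start_x=2):
    """Toy picture: a one-pixel-wide vertical bar that moves `speed` pixels per field period."""
    x = start_x + speed * t
    return ["".join("#" if col == x else "." for col in range(width)) for _ in range(height)]


def weave(odds, evens):
    """Interleave two fields into one frame (odds on lines 1, 3, 5, ...)."""
    frame = [None] * (len(odds) + len(evens))
    frame[0::2], frame[1::2] = odds, evens
    return frame


def capture_interlaced(speed):
    odds = scene(0, speed)[0::2]    # odds field sampled at t = 0
    evens = scene(1, speed)[1::2]   # evens field sampled one field period (1/60 s) later
    return weave(odds, evens)


print(capture_interlaced(speed=0) == scene(0, 0))   # True: a static subject weaves perfectly
for row in capture_interlaced(speed=3):             # a moving subject: lines alternate between
    print(row)                                      # two positions, i.e. combing
```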

What does all this have to do with 1080p?

1080p differs from 1080i in that the entire 1920 x 1080 raster (all 1080 lines, top to bottom) is sampled and/or displayed at one time. No fields. Just full 1920 x 1080 frames. No combing. No line twitter. Just perfect pictures. But if our HDTV system does not incorporate 1080p, how does it become relevant at all?

We’re going to show you.

First we will explain how and why 1080i must be processed as well as possible into 1080p in order to maximize the potential of today’s digital displays, including LCD and plasma flat panel TVs, as well as LCD, DLP, and other projection systems.

Then we’ll explain how 1080p material is already here, and how many of you have some in your home right now without even knowing it!

(1) The TV term “high definition” goes back to the 1930s, when anything higher than 30 scanning lines qualified. So, even the ATSC specification of 720p and 1080i is somewhere around the fourth step into the better world of increasing amounts of video picture information. Commercial 3,840 x 2,160 projectors are already becoming available at the cineplex, and we will probably have such displays at home within three to five years, to watch TV programs and movies in “higher definition”.

Flat Panel HDTVs and the Caveat No One Wants to Talk About

As we’ve said, 1080i60 is the highest resolution format offered by today’s media. It’s fairly intuitive to think that simply displaying it as such will maximize the format’s potential. The trouble is, with the exception of dinosaur CRTs not yet cleared from inventories, you can’t buy a TV today which is capable of displaying it!

CRT (aka “tube”) TVs are the only display technology which actually “does interlaced”. It’s the only technology which can actually alternately refresh the odd and even lines of its face. In other words, CRTs are the only devices that can display a “raw” 1080i60 signal.

Well, CRT is dead! We (and countless others) heralded the death knell of CRT years ago amidst protest and anguish, but now there is no denying it: 2007 is the year CRTs disappear and flat panels take over . . . permanently.

This is extremely relevant. Flat panel TVs (LCD, Plasma) or any fixed pixel technology (such as DLP/LCD projectors etc) have a fixed display mode, their so-called “native resolution”. That is, they can only display the actual resolution of their panel (1024 x 768, 1366 x 768, and 1920 x 1080 being just a few examples). Everything else must be scaled and/or processed to that native format of the device.

More importantly, with the exception of some odd, early, and now discontinued plasma models, NO flat panel or fixed pixel display devices “do interlaced”. That is, although you can feed them an interlaced signal like 1080i60, one way or another it has to be converted, or “de-interlaced” into a progressive stream, and then scaled or mapped to the device’s native resolution, whatever that may be.

You have no choice. It’s either going to happen in the TV itself, or in the disc player, or in a processor in between, but make no mistake: if you are watching 1080i60 on anything other than a CRT, it’s being de-interlaced.

As with many things, there is a “right” and “wrong” way to do it.

The wrong way, which of course happens to be the cheap way from a processing and cost perspective, is to simply scale each 540-line field to the native resolution of the display. That means that whether your TV has 720 lines, 768 lines, 1024 lines, or is even one of the “Full HD” 1080-line models, if it de-interlaces this way, you are only seeing a picture which is 540 lines strong. Not what you paid for, is it?
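A quick sketch of the cheap approach, assuming simple line repetition as the scaler (an interpolating scaler would smooth things out, but it still has only 540 lines of real picture information to work with). Whatever the panel height, only 540 distinct source lines ever reach the screen. The helper `scale_field_lines` is a hypothetical name used for illustration.

```python
def scale_field_lines(field, out_lines):
    """The cheap method: each output line is a copy of the nearest field line."""
    n = len(field)
    return [field[y * n // out_lines] for y in range(out_lines)]


frame = [f"line {i}" for i in range(1, 1081)]   # stand-in for one 1080-line picture
odds = frame[0::2]                              # a single 540-line field

for panel in (720, 768, 1080):
    shown = scale_field_lines(odds, panel)
    print(panel, "panel lines,", len(set(shown)), "distinct picture lines")   # always 540

print(f"{1 - 540 / 768:.0%} of a 768-line panel's vertical resolution carries no new detail")
```

On a 768-line panel that works out to roughly 30 percent of the vertical resolution doing nothing but repeating lines.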

Remember we said that if the picture is not moving, fields sum together to form a complete 1920 x 1080 picture?

The right way to process 1080i is to de-interlace it to 1080p (regardless of the TV’s native resolution) using motion-adaptive de-interlacing. This process detects which areas of the picture are moving and which are not, then combines fields in the non-moving areas while interpolating the moving ones (filling in the spaces between the alternating lines with averaged, in-between values). If you have a 1080p display (one that actually displays 1080p without cropping and re-scaling), you’re done, because the result is a 1080p signal. If you have a TV of any other resolution, it’s then just a matter of scaling the 1080p signal to the device’s native resolution.

So even though you might only have a 720-line device, that device needs to be able to handle 1080p (at least internally, after de-interlacing) in order to maximize its potential when viewing a 1080i source.
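Here is a minimal sketch of motion-adaptive de-interlacing under simplifying assumptions: fields are lists of rows of numbers, motion is detected per pixel by comparing the current field with the previous field of the same parity, static pixels are woven in from the opposite field, and moving pixels are averaged from the lines above and below. The function name, the `threshold` parameter, and the 8 x 8 test scene are hypothetical; real processors use larger motion windows and far more sophisticated interpolation.

```python
def motion_adaptive_deinterlace(cur, prev_opp, prev_same, top_field, threshold=0):
    """Rebuild a full frame from the field `cur` (a list of rows of pixel values).

    prev_opp:  the previous field (opposite parity), supplies missing lines where static
    prev_same: the field before that (same parity as cur), used only to detect motion
    top_field: True if cur holds lines 1, 3, 5, ... (the "odds")
    """
    offset = 0 if top_field else 1             # frame row of cur's first line
    h, w = 2 * len(cur), len(cur[0])
    frame = [None] * h
    for i, row in enumerate(cur):
        frame[2 * i + offset] = list(row)
    for y in range(1 - offset, h, 2):          # the rows cur does not have
        ya = y - 1 if y > 0 else y + 1         # nearest cur row above (mirrored at edges)
        yb = y + 1 if y + 1 < h else y - 1     # nearest cur row below
        a, b = cur[(ya - offset) // 2], cur[(yb - offset) // 2]
        pa, pb = prev_same[(ya - offset) // 2], prev_same[(yb - offset) // 2]
        other = prev_opp[y // 2]
        frame[y] = [
            (a[x] + b[x]) / 2                  # moving: interpolate between cur's lines
            if abs(a[x] - pa[x]) > threshold or abs(b[x] - pb[x]) > threshold
            else other[x]                      # static: weave in the other field's line
            for x in range(w)
        ]
    return frame


# Hypothetical 8 x 8 scene: columns 0-3 are static detail, columns 4-7 change every field.
def truth(t):
    return [[y * 10 + x if x < 4 else 100 * t + y for x in range(8)] for y in range(8)]


out = motion_adaptive_deinterlace(truth(2)[0::2], truth(1)[1::2], truth(0)[0::2], top_field=True)
print(out[3])   # [30, 31, 32, 33, 203.0, 203.0, 203.0, 203.0]
```

The static half of row 3 comes back at full detail from the other field, while the moving half is interpolated rather than woven, so it never shows the stale, combed values.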

Bet the sales person didn’t mention that when he sold you that shiny new TV, did he?

Let’s look at some illustrations:

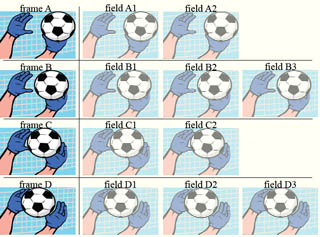

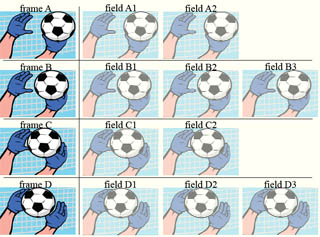

If this were a scene shot at 1080i and displayed at 1080i, it would look like this. But today’s digital TVs cannot do this. The signal must be de-interlaced.

If we de-interlace it the WRONG way, it would look like this. The entire scene is reduced to 540 lines’ worth of resolution. Hint: look at the hands. If you display this on a 1366 x 768 TV (a common resolution right now), you will be wasting 1/3 of the resolution you paid for!

If we de-interlace it the RIGHT way, though, to 1080p, it would look like this. Only the areas in motion are reduced in detail; the rest remains at the full 1080-line resolution. Though you need a full 1920 x 1080 TV to maximize the detail present, on a lesser TV, say a 1366 x 768 model, you will still realize the device’s full potential.

Still wonder if you should care about 1080p?

Native 1080p Material: A Hidden Reality

The amazing thing is that the whole 1080i vs. 1080p argument is more than just analogous to the 480i NTSC vs. 480p Progressive Scan DVD debate of yesteryear, so the groundwork for understanding it is already in place. The principles and math are all the same, only now at a higher resolution. If you understand why Progressive Scan DVD players exist at all, you should already be able to understand how and why 1080p not only exists, but is already ubiquitous.

We said that 1080p is the entire 1920 x 1080 raster sampled and/or displayed at one time. No fields. Just full, 1920 x 1080 frames. No jaggies. No line twitter. Just perfect pictures. The question is, at what temporal resolution? If it were captured with the same 60 Hz temporal resolution of 1080i60, it would indeed be well beyond the scope of today’s HDTV transmission system as well as the new HD disc formats.

1080p exists today as a 24 frame-per-second format. The shorthand for this format is 1080p24. But if there is no medium to carry 1080p24, why should we care? We care for the same reason we cared about 480p Progressive Scan DVD: because a p24 signal can be perfectly “folded” into an i60 carrier (2).
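Some rough arithmetic puts the three formats side by side. These are raw pixel counts per second, not compressed bitrates: 1080p24 needs fewer samples per second than the 1080i60 carrier provides, while a true 1080p60 stream would need twice as many. This is only a sanity check on capacity; how the frames are actually folded in is explained in what follows.

```python
W, H = 1920, 1080

p24 = W * H * 24          # 1080p24: 24 full frames per second
i60 = W * (H // 2) * 60   # 1080i60: 60 fields of 540 lines per second
p60 = W * H * 60          # 1080p60: 60 full frames per second

print(f"1080p24: {p24:,}  1080i60: {i60:,}  1080p60: {p60:,} pixels per second")
# 1080p24: 49,766,400  1080i60: 62,208,000  1080p60: 124,416,000 pixels per second
```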

Most of the HDTV material you could tune into tonight falls into one of two categories: either the material was shot with a digital camera at 1080p24, or it was shot on 35mm film and transferred to this very same 1080p24 digital format. With the exception of some sports and some other “live” shows, everything from sitcoms to dramas, and of course all movies, fall into this 1080p24 realm.

So how do we get our hands on this 1080p24 if the TV signals and discs are all 1080i60?

To find out, we need to understand the transfer of 1080p24 to 1080i60 (which, incidentally, follows the exact same principle used for decades to convert 24fps movies to yesteryear’s i60 NTSC TV system).

Let’s consider a sequence of 4 frames. The first frame of the p24 source gets “cut” into two fields, the odds and the evens again. Each field contains exactly half of the original frame. But we can’t carry on like that, because we’d end up with 48 fields every second, not 60. For this reason we simply “double up” on one field every other frame.

In other words, the second frame of our sequence is still cut into two fields, but we repeat its first field. The third frame is cut into two fields, as is the fourth, and for the fourth we again repeat its first field. So we end up with a 2-3 pattern in the fields.

The photo below illustrates 2-3 telecine.

Field B3 is a duplicate of B1, and D3 is a duplicate of D1.

So we get 10 fields from 4 original frames, or 60 fields from 24 frames every second.
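The 2-3 cadence is easy to express in code. This sketch labels fields the same way as the description above (B3 repeats B1, D3 repeats D1); the function name `pulldown` and its `cadence` parameter are our own. Passing a 2-2 cadence instead gives the 48 fields per second of the Progressive Split Frame variant mentioned in note (2).

```python
def pulldown(frames, cadence=(2, 3)):
    """Label the fields cut from each frame; frame n yields cadence[n % len(cadence)] fields.

    In a 2-3 cadence, the third field of every other frame (B3, D3, ...) is a repeat
    of that frame's first field.
    """
    fields = []
    for n, frame in enumerate(frames):
        for k in range(cadence[n % len(cadence)]):
            fields.append(f"{frame}{k + 1}")
    return fields


print(pulldown("ABCD"))   # ['A1', 'A2', 'B1', 'B2', 'B3', 'C1', 'C2', 'D1', 'D2', 'D3']
print(len(pulldown(range(24))), len(pulldown(range(24), cadence=(2, 2))))   # 60 48
```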

To “reconstitute” the 1080p24 source, it is a relatively simple matter of weaving together the fields which came from each frame (and discarding the redundant ones).
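Reverse 2-3 can be sketched the same way. Here we assume a simple detection rule: a field identical to the field two positions earlier (same parity) is a repeat and is discarded, and the remaining fields are woven in pairs. The helper names are hypothetical, and this rule would be fooled by a truly static scene, which is one reason real cadence detectors are more elaborate. The `telecine` helper only exists to build test input, with field parity alternating strictly.

```python
def split_fields(frame):
    """Return (top, bottom) fields: lines 1, 3, 5, ... and lines 2, 4, 6, ..."""
    return tuple(frame[0::2]), tuple(frame[1::2])


def telecine(frames):
    """Build a 2-3 field stream whose parity strictly alternates (test input only)."""
    stream, parity = [], 0                     # 0 = top field, 1 = bottom field
    for n, frame in enumerate(frames):
        fields = split_fields(frame)
        for _ in range(3 if n % 2 else 2):
            stream.append((parity, fields[parity]))
            parity ^= 1
    return stream


def weave(f1, f2):
    """Interleave a top and a bottom field (given in either order) into one frame."""
    top, bottom = (f1, f2) if f1[0] == 0 else (f2, f1)
    frame = []
    for t_line, b_line in zip(top[1], bottom[1]):
        frame += [t_line, b_line]
    return frame


def inverse_telecine(stream):
    # Drop a field if it repeats the field two positions earlier (same parity).
    kept = [f for i, f in enumerate(stream) if i < 2 or f != stream[i - 2]]
    # What remains is two fields per original frame; weave each pair back together.
    return [weave(kept[i], kept[i + 1]) for i in range(0, len(kept), 2)]


frames = [[f"{name}{row}" for row in range(4)] for name in "ABCD"]   # 4 tiny 4-line frames
print(len(telecine(frames)), inverse_telecine(telecine(frames)) == frames)   # 10 True
```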

The photo below illustrates reverse 2-3 telecine.

It’s that simple, with one little caveat: digital displays like LCDs and plasmas don’t “operate” at 24 Hz. They refresh the image on their face at a rate of 60 Hz. So now that we have 1080p24 “reconstituted” as it were, we need to convert it to 1080p60. To do that, we use the same 2-3 cadence. That is, we show the first frame twice, the second we show three times, the third we show twice, and the fourth is shown three times. So from 24 frames each second, we get 60.
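The frame repetition for a 60 Hz display follows the same cadence. Here is a sketch (the function name is hypothetical) that also tallies how long each film frame stays on screen:

```python
from fractions import Fraction


def frames_for_60hz(frames):
    """Show 24 fps frames on a 60 Hz display: twice, three times, twice, three times, ..."""
    shown = []
    for n, frame in enumerate(frames):
        shown.extend([frame] * (3 if n % 2 else 2))
    return shown


refreshes = frames_for_60hz("ABCD")
print("".join(refreshes))                       # AABBBCCDDD
# Time each frame stays on screen: 1/30 s or 1/20 s, averaging 1/24 s.
durations = [Fraction(refreshes.count(f), 60) for f in "ABCD"]
print(durations, sum(durations))
```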

Some of you who are more conversant with the progressive scan DVD realm are probably already balking at this, citing the trouble we ran into there with putting 24p on DVD and the bumps in the road with getting it back out. Fortunately, those problems are for the most part a relic of the past. The issue in the DVD era was that films were first transferred to interlaced video. Often they were manipulated or even edited in that format on equipment oblivious to the 2-3 cadence within, which would then break that cadence. We would then feed that potentially imperfect interlaced signal to a DVD video encoder which had to “detect” the film cadence within, more often than not with less than perfect results.

In this HD Digital era we are either shooting 1080p24 digital or we are transferring film to 1080p24. There is no interlaced intermediary. When it comes time to convert it to 1080i60 for transmission or storage on disc, we are feeding a perfect digital p24 stream to the encoder which turns out a 1080i60 signal with, for all intents and purposes, a “perfect” 1080p24 buried within. All it takes is correct video processing at our end (the high definition DVD player and/or display) to realize it.

(2) You might have heard of a slight variation on this, known as Progressive Split Frame. It was a way for Sony to get their legacy gear, such as D5 tape, to handle 1080p24. It is essentially a 1080i48 signal which is carrying a 1080p24 source, consisting of a simple 2-2 cadence (as opposed to the 3-2 cadence being carried by 1080i60). While there have been a couple of projectors able to handle this format natively, it is at this time of little concern to consumers.

But Do You Really Need 1080p?

Well, the first thing to come to terms with is that, as we’ve pointed out, there is an abundance of 1080p24 material out there, encoded into 1080i60 format. If you want to view it at its full potential, you need not only a device capable of displaying it, a so-called 1080-line TV, but also the ability to actually de-interlace it properly.

Some will argue that if you are seated far away and/or the screen is not enormous, you won’t “appreciate” the full detail of 1920 x 1080 (as compared to lower resolution TVs). Well, if you look at a 27″ 480i TV from 20 feet away, you could make the same argument. We could also argue that most people don’t appreciate, or even know of, reasonably good video quality to begin with. The strongest evidence for that is to compare the quantity vs. quality of the standard definition digital channels available from your satellite or cable provider with a good DVD in the same format, or even with a standard definition terrestrial broadcast with a reasonably good signal. Even the most massive compression artifacts are apparently acceptable to most viewers, such that broadcast content providers fill up their bandwidth with hundreds of programs (and maximize compression to do it) with little complaint from their subscribers.

In that realm, if that’s your baseline, then yes, 1080p vs. 1366 x 768 (or whatever your number) is more of a feel-good numbers game. But that’s not us, and if you’re reading this, we’re betting that’s not you either.

The point is, if you want to view the inherently 1080p24 content which is out there (and even native 1080i content) with maximum resolution (and we maintain that an enthusiast who sets up their viewing environment to get the most out of it can see the difference), you need a display capable of 1080p that keeps the signal in a 1080 line format from input to display surface.

Now, if we want to get into the evils of scaling, we could say that any display other than 720 x 480 is a compromise when watching standard definition DVD content, which is indeed true. However, avoiding that compromise requires a practical sacrifice of its own.

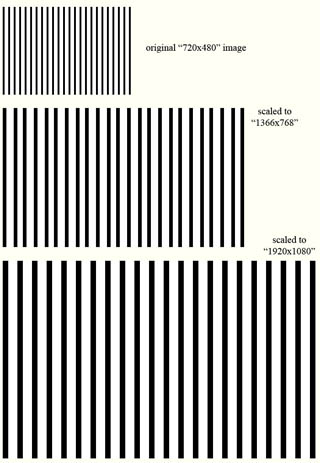

To illustrate this, we are going to use simple vertical line patterns. We’ve zoomed them up to make them easily viewable on your computer screen. They are of course only 1/10th the full raster, but they make the same point (if you imagine each pattern stacked 10 x 10 to fill a display, you’ll get the whole picture).

Our ‘original’ image is black lines, one pixel wide, with white space two pixels wide between them. We’re going to scale that up first, and we will do it two ways. First, we’ll do it the cheap way, where the scaler simply replicates data to fill in the spaces. Second, we’ll do it the better way, which involves filtering and interpolating data so that each new pixel value is a best guess at what it would be if it were re-sampled from the original image (which it can’t be).

The picture below shows the cheap method, which simply replicates existing data to fill in the ‘extra’ pixels. The smallest set of vertical lines is the original 720 x 480; next comes what happens when that is scaled to 1366 x 768 (next largest), and then to 1920 x 1080 (largest).

The artifacts are not immediately apparent, but notice that in the 1366 x 768 pattern scaled from the 720 x 480 pattern, the vertical lines are not all the same width, nor is the spacing of white versus black the original 2:1 ratio. Similarly, in the 1920 x 1080 pattern scaled from the 720 x 480 pattern, while the width of the black lines happens to be constant, the 2:1 ratio of white space to black line is not preserved.
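The uneven widths can be checked numerically. This sketch assumes plain pixel replication (nearest neighbour, where output pixel x copies source pixel x * 720 // width) and one 720-pixel row of the 1-black/2-white pattern, then measures the run widths after scaling. The helper names are hypothetical.

```python
BLACK, WHITE = 0, 1
pattern = [BLACK, WHITE, WHITE] * 240   # one 720-pixel row: 1 px black line, 2 px white gap


def scale_nearest(row, dst_w):
    """Pixel replication: output pixel x copies source pixel x * src_w // dst_w."""
    src_w = len(row)
    return [row[x * src_w // dst_w] for x in range(dst_w)]


def run_widths(row, value):
    """Set of widths of the consecutive runs of `value` in a row."""
    widths, run = set(), 0
    for v in row + [None]:              # the sentinel flushes the final run
        if v == value:
            run += 1
        elif run:
            widths.add(run)
            run = 0
    return widths


for width in (1366, 1920):
    out = scale_nearest(pattern, width)
    print(width, sorted(run_widths(out, BLACK)), sorted(run_widths(out, WHITE)))
# 1366 [1, 2] [3, 4]   <- black lines of two different widths
# 1920 [3] [5]         <- even widths, but 5:3 instead of the original 2:1
```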

The full-size version of the photo below is animated.

Are 1080p Scaling Artifacts Worth Worrying About?

Is this a subtle difference not worth worrying about? Not really. Consider just two lines moving across the screen. They would vary in width, and vary in distance from each other, shimmering like an artificially sharp mirage.

In the next example, shown below, the ‘original’ image is the same, but the scaling method is more sophisticated, akin to what you could expect from better scaling algorithms. With filtering and interpolation, as opposed to simple replication, the location errors are reduced (the lines remain more or less evenly spread). It’s interesting to note, though, that each line differs in where its darkest part actually falls, because the ‘peak’ of dark must be clumped to the nearest pixel location, regardless of where it should be.

The filtering and interpolation greatly reduce the obviousness of location errors, because they soften the edges of the line to do it, and average the perceived center by weighting the degree of black in the smaller lines that contribute to the larger perceived line.

This is certainly a better way to do it, but it must necessarily soften the edge, and as such, it reduces the contrast of the detail as well. Instead of full black or white, we get variations in between, i.e., a lot of gray, and more relevantly, very little, if any, completely black or white.
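The loss of full black can be shown with linear interpolation, used here as a stand-in for the better filtering scalers (real ones typically use longer filters, so treat this as an assumption). Each output pixel is interpolated from the two source pixels nearest its centre; counting the results shows that most pixels come out gray and almost none come out fully black, where a third of the original pixels were.

```python
def scale_linear(row, dst_w):
    """Linear interpolation: blend the two source pixels nearest each output pixel's centre."""
    src_w = len(row)
    out = []
    for x in range(dst_w):
        pos = (x + 0.5) * src_w / dst_w - 0.5      # output centre in source coordinates
        pos = min(max(pos, 0.0), src_w - 1.0)      # clamp at the picture edges
        i = min(int(pos), src_w - 2)
        f = pos - i
        out.append(row[i] + (row[i + 1] - row[i]) * f)
    return out


pattern = [0, 1, 1] * 240   # 720 px: 0 = black, 1 = white; one third of the pixels are black

for width in (1366, 1920):
    out = scale_linear(pattern, width)
    gray = sum(1 for v in out if 0 < v < 1) / width
    black = sum(1 for v in out if v == 0) / width
    print(f"{width}: {gray:.0%} gray, {black:.1%} pure black")
```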

The full-size version of the photo below is animated.

While the 480p native pattern is crisp and maintains full contrast, the scaled-up versions aren’t. Now, we could say that many of the edges that are so crisp in the 480p native pattern aren’t actual video data, but rather the pixel structure itself, and that is indeed true. However, what cannot be argued is that some degree of detail is in fact lost, in terms of contrast amplitude, AND that the character of the lines will change, albeit more subtly, with horizontal movement.

This is especially true of the 768p scaled image, where the lines look visibly different, and even uneven, from line to line, illustrating that when scaling up, more resolution can be a significant advantage in preserving fine detail, even with low resolution sources.

The pattern scaled up to the 1080p equivalent actually isn’t too bad. Given what 1080p allows with a 1080p signal, we’ll gladly take it.

It is interesting to note, though, that in both cases the higher resolution output image has less obvious artifacts. If you are going to watch standard definition content on a high definition display, this makes a case not only for the highest resolution display you can get, but also for very good scaling algorithms that minimize the visibility of these inherent scaling artifacts while sacrificing as little detail as possible.

Don’t get us wrong. Well-scaled standard definition content can look darn fantastic on a high definition display. We just wanted to point out that there’s a compromise inherent to the ‘upgrade.’

One of the easiest Do It Yourself examples of poor upscaling is simply feeding an LCD monitor that is, say, 1280 x 1024 a computer desktop set to 800 x 600. The LCD has more than enough resolution, so to speak, but the text is fuzzy, the icons are fuzzy, and everything looks off. In fact, if the pixel phase and clock of the input signal are not set correctly (either by auto-calibration within the monitor or manually in the setup menu) to map the pixel values from the scan line information, you can get similar effects even at the native input rate, with clear text in some areas and fuzzy text in others.

So, should we all just revert to native 480p displays? Hardly. The future is 1080p, and 480p is at the beginning of a gradual obsolescence. If you’re going to pick a display optimized for one resolution, go for the new standard, which will be 1080.

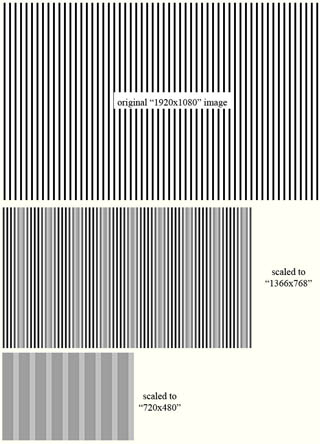

Now, there’s the argument that you can simply scale down the 1080p signal to standard definition, and if you can’t see the pixel structure (i.e., if the screen is small or you are seated far away), it won’t make any difference.

We say, “Bunk.”

Below is the inverse of the above patterns, starting this time with 1920 x 1080, and then illustrating what happens when that gets scaled down to 1366 x 768 and 720 x 480 output respectively.

The full-size version of this photo is animated.

Notice that when you scale down the fine detail, you get moiré patterns developing in the vertical lines. When scaled down to 1366 x 768, as the lines move across the screen, the new pixel locations fall in and out of phase with the original image values, and you alternate between sets of relatively clear line structures and gray areas. In other words, you create wider visual structures where the original image was merely fine texture. This is QUITE obvious, even if you can’t see detail on the image at the level of a single pixel.
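The moiré can be reproduced with an area-averaging (box filter) downscaler, assumed here for simplicity. Each of the 1366 output values is the mean of the 1920-pixel source area it covers; the sketch then records, for every original black line, the darkest output value touching it. Lines that happen to fall inside one output pixel stay fairly dark, while lines that straddle two output pixels smear into gray, and the two cases alternate across the screen.

```python
def scale_area(row, dst_w):
    """Box-filter downscaling: each output pixel is the mean of the source area it covers."""
    src_w = len(row)
    scale = src_w / dst_w                      # source pixels per output pixel
    out = []
    for x in range(dst_w):
        start, end = x * scale, (x + 1) * scale
        total, i = 0.0, int(start)
        while i < src_w and i < end:
            total += row[i] * (min(end, i + 1) - max(start, i))   # weight by overlap
            i += 1
        out.append(total / scale)
    return out


SRC_W, DST_W = 1920, 1366
pattern = [0, 1, 1] * (SRC_W // 3)             # 0 = black, 1 = white
out = scale_area(pattern, DST_W)
scale = SRC_W / DST_W

# For each original black line, the darkest output pixel it touches (0.0 = full black).
darkest = [min(out[int(c / scale)], out[min(int((c + 1) / scale), DST_W - 1)])
           for c in range(0, SRC_W, 3)]
print(f"{min(darkest):.2f} .. {max(darkest):.2f}")   # 0.29 .. 0.64
```

Since full black is 0.0 and white is 1.0, a line whose darkest value is near 0.29 is still clearly visible, while one near 0.64 is a light gray smudge.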

With the 720 x 480 output image, you get a similar deal, but without any fine detail, and with the same artifacts: wide areas of shading that simply don’t exist in the original image. Now, you could argue that the 1920 x 1080 pattern is such fine detail that it doesn’t apply to real world images. Oh, really? Has anyone ever considered that 1920 x 1080, the highest ATSC resolution HD format, is a mere 2 megapixels? Is somebody actually going to propose that the grille of a truck in the distance will neither move nor have closely spaced lines in the image? Hmmm?

Now, we will grant detractors of this example that many scaling algorithms will do a better job than performed in the example when it comes to trading off picture detail for lack of visible artifacts, but the fundamentals remain.

See why talking about 1080p is so important?

Conclusions About 1080p

Well, our purpose on the web is presumably to collect and pass on information: facts and opinions. And the fact is, 1080p does matter. We all need it, so in our opinion, you should be concerned. This is our attempt to convey that message.

Of course, you don’t need to have a 1080p display to have a great image. In fact, we’re happy to concede that in most cases, with most material, there are many variables, starting from basic calibration, the environment (your room), yada yada, that are far more important than having a real 1080p display. In fact, most of us don’t own a 1080p display for reasons of price and the move of the technology curve, first generation issues, etc. But, the point remains, if we narrow down the issue to a single parameter, that of resolution, aside from possible future displays that are integer multiples of 1920 x 1080 (serving to diminish pixel structure and improve the performance of scaled image output from lower resolution formats), 1080p is king, period.

Note: There are a number of corporations who have licensed the use of our technical articles in teaching staff courses. Their license extends through the current article. For corporations not licensed, you must contact our office to obtain a license before using this article or any of our other articles in conducting staff courses or for any other commercial purpose. All Secrets material is copyrighted.