Everyone thought that this would be the standard HDTV for at least the next 20 years, just as standard TVs (SD – 4:3 Standard Definition – 720 x 480 USA) had been in our homes for nearly half a century. We all assumed that 1920 x 1080i would eventually be the only HDTV resolution, with 1280 x 720p tossed aside.

Wrong!!

Now, high definition means many things, specifically, more formats than we have toes.

The 1920 x 1080i format quickly became the standard high definition format with satellite and cable TV, but the number of HD channels was limited, and it was mostly movies and specialty programs. National broadcast studios took their time because it was very expensive to convert their video cameras, control room electronics, and over-the-air broadcast equipment to HD. So, for a very long time, the evening news was still in SD (Standard Definition – 4:3 Old TVs – 720 x 480i).

It took years before we could watch a football game without at least one of the TV cameras being standard definition that was up-sampled to 1080i. It has only been in the last couple of years that the football games use cameras that are all native 1920 x 1080. And, that is with high definition having been established nearly 20 years ago.

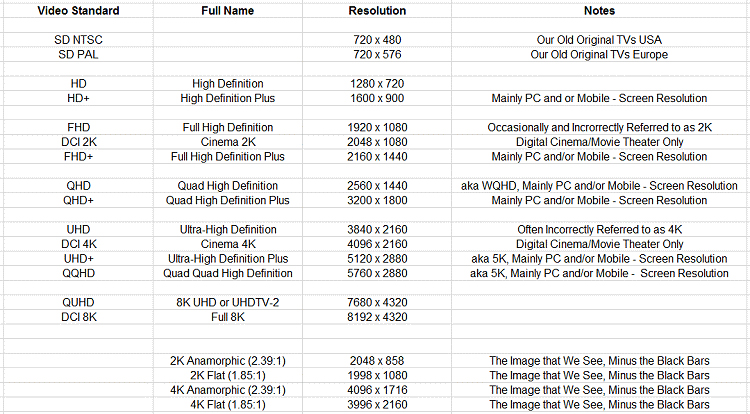

UHD (3840 x 2160) emerged about 2 years ago, and at Christmas time in 2016, news images showed UHD TVs flying off the shelves at stores across the nation. We were all set for the latest and greatest, the wrongly named 4K TVs. Many companies list UHD TVs as 4K, but 4K actually refers to the resolution of the video cameras that studios use to shoot most movies: 4096 x 2160 (see the table shown below). Even movies shot on film are scanned into the studio computers at 4096 x 2160 instead of 3840 x 2160.

So, without further delay, here is a table of the various resolution formats that exist. Note that some of them are native video resolutions, while others are computer and mobile screen resolutions.

Yes, indeed, that new “4K” TV that you just bought is already out of date. The latest and greatest is actually 7680 x 4320, which is already available in Japan. It will be wrongly called 8K, but 8K is actually 8192 x 4320 and, like 4K, is the movie studio version.

Why do the movie studios use 2048 instead of 1920, 4096 instead of 3840, and 8192 instead of 7680? Well, the TV industry did not consult with Hollywood when creating the various high definition formats. The bottom line is that, for the home versions of movies shot in any of these high definition formats, we lose 6.25% of the horizontal picture (1920 is 93.75% of 2048, and the same ratio holds for the two larger pairs). Shades of Pan & Scan in the old days, when we lost about 50% of the horizontal image watching a wide screen movie on a standard TV!!
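The 6.25% figure follows from the widths alone. Here is a minimal Python sketch of the arithmetic, assuming the home version is made by simply cropping the studio frame to the consumer width with no rescaling. The function name `horizontal_loss` is made up for this illustration.

```python
def horizontal_loss(studio_width: int, home_width: int) -> float:
    """Fraction of the studio frame width lost when cropping to the home width."""
    return (studio_width - home_width) / studio_width


# (studio width, home width) pairs mentioned in the text
PAIRS = [(2048, 1920), (4096, 3840), (8192, 7680)]

for studio, home in PAIRS:
    # Each pair has the same 16:15 width ratio, so the loss is identical.
    print(f"{studio} -> {home}: {horizontal_loss(studio, home):.2%} lost")
```

All three pairs share the same 16:15 width ratio, which is why the loss is the same at every tier.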

Studios could convert the 4096 x 2160 theater version to 3840 x 2160 for home theater, but that would involve resampling the pixels, which could reduce sharpness.

What can we do about this? Well, just go with the flow. Accept the fact that all the formats exist, and don’t panic. UHD is about 2 years old, and there are still no UHD channels on the major US satellite and cable TV carriers. UHD TV is limited to programs streamed from Netflix, but the bit rate is only 17 Mbps (Megabits per second), and UHD really needs 100 Mbps to match the image quality of Blu-ray movie discs, which deliver HD at 25 Mbps. It is unlikely that many UHD TV channels will appear on satellite and cable, because each one takes up four times the bandwidth of an HD channel, and the spectrum is already filled with hundreds of channels. There is no space for UHD. QUHD (7680 x 4320) would take up the bandwidth of sixteen HD channels!!
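The "four times" and "sixteen times" multiples are pixel-count ratios. Here is a rough sketch, assuming bit rate scales linearly with pixel count at the same frame rate and compression efficiency. That is a simplification, since real codecs do not scale so neatly. The 25 Mbps and 17 Mbps figures are the ones quoted in the text; the function names are illustrative only.

```python
HD = (1920, 1080)
UHD = (3840, 2160)
QUHD = (7680, 4320)

BLU_RAY_HD_MBPS = 25   # HD Blu-ray figure quoted in the text
NETFLIX_UHD_MBPS = 17  # Netflix UHD streaming figure quoted in the text


def pixel_ratio(fmt, base=HD):
    """How many times more pixels `fmt` has per frame than `base`."""
    return (fmt[0] * fmt[1]) / (base[0] * base[1])


def scaled_bitrate_mbps(fmt, base_mbps=BLU_RAY_HD_MBPS):
    """Bit rate needed under the (assumed) linear pixel-count scaling."""
    return pixel_ratio(fmt) * base_mbps


for name, fmt in [("UHD", UHD), ("QUHD", QUHD)]:
    print(f"{name}: {pixel_ratio(fmt):.0f}x HD pixels, "
          f"~{scaled_bitrate_mbps(fmt):.0f} Mbps at Blu-ray quality")

# Share of the scaled UHD bit rate that a 17 Mbps stream actually delivers
print(f"Netflix UHD: {NETFLIX_UHD_MBPS / scaled_bitrate_mbps(UHD):.0%} of that rate")
```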

For the time being, UHD will be limited to UHD Blu-ray movie discs, which are becoming available (they are marketed as “4K”, but they are 3840 x 2160, not 4096 x 2160). Note that many of them are “Fake 4K”, meaning that they are actually 1920 x 1080 up-sampled to 3840 x 2160. To add insult to injury, even the “Real 4K” (native) 3840 x 2160 movies (4096 x 2160 at the studio) mostly use 1920 x 1080 for the digital special effects (VFX).

UHD TVs are also marketed as “4K” even though they are 3840 x 2160, so I suppose the 4K label will just include both resolutions, and consumers will know that their home UHD movies are 3840 x 2160, and the theater movies are 4096 x 2160. Or, maybe they won’t know and won’t care. I fall into the category of knowing, but not caring.

I have purchased a large (75” diagonal) UHD TV and will just buy the “real” (native) 3840 x 2160 Blu-ray UHD movie discs, especially now that some of my favorites, such as The Guns of Navarone and Lawrence of Arabia in UHD, have been announced.

But, there is also a slight improvement in the sharpness of HD programs, since the TV up-samples 1920 x 1080 to 3840 x 2160 using algorithms that improve detail. So, even if you don’t watch UHD movie discs that are native 3840 x 2160, you will see a higher quality image even with HD Blu-ray discs (1920 x 1080). I don’t know (yet) if HD programs on satellite and cable will look sharper, because there is so much compression in the video signal, and thus, a lot of artifacts.

I am not sure that 7680 x 4320 (“8K”) will make it to the US consumer. Perhaps it will, but the resolution is so high, you would need a 10-foot screen to see the difference between “8K” and “4K”. And, with Hollywood probably still rendering the VFX at 1920 x 1080, what is the point?
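One way to sanity-check the screen-size claim is a back-of-the-envelope acuity estimate. The sketch below is not from the article. It assumes a viewer who resolves about 1 arcminute of detail (a common rule of thumb), a 16:9 screen, and that "10-foot" means the diagonal. The function name and parameters are hypothetical.

```python
import math


def max_useful_distance_ft(diagonal_in, horizontal_pixels,
                           aspect=(16, 9), acuity_arcmin=1.0):
    """Distance (feet) beyond which one pixel subtends less than the assumed acuity."""
    w, h = aspect
    width_in = diagonal_in * w / math.hypot(w, h)  # screen width from its diagonal
    pixel_pitch_in = width_in / horizontal_pixels  # width of a single pixel
    # Distance at which one pixel spans exactly `acuity_arcmin` of visual angle
    distance_in = pixel_pitch_in / math.tan(math.radians(acuity_arcmin / 60))
    return distance_in / 12


for diagonal in (75, 120):  # a 75-inch TV and a 10-foot (120-inch) screen
    d = max_useful_distance_ft(diagonal, 3840)
    print(f'{diagonal}" screen: UHD pixels blend together beyond ~{d:.1f} ft')
```

Under these assumptions, UHD pixels on a 75-inch set already blend together at roughly 5 feet, and on a 10-foot screen at a bit under 8 feet. Only viewers sitting closer than that would have anything to gain from 7680 x 4320.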

Acknowledgments: The author wishes to thank Stacey Spears and Brian Florian for their help in putting together a table that summarizes the various formats. Also, some of the information in the table, as well as the figure of a Bayer color sensor pattern, was gathered from Wikipedia.