Do you ever think about the process that studios go through to create those recordings?

I have wondered about this for a long, long time, and I decided to find out how complex the procedure is. Well, it is very complex, and it is obvious that a lot of very talented recording engineers have contributed to developing the methods.

The purpose of this article is not to be a guide to methods of studio recording but to illustrate how many options there are in the process so that, as consumers, we understand how much hard work has gone into that process.

There is a lot to see here, so let’s get to it.

A list of the Procedural Steps in a Recording Studio Session

1. Planning the Session

- Objectives: Determine the purpose of the recording (e.g., music, podcast, voiceover).

- Schedule: Allocate time for setup, recording, and troubleshooting. Ensure you have enough buffer time.

- Materials: Prepare lyrics, scripts, or reference tracks. Share them with participants in advance.

- Checklist: Ensure all equipment and accessories (cables, adapters, etc.) are ready.

2. Setting Up

Equipment

- Microphones:

- Choose based on the needs (e.g., condenser mics for vocals, dynamic mics for instruments).

- Position correctly to capture sound effectively (e.g., cardioid mics point toward the sound source).

- Audio Interface – ADC/DAC:

- Connect the microphones and instruments to the computer.

- Ensure that the interface is compatible with the recording software.

- Software:

- Install a Digital Audio Workstation (DAW) like Pro Tools, Cubase, Logic Pro, Ableton Live, or PreSonus Studio One.

- Ensure software is updated and ready to use.

- Headphones/Monitors:

- Use closed-back headphones for isolation.

- Position the studio monitors so that the two speakers and the listener’s head form an equilateral triangle.

- Pop Filter:

- Attach it to the microphone stand to reduce plosive sounds.

- Acoustic Treatment:

- Add foam panels, bass traps, or diffusers to reduce reflections and noise.

Cable Management

- Organize cables to avoid tripping hazards or interference.

- Use XLR cables for microphones, TS (unbalanced) instrument cables for guitars and basses, and TRS (balanced) cables for line-level connections.

3. Testing

- Levels: Set gain levels on the audio interface so that input signals are clear without distortion.

- Monitoring: Test headphones and monitor speakers for accurate sound output.

- Recording Test: Record a short clip to check for noise, distortion, or latency issues.

4. Optimizing the Recording Room(s)

- Noise: Ensure the room is quiet. Turn off fans, AC, and other noisy equipment.

- Lighting: Set comfortable lighting to help participants focus and feel relaxed.

- Comfort: Provide seating, water, and breaks for performers.

5. The Session

- Warm-Up: Allow artists to warm up their voices or instruments.

- Guide Artists: Provide feedback during recording to get the best performance.

- Take Notes: Record multiple takes and note the best ones for editing.

- Save Frequently: Back up your work regularly to avoid data loss.

6. Post-Session

- Organize: Label and save files systematically for easy access during editing.

- Backup: Store recordings on an external drive or cloud storage.

- Plan Next Steps: Discuss mixing and mastering timelines, if applicable.

Here are the details of setting up the recording console:

1. Prepare the Workspace

- Choose a suitable location: Ensure the recording environment is acoustically treated to minimize unwanted noise and reflections.

- Organize equipment: Arrange cables, microphones, headphones, and monitors for easy access.

2. Power and Connections

- Power the console: Connect the power supply to the digital console and plug it into a stable power source with surge protection.

- Connect peripherals: Attach essential devices such as:

- Microphones (via XLR inputs)

- Instruments (via TRS line inputs, Hi-Z instrument inputs, or DI boxes)

- Monitors and headphones (via outputs)

3. Audio Interface and Connectivity

- Connect to a computer or DAW:

- Use USB, FireWire, Thunderbolt, or Dante, depending on your console and computer compatibility.

- Install any required drivers or software for the console.

- Sync clock: Ensure your console and other digital devices run at the same sample rate and follow a single clock source to avoid synchronization issues.

4. Configure Input and Output Routing

- Assign inputs: Map microphones and instruments to the correct channels on the console.

- Set outputs: Route the audio to headphones, monitors, or external devices.

- Check signal flow: Ensure the signal flows from the inputs through processing, routing, and outputs as expected.

5. Adjust Preamp Levels

- Set gain (see also notes on gain vs. volume below):

- Adjust the gain knob on the console for each microphone or line input to achieve a strong signal without clipping.

- Monitor levels using the console’s meters.

6. Set Up Channel Processing

- Apply basic processing:

- Engage high-pass filters to cut low-frequency noise.

- Adjust EQ settings for clarity.

- Add compression or gating as needed.

- Insert effects:

- Set up reverb, delay, or other effects if required for the recording.

7. Create Monitor Mixes

- Set headphone mixes: Create custom monitor mixes for performers using auxiliary sends.

- Set control room monitoring: Ensure the mix in the control room is optimal for accurate monitoring.

8. Test and Troubleshoot

- Conduct a test recording:

- Record a short test segment to confirm everything is functioning correctly.

- Check for noise: Listen for hums, buzzes, or other unwanted noise.

- Address latency issues:

- Optimize buffer size and latency settings in your DAW or console software.
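As a rough illustration of why buffer size matters, the delay contributed by one buffer is its length in samples divided by the sample rate. The sketch below uses a hypothetical helper name and counts a single buffer only; actual round-trip latency also includes converter and driver overhead, which varies by system.

```python
def buffer_latency_ms(buffer_samples: int, sample_rate_hz: int) -> float:
    """Latency in milliseconds contributed by one audio buffer."""
    return buffer_samples / sample_rate_hz * 1000.0

# Compare common buffer sizes at a 48 kHz sample rate.
for size in (64, 128, 256, 512, 1024):
    print(f"{size:>5} samples at 48 kHz: {buffer_latency_ms(size, 48_000):.2f} ms")
```

Smaller buffers reduce the delay performers hear in their headphones but put more load on the computer, so the setting is usually a compromise.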

9. Save Settings

- Store console presets: Save input/output routing, channel settings, and effects as a preset if your console allows.

- Document setup: Take notes or pictures of physical connections for future reference.

10. Final Preparations

- Check performer comfort: Ensure all microphones and headphone levels are comfortable for performers.

- Monitor levels: Keep an eye on input and output meters during the session to avoid clipping.

NOTES: Gain vs. Volume

The terms gain and volume are related to audio, but they serve different purposes and are adjusted at different points in the signal chain. Here’s the breakdown:

1. Gain:

- What it does: Gain controls the input level of a signal. It determines how much amplification is applied to the audio signal when it first enters a device (e.g., a microphone preamp, mixer, or guitar amplifier).

- Where it’s applied: Gain is applied at the beginning of the signal chain, before any further processing or amplification.

- Purpose: Gain ensures the input signal is strong enough to work effectively with the audio equipment without introducing noise or distortion.

- Think of it as: The sensitivity of the system to the incoming signal.

- Impact on sound: Too low a gain can result in a weak signal with a poor signal-to-noise ratio, while too high a gain can lead to clipping or distortion as the signal exceeds the system’s capacity.

2. Volume:

- What it does: Volume controls the output level of a signal. It adjusts how loud the sound is coming out of the speakers, headphones, or other outputs.

- Where it’s applied: Volume is adjusted later in the signal chain, after the signal has been processed or amplified.

- Purpose: Volume lets you control how loud you hear the final sound.

- Think of it as: The loudness knob for the listener.

- Impact on sound: Unlike gain, increasing volume won’t distort the signal unless the output device is being overdriven (e.g., speakers maxing out).

Key Differences:

| Aspect | Gain | Volume |
|---|---|---|
| Position in Chain | Input level (early in the chain) | Output level (later in the chain) |
| Purpose | Signal strength for processing | Loudness for the listener |
| Effect on Signal | Affects tone and potential distortion | Affects perceived loudness |
| Adjustment Goal | Optimize signal-to-noise ratio | Adjust listening level |

Analogy:

Imagine you’re filling a glass with water:

- Gain is how much water (signal) flows into the system (the glass). If you pour too little, the glass is empty (weak signal). If you pour too fast, it overflows (distortion).

- Volume is how much of that water is poured out for you to drink (how loud it’s delivered to the listener).

Both are critical but serve different roles. Trim is a related term: it refers to a fine adjustment of the gain.
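The effect of too much gain can be sketched numerically. The example below is a simplified model, not how any particular preamp behaves: samples are floats where ±1.0 stands for the system's maximum level, and the function name is made up for illustration.

```python
def apply_gain_db(samples, gain_db):
    """Scale samples by a gain in dB, then hard-clip to the [-1.0, 1.0] range."""
    factor = 10 ** (gain_db / 20)
    return [max(-1.0, min(1.0, s * factor)) for s in samples]

signal = [0.0, 0.25, 0.5, -0.5, -0.25]
print(apply_gain_db(signal, 6.0))   # roughly doubled, still below the maximum
print(apply_gain_db(signal, 12.0))  # roughly 4x: the +/-0.5 samples clip at +/-1.0
```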

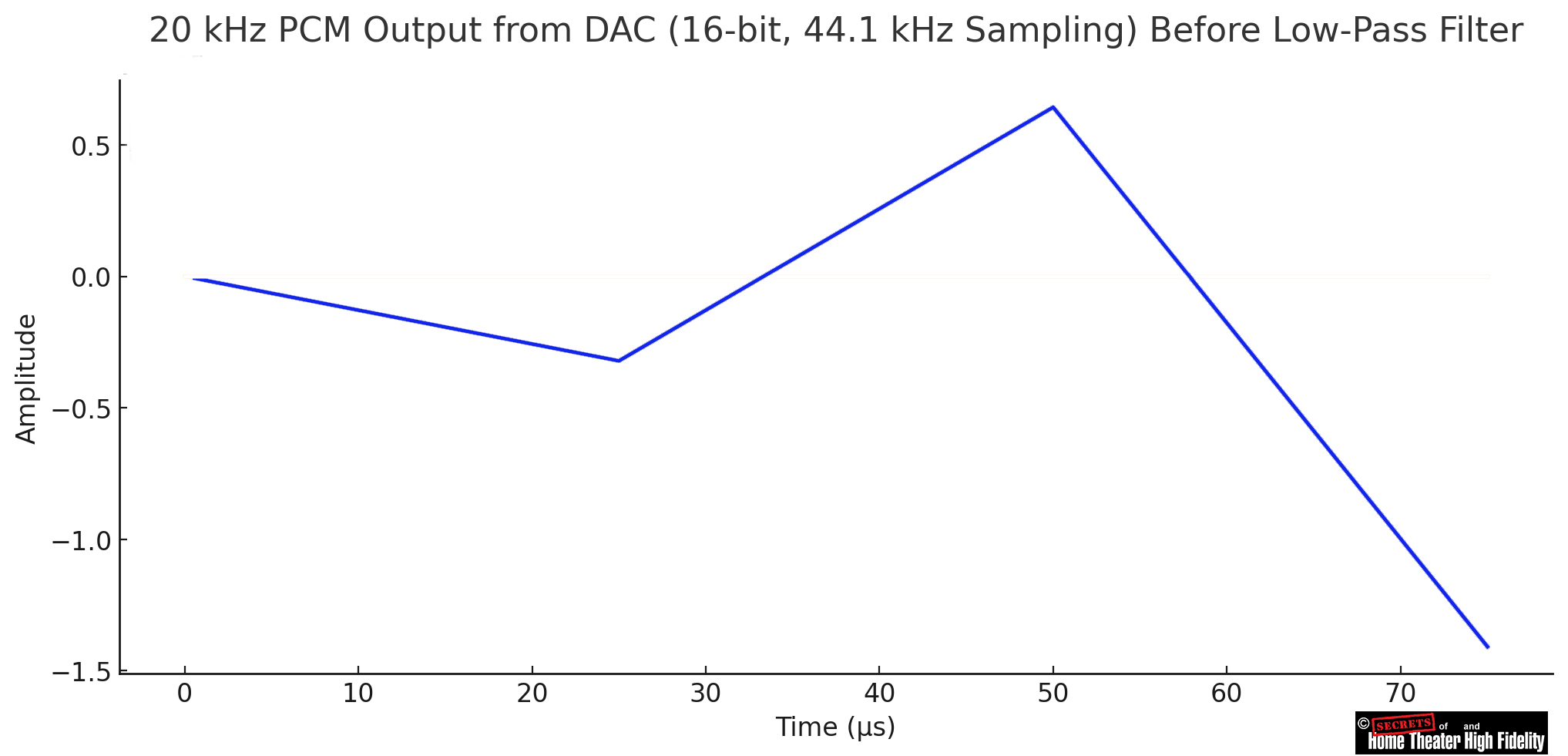

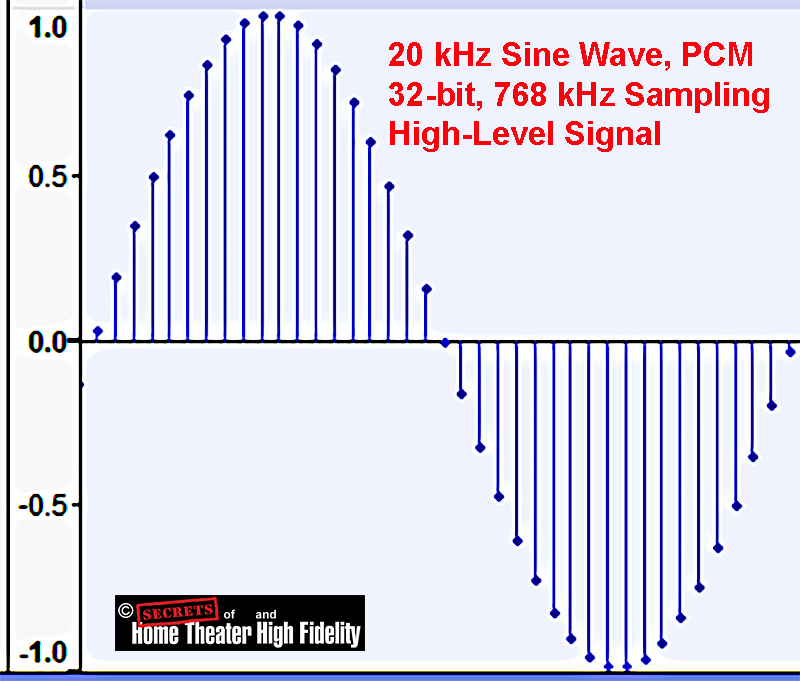

The ADC (Analog-to-Digital Converter) has to be calibrated.

1. Understand the Studio’s Calibration Standards

- Decide on the reference level you want to use. A common standard is +4 dBu, which corresponds to 0 VU on analog equipment. +4 dBu is 1.228 V RMS.

- Understand the digital reference level (e.g., -18 dBFS) that corresponds to the chosen analog reference. This defines the headroom before clipping in the digital domain.
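The relationship between these numbers can be checked with a short calculation. This is a minimal sketch with illustrative function names; it relies on the standard definition of 0 dBu as about 0.7746 V RMS and on the example alignment of +4 dBu = -18 dBFS mentioned above.

```python
import math

DBU_REF_VOLTS = math.sqrt(0.6)  # 0 dBu is about 0.7746 V RMS (1 mW into 600 ohms)

def dbu_to_volts(dbu: float) -> float:
    """Convert a level in dBu to volts RMS."""
    return DBU_REF_VOLTS * 10 ** (dbu / 20)

def dbu_at_full_scale(analog_ref_dbu: float, digital_ref_dbfs: float) -> float:
    """Analog level that lands exactly on 0 dBFS for a given alignment."""
    return analog_ref_dbu - digital_ref_dbfs

print(round(dbu_to_volts(4.0), 3))                  # voltage at +4 dBu
clip_dbu = dbu_at_full_scale(4.0, -18.0)            # level that reaches 0 dBFS
print(clip_dbu, round(dbu_to_volts(clip_dbu), 2))   # in dBu and in volts RMS
```

With this alignment there are 18 dB of headroom between the reference level and digital clipping.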

Most recording studios aim to keep the maximum recording level at around -6dBFS or lower, especially for digital recordings. Here’s why:

2. Headroom for Processing

- Keeping peaks below -6dBFS leaves room for later processing (EQ, compression, limiting, etc.) without the risk of digital clipping.

- Mastering engineers prefer some headroom to work with, so recordings are not already at or near 0dBFS.

3. Avoiding Digital Clipping

- Digital systems hard clip at 0dBFS, which results in unpleasant distortion.

- Aiming for peaks at -6dBFS to -10dBFS ensures a clean signal without accidental clipping.

4. Consistency Across Tracks

- Recording all tracks at similar, moderate levels simplifies gain staging throughout the mixing process.

- Uniform levels make it easier to blend multiple tracks without sudden volume jumps.

5. Analog Gear & Converters Sound Better at Moderate Levels

- Many high-end analog preamps, compressors, and AD converters perform best when signals are not pushed too hot.

- Some gear introduces unwanted saturation or harmonic distortion when driven too hard.

Typical Recording Level Guidelines

- Peaks: -6dBFS to -10dBFS

- Average Levels (RMS/LUFS): -18dBFS to -12dBFS

- Final Mix/Master: Leaves headroom for mastering (peaks around -3dBFS before final limiting).
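To make the difference between peak and average level concrete, here is a small sketch that measures both for a test tone. It assumes floating-point samples where 1.0 is full scale and a non-silent signal; the function names are illustrative. Note that some meters follow a convention in which a full-scale sine reads 0 dBFS RMS, which would shift the RMS figure up by about 3 dB.

```python
import math

def peak_dbfs(samples):
    """Highest absolute sample value, in dB relative to full scale."""
    return 20 * math.log10(max(abs(s) for s in samples))

def rms_dbfs(samples):
    """Root-mean-square level, in dB relative to a full-scale value of 1.0."""
    mean_square = sum(s * s for s in samples) / len(samples)
    return 10 * math.log10(mean_square)

# Ten cycles of a 1 kHz sine at half of full scale, sampled at 48 kHz.
tone = [0.5 * math.sin(2 * math.pi * 1000 * n / 48_000) for n in range(480)]
print(f"peak {peak_dbfs(tone):.2f} dBFS, RMS {rms_dbfs(tone):.2f} dBFS")
```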

6. Gather Necessary Equipment

- Calibration Tone Generator: Many mixing consoles and DAWs have built-in tone generators; otherwise, use an external device.

- Reference Signal: This is typically a 1 kHz sine wave at a known level (e.g., +4 dBu).

- Measurement Tools: Use an oscilloscope, multimeter, or calibrated meters in your DAW.

7. Verify Analog Signal Path

- Check the analog signal chain leading into the ADC for proper connectivity and settings.

- Disable or bypass any processing (e.g., EQ, compressors) to avoid altering the reference signal.

8. Send a Reference Signal

- Generate a 1 kHz sine wave at the chosen analog reference level (e.g., +4 dBu).

- Route the signal through the studio’s signal path and into the ADC input.

9. Measure the Digital Signal

- Open your DAW or ADC control software.

- Confirm the average incoming signal level in the digital domain corresponds to the chosen calibration point (e.g., -18 dBFS for +4 dBu).

10. Adjust ADC Settings

- If the digital level does not match the expected value:

- Adjust the input gain of the ADC.

- Verify the calibration trim pots or software settings (if available).

- Ensure that the digital reading matches the expected value consistently across all channels.

11. Check Across All Channels

- Repeat the procedure for all ADC channels.

- Ensure consistent calibration across multiple inputs.

12. Test with Real-World Signals

- Record a test session to confirm that the calibration works under normal studio conditions. Make sure the signal peaks on each track stay at or below -6 dBFS, because summing tracks and adding effects during mixing and mastering raise the overall level.

- Ensure there’s no clipping or distortion and that the noise floor is acceptable.
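How much the level rises when tracks are combined can be estimated with two simple bounds. This is a sketch under idealized assumptions (equal-level tracks that are either identical or completely unrelated); real sessions fall somewhere in between, and the function names are hypothetical.

```python
import math

def worst_case_sum_dbfs(peak_dbfs: float, track_count: int) -> float:
    """Peak level if identical (fully correlated) tracks are summed."""
    return peak_dbfs + 20 * math.log10(track_count)

def uncorrelated_sum_dbfs(rms_dbfs: float, track_count: int) -> float:
    """RMS level if unrelated tracks of equal level are summed (powers add)."""
    return rms_dbfs + 10 * math.log10(track_count)

print(f"{worst_case_sum_dbfs(-6.0, 2):+.2f} dBFS")     # two identical tracks peaking at -6 dBFS
print(f"{uncorrelated_sum_dbfs(-18.0, 8):+.2f} dBFS")  # eight unrelated tracks at -18 dBFS RMS
```

Two identical tracks peaking at -6 dBFS already reach full scale when summed, which is why per-track headroom matters.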

13. Document Calibration Settings

- Record the calibration settings for future reference, including the reference level, digital level, and any specific adjustments made.

14. Maintain Regular Calibration

- Repeat the calibration periodically or whenever the studio setup changes, such as adding new hardware or moving equipment.

DACs (Digital-to-Analog Converters) that are involved in the recording session also have to be calibrated.

1. Set Reference Levels:

- Use test tones (e.g., 1 kHz sine wave at -18 dBFS or another standard level) generated by your DAW or a test tone generator.

- Measure the output of the DAC using a reliable measurement tool, such as an audio analyzer or a voltmeter.

2. Match Output Levels:

- Adjust the DAC’s output level to match the reference level. For example, if -18 dBFS is your reference, the output voltage might need to be set to 1.23V RMS, depending on the system. (+4 dBu is 1.228 Volts.)

3. Check Frequency Response:

- Ensure the DAC outputs a flat frequency response across the audible spectrum. Use frequency sweeps and analysis tools to verify this.

4. Confirm Stereo Balance:

- Use test tones to check that the left and right channels output equal levels. Adjust if necessary.

5. Room Calibration:

- If the DAC feeds studio monitors, it’s essential to calibrate the entire monitoring chain, including the DAC, amplifier, and speakers. This can be done with tools like pink noise and SPL meters.

6. Regular Maintenance:

- Repeat the calibration periodically to account for drift or changes in the equipment over time.

Notes

- High-End DACs: Professional-grade DACs are typically well-calibrated out of the box, but you should still verify their performance in your specific studio setup.

- Calibration Software: Some DACs come with software tools for calibration and testing.

- Environment: Perform calibration in the same acoustic environment as your mixing/mastering to avoid introducing room-related errors.

For the recording, studios employ a combination of well-known techniques and specialty methods to achieve high-quality recordings. Here are some commonly used and lesser-known techniques:

Recording Techniques

Layering Tracks

- Recording multiple takes of the same instrument or vocals and layering them together for a richer, fuller sound. Subtle variations add texture and depth.

Layering is a powerful and widely used technique in digital recording studios. It involves recording multiple takes or tracks of the same or complementary parts and combining them to create a richer, fuller sound. Below are some detailed insights and variations of layering techniques:

1. Vocal Layering

- Doubling: Record the same vocal part multiple times and layer them together. Slight differences in timing and tone create a natural chorus effect.

- Example: Pop and rock vocals often use doubling to add strength to the lead vocal.

- Harmonies: Layering harmonized vocal parts to enhance the melodic structure and add emotional impact.

- Whisper Tracks: Record a whisper version of the vocal and blend it subtly to add texture and air.

2. Instrument Layering

- Guitars:

- Rhythm Guitar: Record the same rhythm part with different guitars, amps, or pickup settings to create a wide stereo image. Panning one track left and the other right adds depth.

- Acoustic and Electric Blend: Layering acoustic and electric guitars can add a mix of warmth and brightness.

- Doubled Takes: Record the same riff multiple times with slight timing variations for a natural feel.

- Drums:

- Kick Drum Layering: Combine a recorded kick with a triggered sample for a blend of natural tone and punch.

- Snare Samples: Layer recorded snare hits with processed samples to add impact or depth.

- Overheads and Room Mics: Blend close mic recordings with room mic layers for a natural ambiance.

- Bass:

- Layer a clean DI signal with an amp track or a distorted version for clarity and weight.

3. Electronic and Synth Layering

- Synths: Combine multiple synth sounds to create a more complex and textured tone.

- Example: Blend a soft pad with a sharp lead synth to create a sound that cuts through but remains lush.

- Bass Synths: Layer sub-bass with higher-register synths to maintain low-end power while adding clarity.

4. Percussion and Sound Design Layering

- Percussion: Blend natural and electronic percussion for hybrid textures.

- Example: Layer an electronic clap with a real handclap for warmth and realism.

- Sound Effects: Layer sound effects to create unique textures (e.g., layering multiple explosion sounds for a cinematic impact).

5. Subtle Variations in Layering

- Timing Offsets: Slightly delay or advance a duplicate layer to create a slap-back or doubling effect.

- Pitch Shifts: Slightly detune one layer to add a chorus-like shimmer.

- Dynamic Layering: Use automation to bring in layers only during specific sections for dramatic builds and breakdowns.
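The sizes of these offsets are easier to judge with numbers. The sketch below converts a detune amount in cents (hundredths of a semitone) to a frequency and a time offset in milliseconds to samples; the function names and the example values are only illustrative.

```python
def detune_hz(frequency_hz: float, cents: float) -> float:
    """Frequency after shifting by a number of cents (1200 cents per octave)."""
    return frequency_hz * 2 ** (cents / 1200)

def ms_to_samples(offset_ms: float, sample_rate_hz: int) -> int:
    """Number of samples corresponding to a time offset."""
    return round(offset_ms / 1000 * sample_rate_hz)

print(f"{detune_hz(440.0, 10):.2f} Hz")  # A440 raised by 10 cents
print(ms_to_samples(20, 48_000))          # a 20 ms offset at 48 kHz, in samples
```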

6. Layering for Stereo Width

- Panning: Use layers panned hard left and right for a wide stereo field. Center a lead track for focus.

- Mono Compatibility: Check how layers sum to mono to avoid phase cancellation.
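A mono-compatibility check can be sketched as follows. This is a toy example, assuming two equal-length lists of samples for left and right; it shows the extreme case in which one layer is a polarity-inverted copy of the other and vanishes completely in mono. The function names are hypothetical.

```python
import math

def mono_sum(left, right):
    """Average the two channels, as a simple mono fold-down."""
    return [(l + r) / 2 for l, r in zip(left, right)]

def correlation(left, right):
    """+1 means identical channels, -1 means opposite polarity."""
    dot = sum(l * r for l, r in zip(left, right))
    energy = math.sqrt(sum(l * l for l in left) * sum(r * r for r in right))
    return dot / energy

layer = [math.sin(2 * math.pi * n / 48) for n in range(480)]
flipped = [-s for s in layer]  # same layer with inverted polarity

print(correlation(layer, flipped))                       # close to -1
print(max(abs(s) for s in mono_sum(layer, flipped)))     # nothing survives in mono
```

A correlation near zero or below is a hint that the layers may lose level when summed to mono.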

7. Advanced Techniques

- Frequency Layering: Assign layers to cover different frequency ranges. For example:

- Low: Sub-bass.

- Mid: Midrange punch.

- High: Airy or shimmering overtones.

- Blending Samples: Layer sampled instruments with live recordings for a polished yet organic sound.

- Re-amping Layers: Record a clean signal, then re-amp it through various effects or amps for tonal diversity.

Practical Tips

- Keep Layers Complementary: Avoid creating “mud” by ensuring that layers complement rather than compete with each other.

- Use EQ to Define Roles: Carve out specific frequency ranges for each layer to maintain clarity.

- Automation: Fade layers in and out dynamically to maintain listener interest.

Room Emulation

- Using impulse responses to emulate the acoustics of famous recording spaces, allowing recordings to sound like they were made in iconic studios.

Room emulation is a technique used in recording and mixing to recreate the acoustics of a particular physical space, giving recordings a sense of depth, realism, or ambiance. It can simulate the sound of famous studios, concert halls, small rooms, or even abstract spaces. Here’s a detailed breakdown:

1. Techniques for Room Emulation

Impulse Responses (IRs)

- What It Is: An impulse response is a recording of a space’s unique acoustic signature, captured by playing a test signal (like a sine sweep or clap) and recording the reflections and decay.

- How It’s Used:

- Load the IR into a convolution reverb plugin.

- Apply it to audio tracks to simulate the acoustics of the sampled space.

- Examples of spaces include Abbey Road Studio 1, cathedrals, and concert venues.
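What a convolution reverb does with an impulse response can be shown with a direct convolution. This is a deliberately tiny sketch: the three-sample "impulse response" is invented for illustration, and real plugins use much longer IRs and faster FFT-based methods.

```python
def convolve(dry, impulse_response):
    """Direct convolution: every dry sample triggers a scaled copy of the IR."""
    wet = [0.0] * (len(dry) + len(impulse_response) - 1)
    for i, sample in enumerate(dry):
        for j, tap in enumerate(impulse_response):
            wet[i + j] += sample * tap
    return wet

dry = [1.0, 0.5]        # two-sample "performance"
ir = [1.0, 0.0, 0.25]   # direct sound plus one quieter reflection two samples later
print(convolve(dry, ir))
```

The output contains the original samples followed by their quieter, delayed copies, which is the reflection pattern of the sampled space imposed on the dry signal.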

Algorithmic Reverb

- What It Is: Instead of using pre-recorded acoustics, algorithmic reverbs generate the effect mathematically.

- Benefits:

- Greater flexibility in tweaking parameters like room size, decay, and diffusion.

- Useful for creative or unnatural spaces.

- Plugins like Valhalla Room or Lexicon Reverb are popular choices.

Hybrid Approach

- Some plugins combine convolution and algorithmic processing, offering realistic sound with creative flexibility.

Applications in Recording

Studio-Like Environments

- When recording in untreated rooms or small home studios, room emulation can add the sound of a high-end recording space.

- Example: Using the IR of a large studio to add subtle room reflections to dry vocals or instruments.

Replacing or Enhancing Real Rooms

- If a room mic captures subpar acoustics, replace or blend it with a high-quality room emulation.

Mixing Applications

Adding Depth

- Apply different room emulations to individual instruments or groups to create a sense of space and separation.

- Example: Drums might use a larger room reverb, while vocals use a smaller, tighter space for intimacy.

Creating Cohesion

- Use a single room emulation across multiple tracks to unify their sound, making them feel recorded in the same space.

3D Mixing

- Combine room emulation with panning and EQ to position sounds within a virtual 3D space.

Advanced Room Emulation Techniques

Pre-Delay Control

- Adjusting pre-delay in the reverb settings can emulate the distance between the sound source and reflective surfaces.

- Longer pre-delay: Simulates a larger room or farther mic placement.

- Shorter pre-delay: Creates a sense of closeness.
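The link between pre-delay and distance follows from the speed of sound. The sketch below assumes roughly 343 m/s (air at about 20 °C) and treats pre-delay as the extra distance a first reflection travels compared with the direct sound; the function name is illustrative.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate value for air at about 20 degrees C

def predelay_ms(extra_path_m: float) -> float:
    """Delay of a reflection that travels extra_path_m farther than the direct sound."""
    return extra_path_m / SPEED_OF_SOUND_M_S * 1000.0

for extra in (1.0, 3.43, 10.0):
    print(f"{extra:>5.2f} m extra path: {predelay_ms(extra):.1f} ms")
```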

Layered Reverbs

- Combine multiple room emulations for complex spaces:

- Example: Use a small room IR for early reflections and a large hall IR for tail reverberation.

EQ’ing the Reverb

- Shape the room emulation with EQ:

- High-pass filter: Removes low-end muddiness.

- Low-pass filter: Reduces high-frequency harshness, especially for distant rooms.

Dynamic Reverb

- Use sidechain compression on the reverb signal, ducking it when the dry signal is present. This creates space for the primary sound while retaining room ambiance.

Creative Uses of Room Emulation

Unrealistic Spaces

- Use emulations of massive, unrealistic spaces (e.g., a canyon or a warehouse) for dramatic effects.

- Plugins like Eventide Blackhole specialize in such effects.

Movement and Modulation

- Automate the parameters of the room emulation to simulate a moving sound source or changing space.

- Example: Increasing room size gradually to give the sense of a sound receding into the distance.

Unique IRs

- Capture impulse responses from unconventional objects or places:

- Example: Inside a car, a metal tube, or a forest for creative sound design.

Popular Tools for Room Emulation

- Convolution Reverb Plugins:

- Altiverb (Audio Ease)

- Waves IR1 Convolution Reverb

- Logic Pro Space Designer

- FL Studio Fruity Convolver

- Algorithmic Reverb Plugins:

- Valhalla Room

- FabFilter Pro-R

- Lexicon PCM Native Reverb

- UAD EMT 140 Plate Reverb

- Free Options:

- SIR1 (convolution reverb)

- TAL-Reverb

Notes

- Match the Genre: Choose room emulations that fit the music style (e.g., tight rooms for pop vocals, large halls for orchestral).

- Avoid Overuse: Too much reverb can muddy the mix; use sparingly to maintain clarity.

- Test in Mono: Ensure the reverb doesn’t cause phase issues when summed to mono.

Custom Microphone Placement

- Experimenting with unconventional mic placements to capture unique tones, such as placing microphones behind drums, in adjacent rooms, or extremely close to strings.

Microphone placement is one of the most critical aspects of recording in a studio. It can drastically impact the tone, clarity, and overall quality of the recording. Here’s an in-depth guide to microphone placement techniques for various instruments, vocals, and creative applications:

1. General Microphone Placement Principles

Distance

- Close Miking (0–12 inches):

- Captures direct sound with minimal room ambiance. Ideal for isolating instruments or vocals.

- Risks: Proximity effect (increased low-end for directional mics) and plosives.

- Medium Distance (1–3 feet):

- Balances direct sound and room reflections. Common for acoustic instruments.

- Far Distance (3+ feet):

- Captures the sound of the room and the instrument. Used in classical and live recordings.

Angle

- On-Axis:

- Pointing directly at the sound source for clarity and brightness.

- Off-Axis:

- Angled slightly away to reduce harshness or pick up more natural tones.

Height

- Experimenting with mic height can emphasize different tonal qualities:

- Higher placement captures more overtones and air.

- Lower placement emphasizes body and warmth.

2. Microphone Placement for Specific Instruments

Vocals

- Standard Placement:

- Place a cardioid condenser mic 6–12 inches away at mouth level. Use a pop filter to reduce plosives.

- Angle:

- Slightly off-axis to minimize sibilance and harshness.

- Creative Variations:

- Overhead for a distant, airy vocal sound.

- Below chin level for a darker, warmer tone.

Guitar (Acoustic)

- 12th Fret Technique:

- Place the mic 6–12 inches from the 12th fret, angled slightly toward the soundhole for balance.

- Soundhole:

- Pointing at the soundhole captures more bass but can sound boomy.

- Stereo Pair:

- Use an X/Y or spaced pair technique to capture stereo depth.

Guitar (Electric)

- Close to Amp Speaker:

- Place a dynamic mic (e.g., Shure SM57) 1–2 inches from the speaker cone.

- Experiment with positioning:

- Center of the Cone: Brighter tone.

- Edge of the Cone: Warmer tone.

- Room Mic:

- Place a condenser mic 3–6 feet back for room ambiance.

Drums

- Kick Drum:

- Place a mic (e.g., AKG D112) just inside the drum for attack.

- Further back for more low-end resonance.

- Snare:

- Top mic: 1–3 inches above the head, angled toward the center.

- Bottom mic: Captures snare rattle (invert its polarity when mixing).

- Overheads:

- Use a spaced pair or XY configuration above the kit for a balanced stereo image.

- Room Mics:

- Place condenser mics several feet from the kit for ambiance.

Bass

- Direct Input (DI): Often combined with mic recording.

- Cabinet Mic:

- Place a mic near the speaker cone for natural low-end capture.

- Use a ribbon mic for a smoother tone.

Piano

- Upright Piano:

- Mic near the soundboard or open lid.

- Use spaced pair or single cardioid mic for close capture.

- Grand Piano:

- Spaced pair above the strings for stereo imaging.

- Close-miking the hammers for percussive attack.

3. Creative Microphone Placement

Over-the-Shoulder

- Place a mic over the shoulder of the performer to capture what they hear. Works well for acoustic instruments and vocals.

Ambient Miking

- Use omnidirectional or figure-8 mics to capture room ambiance.

- Blend ambient and close mics for depth.

Boundary Mics

- Place boundary microphones on reflective surfaces (walls, floors) to capture natural reflections.

Under Miking

- Place mics underneath the sound source, such as under drum cymbals or piano strings, for unique tonal perspectives.

4. Stereo Microphone Techniques

X/Y (Coincident Pair):

- Two cardioid mics placed at a 90°–120° angle, capsules as close as possible. Excellent for accurate stereo imaging.

ORTF:

- Two cardioid mics 6.7″ apart at a 110° angle. Creates a wide stereo field with natural spacing.

Blumlein:

- Two figure-8 mics at a 90° angle. Captures direct sound and room reflections.

Spaced Pair (A/B):

- Two identical mics placed apart. Produces a wide stereo image but can cause phase issues.

5. Practical Tips

- Experiment: Small adjustments in position can drastically affect the tone. Take time to test placements.

- Listen: Use headphones to monitor the mic signal as you adjust its position.

- Phase Alignment: Always check for phase issues when using multiple microphones.

- Room Treatment: A well-treated room enhances the effectiveness of mic placement.

- Record Reference Tracks: Capture samples with different placements for comparison.
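The phase issues mentioned above come from the same sound reaching two microphones at different times. As a rough sketch, assuming a speed of sound of about 343 m/s and two mic signals mixed at equal level, the arrival delay and the lowest cancelled frequency can be estimated from the difference in distance. The function names are hypothetical.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate value for air at about 20 degrees C

def arrival_delay_samples(extra_distance_m: float, sample_rate_hz: int = 48_000) -> int:
    """Samples by which the farther mic lags the closer one."""
    return round(extra_distance_m / SPEED_OF_SOUND_M_S * sample_rate_hz)

def first_notch_hz(extra_distance_m: float) -> float:
    """Lowest frequency cancelled when the two mic signals are mixed equally."""
    return SPEED_OF_SOUND_M_S / (2 * extra_distance_m)

print(arrival_delay_samples(1.0))  # a room mic 1 m farther away than the close mic
print(first_notch_hz(1.0))         # first comb-filter notch, in Hz
```

Sliding the later track earlier by the computed number of samples in the DAW is one common way to line the two signals up.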

Re-amping (Reamping)

- Recording a clean DI (direct input) signal of an instrument (e.g., guitar plugged directly into the mixing console rather than into a guitar amplifier) and later playing it back through amps or effects pedals to achieve a specific tone.

Re-amping is a powerful technique used in both recording and mixing that allows you to take a clean, direct signal (often recorded through a DI box) and run it back through an amplifier or other gear to reshape its tone. This method provides flexibility and creative control over the tone and feel of a track after the performance is recorded. Here’s an in-depth look at the technique:

1. How Re-amping Works

Step-by-Step Process

- Record a Clean DI Track:

- Use a DI box or the direct input of your audio interface to capture a clean, unprocessed signal. This is your “source” for re-amping. A DI box, or direct injection box, is a device that converts an instrument’s unbalanced signal into a balanced signal that can be plugged directly into a mixing console or audio interface. This allows you to record or perform live without using a microphone.

- DI boxes are essential for live performances because of the long cable lengths involved, but they’re also used in recording studios. They’re particularly useful for instruments like electric guitars, basses, and synths, which have unbalanced, high-impedance signals that can pick up noise and degrade over long distances. A DI box converts the signal to a low-impedance, balanced signal that’s compatible with outboard equipment and reduces noise.

- DI boxes come in different types, including passive and active:

- Passive DI boxes

- Use a transformer to balance the signal and create a mic-level output. They don’t require power, so they don’t need a battery or phantom power.

- Active DI boxes

- Use electronic gain stages similar to the input section of a modern instrument amp. They require power from a battery or phantom power from the mixing console.

- More advanced DI boxes may also include features like ground-lift switches, pads, equalization switches, and isolated line outputs.

- Playback Through a Re-amp Box:

- A re-amp box (like the Radial X-Amp) converts the line-level signal from your audio interface back to an instrument-level signal, suitable for amplifiers or effects pedals.

- Send to an Amplifier or Effect:

- Route the signal to a guitar or bass amp, effects chain, or even other analog gear.

- Mic the amp as you would during a live performance to capture the output.

- Record the Re-amped Signal:

- Capture the re-amped sound as a new track in your DAW.

2. Applications of Re-amping

Guitar and Bass

- Tone Shaping:

- Experiment with different amp settings, speaker cabinets, and mic placements to find the perfect tone.

- Layering:

- Create multiple tonal variations of the same performance by re-amping through different amps or pedals and layering them.

Vocals

- Character Enhancement:

- Send vocals through guitar amps, distortion pedals, or other processors for a lo-fi or gritty texture.

- Character Enhancement:

-

Drums

- Snare or Kick Re-amping:

- Re-amp snare or kick tracks through amps or saturation effects to add punch and character.

- Room Feel:

- Send drum tracks to an amp in a live room, then record the ambient reflections.

Synths and Keyboards

- Analog Warmth:

- Re-amp digital synths through tube amps or analog effects to add warmth and character.

- Creative Effects:

- Use guitar pedals or modular effects for unique textures.

3. Creative Re-amping Techniques

Re-amp Through Pedals

- Use guitar or bass pedals for distortion, delay, modulation, or other effects.

- Chain multiple pedals to create layered textures.

Ambient Re-amping

- Place the amp in a different room (e.g., a stairwell or bathroom) and mic the environment for unique spatial effects.

Reverse Re-amping

- Send reversed audio (e.g., a reversed guitar riff) through an amp or pedal for surreal tones.

Parallel Re-amping

- Blend the clean DI signal with multiple re-amped versions (e.g., one distorted and one clean with reverb) for a rich, layered sound.

4. Advantages of Re-amping

- Flexibility:

- You can tweak the tone endlessly after the performance, eliminating the need for “perfect” amp settings during the recording session.

- Cost and Efficiency:

- Record the DI at home or in a small studio, then re-amp later with high-end gear or in a professional studio.

- Focus on Performance:

- Allows the performer to focus on playing without worrying about tone settings during tracking.

- Consistency:

- The performance stays the same while you explore different tonal options.

5. Tools for Re-amping

Re-amp Boxes

- Convert the line-level signal from your DAW to instrument level:

- Radial X-Amp or ProRMP

- Little Labs Redeye

- Palmer Daccapo

DI Boxes

- Record the clean signal during tracking:

- Radial J48

- Countryman Type 85

- Behringer Ultra-DI

Interfaces with Built-In Re-amping

- Some audio interfaces (e.g., Universal Audio Apollo) include re-amping-friendly outputs.

6. Best Practices

- Record a Clean DI Track:

- Always record a clean DI track when working with guitar, bass, or any instrument that might benefit from re-amping. It gives you options later.

- Watch Your Gain Staging:

- Ensure proper gain levels when sending the signal to the re-amp box and amp to avoid distortion or noise.

- Phase Alignment:

- Check for phase issues when combining the DI and re-amped tracks. Use phase alignment tools or manual adjustment if necessary.

- Experiment with Mic Placement:

- Treat the re-amping session like a live recording session. Try different mic positions and combinations for varied tones.

- Record Multiple Takes:

- Use different amp settings, mics, or effects during each pass to create a palette of sounds.

7. Challenges and Solutions

- Noise or Hum:

- Use balanced cables and ensure proper grounding to minimize interference.

- Latency:

- Compensate for any latency introduced by the re-amping signal chain in your DAW.

- Limited Gear:

- If you don’t have a re-amp box, you can use a passive DI box in reverse, though its level and impedance will not suit the amp as well as a dedicated re-amp box, so results may vary.
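The latency point above can also be checked numerically. The following is a minimal sketch, assuming the DI track and the re-amped track are available as plain lists of samples. The names `estimate_delay`, `align`, and `max_lag` are hypothetical, and in a real session the DAW's own delay compensation or an alignment plugin would normally do this job.

```python
def estimate_delay(reference, delayed, max_lag):
    """Return the lag (in samples) at which `delayed` best matches `reference`.

    Brute-force cross-correlation: for each candidate lag, sum the products
    of overlapping samples and keep the lag with the largest sum.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        score = sum(
            reference[i] * delayed[i + lag]
            for i in range(len(reference))
            if i + lag < len(delayed)
        )
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def align(delayed, lag):
    """Shift the re-amped track earlier by `lag` samples."""
    return delayed[lag:]


# Toy example: a short click pattern that comes back 5 samples late.
di_track = [0.0, 1.0, 0.5, -0.5, 0.0, 0.0, 0.8, -0.3, 0.0, 0.0]
reamped = [0.0] * 5 + di_track

lag = estimate_delay(di_track, reamped, max_lag=8)
aligned = align(reamped, lag)
```

A real re-amped signal is coloured by the amp and the microphone, so the correlation peak is less sharp than in this toy case, and the result is best confirmed by ear.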

Mixing Techniques

Parallel Processing

- Using parallel compression, distortion, or EQ to blend processed and unprocessed signals for a punchier or more dynamic mix.

Parallel Processing in Audio Production

Parallel processing is a versatile mixing technique where a copy of an audio signal is processed independently and then blended with the original signal. This allows for a more dynamic, detailed, and nuanced sound without overly affecting the integrity of the source material. Here’s an in-depth look at parallel processing:

1. The Basics of Parallel Processing

How It Works

- The original audio signal remains unprocessed or minimally processed.

- A duplicate signal (or “parallel chain”) is processed separately, often with heavy effects or extreme settings.

- Both signals are blended to achieve the desired effect.
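The three steps above can be sketched in a few lines. This is only an illustration, assuming the audio is a list of samples between -1.0 and 1.0. The `saturate` effect (tanh soft clipping) and the `wet_level` parameter are assumptions chosen for the example, not something a particular DAW provides under those names.

```python
import math


def saturate(sample, drive=4.0):
    """Heavy processing for the parallel chain: tanh soft clipping."""
    return math.tanh(drive * sample)


def parallel_blend(dry, wet_level, process=saturate):
    """Mix the untouched signal with a processed copy.

    The dry path always stays at full level; `wet_level` (0.0 to 1.0)
    sets how much of the processed copy is added on top.
    """
    return [x + wet_level * process(x) for x in dry]


dry = [0.0, 0.1, 0.5, -0.5, 0.9]
untouched = parallel_blend(dry, wet_level=0.0)  # identical to the dry signal
blended = parallel_blend(dry, wet_level=0.3)    # dry plus 30% of the saturated copy
```

Because the dry path is never altered, pulling `wet_level` back to zero always returns the original sound, which is what makes the technique forgiving.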

Why Use Parallel Processing?

- Retains the natural character of the original signal while adding enhancements.

- Avoids over-processing, which can sound unnatural.

- Allows precise control over the intensity of effects.

2. Common Uses of Parallel Processing

Compression (Parallel Compression)

- What It Does: Adds thickness, punch, and sustain without crushing the dynamics of the original track.

- How to Use It:

- Send a copy of the signal to an auxiliary channel.

- Apply heavy compression (e.g., a low threshold, a high ratio, and fast attack and release times).

- Blend the compressed signal with the uncompressed signal to taste.

- Applications:

- Drums: Add punch to kick and snare while retaining transient detail.

- Vocals: Increase presence and sustain while keeping natural dynamics.

- Bass: Enhance low-end sustain without losing clarity.
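To make the send, compress, and blend steps concrete, here is a rough sketch of parallel compression on a list of samples. The compressor is a deliberately simple feed-forward design written for this example. The function names, the default threshold, ratio, and time constants, and the test signal are all assumptions, and no commercial compressor is being modelled.

```python
import math


def compress(signal, sample_rate, threshold_db=-30.0, ratio=8.0,
             attack_ms=1.0, release_ms=50.0):
    """Very simple feed-forward peak compressor (a sketch, not a plugin model)."""
    attack = math.exp(-1.0 / (sample_rate * attack_ms / 1000.0))
    release = math.exp(-1.0 / (sample_rate * release_ms / 1000.0))
    envelope = 0.0
    out = []
    for x in signal:
        level = abs(x)
        # Envelope follower: rise quickly (attack), fall more slowly (release).
        coeff = attack if level > envelope else release
        envelope = coeff * envelope + (1.0 - coeff) * level
        level_db = 20.0 * math.log10(max(envelope, 1e-9))
        # Above the threshold, only 1/ratio of the overshoot is let through.
        over_db = max(level_db - threshold_db, 0.0)
        gain_db = -over_db * (1.0 - 1.0 / ratio)
        out.append(x * 10.0 ** (gain_db / 20.0))
    return out


def parallel_compress(signal, sample_rate, blend=0.5):
    """Add a heavily compressed copy underneath the uncompressed signal."""
    squashed = compress(signal, sample_rate)
    return [dry + blend * wet for dry, wet in zip(signal, squashed)]


sample_rate = 48000
# Toy signal: a loud 100 Hz burst followed by a quiet tail.
loud = [0.9 * math.sin(2 * math.pi * 100 * n / sample_rate) for n in range(4800)]
quiet = [0.02 * math.sin(2 * math.pi * 100 * n / sample_rate) for n in range(4800)]
mixed = parallel_compress(loud + quiet, sample_rate)
```

The `blend` value plays the role of the auxiliary channel's fader: the uncompressed peaks pass through unchanged while the squashed copy fills in underneath them.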

EQ

- What It Does: Boosts or cuts specific frequencies on the parallel signal for tonal shaping without altering the source.

- How to Use It:

- Use EQ to emphasize a specific frequency range (e.g., boosting high-end shimmer on vocals).

- Blend the EQ’d signal with the original for subtle enhancements.

Reverb

- What It Does: Adds space and ambiance without overwhelming the source.

- How to Use It:

- Send the signal to an auxiliary channel.

- Apply reverb to the parallel track and adjust the wet/dry blend.

- Blend with the dry signal for a balanced mix of presence and space.

- Applications:

- Vocals: Create lush, spacious sounds while keeping intelligibility.

- Drums: Add room sound without losing punch.

Distortion/Saturation

- What It Does: Adds harmonic richness, grit, or warmth without over-saturating the original track.

- How to Use It:

- Apply distortion or saturation to the parallel track.

- Blend with the original signal to add texture and weight.

- Applications:

- Vocals: Add grit for rock or lo-fi styles.

- Bass: Add midrange harmonics for clarity in dense mixes.

- Drums: Add warmth and character to shells or cymbals.

Delay

- What It Does: Creates echo effects without muddying the original signal.

- How to Use It:

- Apply delay to a parallel track and adjust feedback and mix levels.

- Blend the delayed signal subtly for depth or prominently for creative effects.

- Applications:

- Vocals: Add rhythmic echoes or subtle ambiance.

- Guitars: Create wide, spacious textures.

3. Advanced Parallel Processing Techniques

Multiband Parallel Processing

- What It Does: Splits the audio into frequency bands and processes each band independently.

- How to Use It:

- Use multiband compressors or EQ to isolate low, mid, and high frequencies.

- Apply effects (e.g., compression, saturation) to individual bands.

- Blend the processed bands with the original signal.

- Applications:

- Bass: Compress the low end for control while leaving the midrange untouched.

- Vocals: Add air to the highs without affecting the mids or lows.

Transient Shaping

- What It Does: Enhances or softens attack and sustain characteristics.

- How to Use It:

- Apply transient shaping to the parallel signal.

- Blend it with the original for a punchy yet natural result.

- Applications:

- Drums: Increase attack for more snap or sustain for roominess.

- Percussion: Enhance clarity and definition.

Dynamic Parallel Effects

- What It Does: Applies effects that react dynamically to the original signal.

- How to Use It:

- Use sidechain compression, gating, or dynamic EQ on the parallel track.

- Trigger effects based on the dynamics of the original signal.

- Applications:

- Reverb: Use sidechain compression to reduce reverb during loud sections and increase it during quiet sections.

- Delay: Automate delay intensity based on vocal dynamics.

Creative Blending

- Experiment with unconventional combinations:

- Blend distorted and clean vocals for a modern pop/rock edge.

- Add heavily modulated synth layers beneath clean lines for richness.

- Use a reverse reverb or delay on the parallel chain for ethereal effects.

4. Workflow Tips

- Auxiliary Sends vs. Duplicate Tracks:

- Use auxiliary sends for easy control and adjustments.

- Duplicate tracks if you need to edit or automate parallel processing independently.

- Blend Carefully:

- Start with the processed signal at zero and gradually bring it in until it complements the original.

- Phase Alignment:

- Check for phase issues between the original and parallel signals, especially when duplicating tracks.

- Automation:

- Automate the parallel signal’s level or effect parameters to create dynamic changes in the mix.

- Layering with Intent:

- Use parallel processing to enhance specific mix elements without overcomplicating the soundscape.

5. Example Parallel Processing Chains

Drum Parallel Compression Chain:

- Dry Track: Unprocessed drums for natural dynamics.

- Parallel Track:

- Heavy compression (fast attack/release).

- Subtle saturation for warmth.

- High-pass filter to avoid low-end buildup.

Vocal Chain with Parallel Reverb and Distortion:

- Dry Track: Clean vocal for clarity.

- Parallel Track 1:

- Hall reverb with a high-pass filter to keep it airy.

- Parallel Track 2:

- Distortion or saturation for grit.

- Blend: Adjust levels of both parallel tracks to add depth and character.

Subtractive EQ

- Carving out frequencies from instruments to make room for others, ensuring a cleaner mix. For instance, cutting low frequencies in guitars to allow the bass guitar to shine.

Subtractive EQ in Audio Production

Subtractive EQ is a technique where you remove (attenuate) specific frequencies from an audio signal to achieve clarity, balance, and a cleaner mix. Instead of boosting desired frequencies, subtractive EQ removes problematic or unnecessary ones, leaving space for other elements in the mix. Here’s a detailed exploration:

1. Why Use Subtractive EQ?

- Clarity: Removes muddiness, harshness, or unwanted resonances.

- Headroom: Frees up space in the mix, reducing masking effects and improving dynamics.

- Transparency: By cutting rather than boosting, subtractive EQ minimizes the risk of unnatural sounds and avoids emphasizing noise or distortion.

- Frequency Balance: Makes room for other elements to sit better in the mix, creating a cohesive soundscape.

2. Common Frequency Ranges for Subtractive EQ

Low-End (20–200 Hz)

- Muddiness: Often found around 100–250 Hz in instruments like guitars, pianos, and vocals.

- Low Rumble: Remove subsonic noise (20–40 Hz) using a high-pass filter.

- Kick vs. Bass: Attenuate frequencies in one to make room for the other (e.g., cut around 80 Hz in the bass if the kick occupies that range).

Low-Mid Range (200–800 Hz)

- Boxiness: Particularly in drums, vocals, and guitars, boxy or hollow tones reside around 300–500 Hz.

- Woofiness: Overpowering low-mids in bass-heavy tracks can be controlled with cuts in this range.

High-Mid Range (2–5 kHz)

- Harshness: Harsh, nasal, or biting qualities in vocals and instruments can often be found between 2–4 kHz.

- Clutter: Overlapping frequencies between guitars, keyboards, and vocals can muddy this range. Surgical cuts can improve separation.

High-End (5–20 kHz)

- Sibilance: Found in vocals around 5–8 kHz (use a de-esser or EQ cut).

- Hiss/Noise: High-frequency noise or excessive brightness may need attenuation above 10 kHz.

3. Techniques for Subtractive EQ

Identify Problematic Frequencies

- Sweep-and-Cut Method:

- Use a narrow bandwidth (high Q) and boost a band by 6–12 dB.

- Sweep the frequency range to identify resonances or harsh tones.

- Cut the problematic frequency by reducing the gain.

- Visual Analysis:

- Use spectrum analyzers (e.g., FabFilter Pro-Q, iZotope Neutron) to identify peaks or unwanted resonances visually.

- A/B Listening:

- Toggle the EQ on and off while listening to confirm the improvement.
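The sweep-and-cut method can be illustrated with a single parametric band. The sketch below uses the peaking-filter formulas from Robert Bristow-Johnson's Audio EQ Cookbook and evaluates the filter's gain at chosen frequencies. The function names, the 3 kHz centre frequency, and the Q of 8 are assumptions picked for the example.

```python
import cmath
import math


def peaking_eq(f0, gain_db, q, sample_rate):
    """Biquad peaking-filter coefficients (Audio EQ Cookbook formulas)."""
    amp = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * f0 / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    b = [1.0 + alpha * amp, -2.0 * math.cos(w0), 1.0 - alpha * amp]
    a = [1.0 + alpha / amp, -2.0 * math.cos(w0), 1.0 - alpha / amp]
    return b, a


def gain_db_at(freq, coeffs, sample_rate):
    """Magnitude response of the biquad at `freq`, in dB."""
    b, a = coeffs
    z1 = cmath.exp(-2j * math.pi * freq / sample_rate)  # z^-1 on the unit circle
    h = (b[0] + b[1] * z1 + b[2] * z1 * z1) / (a[0] + a[1] * z1 + a[2] * z1 * z1)
    return 20.0 * math.log10(abs(h))


sample_rate = 48000
# Step 1: a narrow boost used for sweeping, parked here on a suspected 3 kHz resonance.
sweep_boost = peaking_eq(3000, gain_db=9.0, q=8.0, sample_rate=sample_rate)
# Step 2: once the problem is located, turn the boost into a cut at the same frequency.
cut = peaking_eq(3000, gain_db=-6.0, q=8.0, sample_rate=sample_rate)

at_center = gain_db_at(3000, cut, sample_rate)  # gain at the problem frequency
far_away = gain_db_at(200, cut, sample_rate)    # gain well outside the band
```

With a high Q the cut is concentrated around the centre frequency, so material far from it, such as the 200 Hz point checked here, is left almost untouched.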

Use High-Pass and Low-Pass Filters

- High-Pass Filter:

- Removes low-end rumble or unnecessary bass frequencies. Set the cutoff just below the lowest fundamental frequency of the instrument.

- Common for vocals, guitars, and most drums; the kick drum and bass are the usual exceptions.

- Low-Pass Filter:

- Removes high-frequency noise or harshness. Useful for taming cymbals, electric guitars, or synths.
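As a rough illustration of what a high-pass filter does to rumble, the sketch below runs a second-order high-pass (again using Audio EQ Cookbook coefficients) over two test tones. The 90 Hz cutoff, the 30 Hz "rumble", the 1 kHz tone, and all function names are assumptions made for the example.

```python
import math


def highpass_coeffs(cutoff_hz, sample_rate, q=0.7071):
    """Second-order high-pass biquad (Audio EQ Cookbook), normalized so a0 = 1."""
    w0 = 2.0 * math.pi * cutoff_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha
    b = [(1.0 + cos_w0) / 2.0 / a0, -(1.0 + cos_w0) / a0, (1.0 + cos_w0) / 2.0 / a0]
    a = [1.0, -2.0 * cos_w0 / a0, (1.0 - alpha) / a0]
    return b, a


def biquad(signal, b, a):
    """Run the filter sample by sample (direct form I)."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in signal:
        y = b[0] * x + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def rms(signal):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in signal) / len(signal))


sample_rate = 48000
n = sample_rate  # one second of audio
rumble = [math.sin(2 * math.pi * 30 * i / sample_rate) for i in range(n)]
tone = [math.sin(2 * math.pi * 1000 * i / sample_rate) for i in range(n)]

b, a = highpass_coeffs(90, sample_rate)  # a cutoff in the region often used on vocals
rumble_out = biquad(rumble, b, a)        # strongly attenuated
tone_out = biquad(tone, b, a)            # passes almost unchanged
```

A low-pass filter works the same way with the roles reversed: it keeps the low tone and attenuates content above the cutoff.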

Target Resonances

- Resonances occur when certain frequencies are overly emphasized, often due to room acoustics, mic placement, or instrument characteristics.

- Use narrow cuts (high Q) to attenuate these without affecting surrounding frequencies.

Subtractive EQ Before Compression

- Applying EQ cuts before compression avoids the compressor overreacting to problematic frequencies, resulting in more balanced dynamics.

Tame Overlapping Frequencies

- Use subtractive EQ to create space between competing instruments.

- Example: If a vocal clashes with a guitar, reduce frequencies in the guitar track around 2–4 kHz, where vocal intelligibility resides.

4. Practical Applications of Subtractive EQ

Vocals

- High-Pass Filter: Remove low-end rumble or mic noise below 80–100 Hz.

- Muddiness: Cut 200–400 Hz to reduce boxiness.

- Sibilance: Attenuate harsh “S” sounds at 5–8 kHz with a narrow band or de-esser.

Drums

- Kick Drum:

- Cut 300–500 Hz to reduce boxiness.

- Use a high-pass filter below 20–40 Hz to remove unnecessary subsonics.

- Snare: Cut around 400–600 Hz to clean up muddiness; cut 2–3 kHz if overly harsh.

- Overheads: High-pass filter around 150–200 Hz to remove low-end bleed.

Bass

- Low-End Control: Use a high-pass filter to remove frequencies below 30–40 Hz.

- Mud Reduction: Cut 200–400 Hz to avoid conflict with other instruments.

Guitar

- Electric Guitar:

- Cut 200–300 Hz to reduce mud.

- Use a low-pass filter around 8–10 kHz to tame hiss or harshness.

- Acoustic Guitar:

- High-pass below 80 Hz to remove low-end rumble.

- Cut 500–800 Hz for boxiness.

Synths (Synthesizers)

- Remove unnecessary low-end with a high-pass filter.

- Cut 2–4 kHz if the synth clashes with vocals or guitars.

Mix Bus

- Subtractive EQ can be used on the entire mix:

- High-pass filter below 20–30 Hz to remove inaudible sub-bass.

- Subtle cuts around 200–400 Hz to clean up muddiness.

- Reduce harshness at around 2–4 kHz for a smoother mix.

5. Tools for Subtractive EQ

- Parametric EQ:

- Precise frequency targeting. Examples:

- FabFilter Pro-Q 3

- Waves Q10

- Logic Pro Channel EQ

- Dynamic EQ:

- Attenuates frequencies dynamically, only when necessary. Examples:

- iZotope Neutron

- TDR Nova

- Linear-Phase EQ:

- Avoids phase shift, suitable for mastering or when transparency is critical. Examples:

- FabFilter Pro-Q in linear-phase mode

- MAAT Linear Phase EQ

- Waves Linear Phase EQ

6. Tips for Effective Subtractive EQ

- Cut Narrowly, Boost Broadly:

- When cutting, use a narrow bandwidth (high Q) to target specific problems.

- For broad tonal adjustments, boosting with a wide bandwidth (low Q) sounds more natural.

- Cut Before You Boost:

- Start with subtractive EQ to remove issues before boosting.

- Trust Your Ears:

- While visual tools are helpful, rely on your ears to make final decisions.

- Don’t Overdo It:

- Too many cuts can thin out the sound. Use EQ sparingly and only where necessary.

- Context Matters:

- Always EQ in the context of the full mix, not in solo, to ensure each element complements the others.

Frequency Slotting

- Strategically assigning specific frequency ranges to each instrument to prevent masking and improve clarity.

Frequency Slotting in Audio Production

Frequency slotting is a mixing technique that ensures each element in a mix has its own “space” in the frequency spectrum. By carefully carving out or enhancing specific frequency ranges for different instruments, you avoid masking (when one sound obscures another) and achieve a cleaner, more balanced mix.

1. Why Use Frequency Slotting?

- Clarity: Prevents instruments from competing for the same frequency space.

- Separation: Makes each element distinct while maintaining cohesion.

- Balance: Distributes energy across the frequency spectrum, avoiding muddiness or harshness.

- Mix Space Efficiency: Allows each element to contribute without overcrowding the mix.

2. The Frequency Spectrum Overview

Each element in your mix occupies specific frequency ranges:

- Sub-Bass (20–60 Hz): Felt rather than heard (kick, bass synths).

- Bass (60–250 Hz): Low-end power and warmth (bass guitar, kick).

- Low-Mids (250–500 Hz): Body and warmth (guitars, piano, vocals).

- Mids (500–2000 Hz): Clarity and presence (vocals, guitars, snares).

- High-Mids (2–6 kHz): Definition and intelligibility (vocals, cymbals, strings).

- Highs (6–20 kHz): Air and sparkle (hi-hats, synths, vocals).

3. How Frequency Slotting Works

- Identify the Key Frequencies:

- Determine the dominant frequency ranges of each instrument.

- For example, a bass guitar may dominate in the 60–200 Hz range, while a kick drum occupies 40–80 Hz.

- Cut or Boost Strategically:

- Reduce frequencies in one instrument to make room for another.

- Boost the desired frequencies of one instrument to emphasize its character.

- Use Complementary EQ:

- Make reciprocal EQ adjustments to related instruments.

- For example, attenuate 80 Hz in the bass to make room for the kick drum, and boost 80 Hz in the kick to accentuate its punch.

- Avoid Overlapping Frequencies:

- Instruments that share similar frequency ranges (e.g., guitars and vocals) should be adjusted to minimize masking.
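The first step, identifying where instruments compete, can be sketched as a simple range comparison. This is only a planning aid under stated assumptions: the kick and bass ranges are the ones quoted above, the vocal and guitar ranges are illustrative guesses, and the function names are hypothetical.

```python
def overlap(range_a, range_b):
    """Return the shared (low, high) band in Hz, or None if the ranges do not meet."""
    low = max(range_a[0], range_b[0])
    high = min(range_a[1], range_b[1])
    return (low, high) if low < high else None


def find_clashes(dominant_ranges):
    """List every pair of instruments whose dominant ranges overlap."""
    names = sorted(dominant_ranges)
    clashes = []
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            shared = overlap(dominant_ranges[first], dominant_ranges[second])
            if shared:
                clashes.append((first, second, shared))
    return clashes


# Dominant ranges in Hz. Kick and bass use the figures quoted above;
# the vocal and guitar ranges are illustrative assumptions.
dominant_ranges = {
    "bass guitar": (60, 200),
    "kick drum": (40, 80),
    "vocal": (2000, 4000),
    "guitar": (1000, 3000),
}

clashes = find_clashes(dominant_ranges)
```

Each reported band is a candidate for a complementary cut and boost; which instrument gives way is still a musical decision made by ear.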

4. Practical Applications of Frequency Slotting

Kick Drum and Bass

- Problem: Low-end clutter.

- Solution:

- Decide which element dominates specific frequencies.

- Example:

- Boost the kick at 60 Hz and cut the bass there.

- Boost the bass at 120 Hz and cut the kick there.

Vocals and Guitars

- Problem: Vocals getting masked by midrange-heavy guitars.

- Solution:

- Boost 2–4 kHz in vocals for intelligibility.

- Attenuate 2–4 kHz in the guitars to make room for the vocals.

Drums and Cymbals

- Problem: Cymbals overpowering snare or toms.

- Solution:

- High-pass filter cymbals around 300–400 Hz to reduce low-end bleed.

- Boost snare in the 2–5 kHz range for snap.

Piano and Strings

- Problem: Overlapping harmonic content in the mids.

- Solution:

- Use EQ to carve out space for each instrument’s fundamental tones.

- Example:

- Boost 1–2 kHz for piano clarity.

- Attenuate 1–2 kHz in strings to avoid masking.

5. Tools for Frequency Slotting

EQ

- Parametric EQ: Offers precision and flexibility.

- Examples: FabFilter Pro-Q, Waves Q10, Logic Pro EQ.

- Dynamic EQ: Attenuates only when problematic frequencies become prominent.

- Examples: iZotope Neutron, TDR Nova.

Frequency Analyzers

- Visualize overlapping frequencies.

- Examples: SPAN by Voxengo, iZotope Insight, FabFilter Pro-Q Spectrum Analyzer.

Multiband Processing

- Apply EQ, compression, or effects to specific frequency bands.

- Examples: Waves C6, iZotope Ozone.

Panning

- Move elements to different parts of the stereo field to complement frequency adjustments.

6. Workflow Tips

1. Start with High-Pass Filters

- Remove unnecessary low-end from non-bass elements like guitars, vocals, and synths. This clears space for kick and bass instruments.

2. Prioritize the Mix Hierarchy

- Determine which elements are the focal points of the mix (e.g., vocals in a pop song, or kick and bass in electronic music).

- Slot other instruments around these priority elements.

3. Use Subtractive EQ First

- Cut problematic frequencies before boosting desired ones. This approach is more transparent and avoids overcrowding the mix.

4. Use Complementary EQ

- When boosting in one track, consider cutting the same range in another.

- Example:

- Boost 3 kHz in vocals for presence.

- Cut 3 kHz in guitars to make room.

5. Automate Frequency Adjustments

- In dynamic mixes, use automation to adjust EQ settings for specific sections (e.g., lowering guitar midrange during vocal passages).

6. Check in Context

- Always make EQ adjustments while listening to all elements in the mix. Soloing tracks can mislead your decisions.

7. Advanced Frequency Slotting Techniques

Multiband Slotting

- Split instruments into frequency bands and process each band independently.

- Example: Use multiband compression to tame the low mids in a muddy bass guitar while leaving the highs intact.

Sidechain EQ

- Use a sidechain-triggered multiband compressor or dynamic EQ to reduce masking only in the band where it occurs.

- Example: Sidechain a bass track to duck its 60 Hz range when the kick drum hits.

Mid/Side Processing

- Use EQ to process the mid (center) and side (stereo) components of a mix separately.

- Example: Boost midrange vocals in the center while attenuating overlapping frequencies in side-panned guitars.

Parallel EQ

- Apply EQ on a duplicate signal and blend it with the original to emphasize or de-emphasize specific frequencies.

8. Example Frequency Ranges for Common Instruments

| Instrument | Key Frequency Ranges |

| Kick Drum | Sub-bass (40–60 Hz), punch (100–150 Hz), click (2–4 kHz). |

| Snare Drum | Body (150–250 Hz), crack (2–5 kHz), air (8–12 kHz). |

| Bass Guitar | Fundamentals (60–100 Hz), warmth (120–250 Hz), clarity (700 Hz). |

| Electric Guitar | Body (150–300 Hz), crunch (1–3 kHz), air (8–10 kHz). |

| Vocals | Warmth (150–300 Hz), presence (2–5 kHz), sibilance (5–8 kHz). |

| Piano | Body (200–500 Hz), clarity (2–4 kHz), air (8–12 kHz). |

Automated Volume Rides

- Automating volume changes throughout a track to emphasize certain elements and ensure dynamic balance.

Automated volume rides involve dynamically adjusting the volume of individual tracks or sections in a mix using automation tools. This technique ensures a more expressive, balanced, and professional-sounding mix by enhancing dynamics and clarity. It’s particularly useful for vocals, solos, or other elements that need to remain prominent or consistent throughout the mix.

1. Why Use Automated Volume Rides?

- Dynamic Balance: Smooth out uneven performances or varying loudness levels.

- Expressiveness: Highlight emotional or important moments in the mix.

- Clarity: Keep key elements (e.g., vocals) consistently audible without over-compressing.

- Transparency: Achieve a polished mix without relying heavily on compression, which can color the sound.

2. Tools for Volume Automation

- DAW Automation Lanes:

- Most DAWs (Logic Pro, Pro Tools, Ableton Live, etc.) provide automation lanes for precise volume adjustments.

- Fader Automation:

- Automate fader movements manually or programmatically.

- Plugins:

- Specialized tools like Waves Vocal Rider or iZotope Neutron automate volume rides in real-time.

- Control Surfaces:

- Use hardware controllers (e.g., Avid S1, Behringer X-Touch) for tactile, real-time adjustments.

3. Common Applications

Vocals

- Purpose: Ensure consistent vocal presence without over-compression.

- Example:

- Raise the volume during quiet phrases to keep them intelligible.

- Lower the volume of loud peaks to prevent them from overwhelming the mix.

Lead Instruments

- Purpose: Keep solos (e.g., guitar, piano) prominent during their sections.

- Example:

- Boost the lead guitar during a solo, then lower it as it transitions back to a rhythm role.

Dialog and Voiceovers

- Purpose: Ensure speech is clear and intelligible.

- Example:

- Gently raise quieter words or phrases without compressing the entire signal.

Drums

- Purpose: Add energy and dynamics by emphasizing key drum hits.

- Example:

- Highlight specific snare hits or cymbal crashes during transitions.

Bass

- Purpose: Maintain a consistent low-end presence.

- Example:

- Boost bass during softer parts of the mix, ensuring it’s felt but not overpowering.

Full Mix (Master Automation)

- Purpose: Enhance the dynamics of the entire song.

- Example:

- Raise the overall mix volume slightly during choruses for added impact.

4. Workflow for Automating Volume Rides

Step 1: Identify Problem Areas

- Listen critically to the mix.

- Identify moments where:

- Vocals dip below the instrumentation.

- Key elements (e.g., solos) lose prominence.

- Transitions lack energy.

Step 2: Enable Automation

- Activate volume automation for the desired track(s).

- In most DAWs, this involves switching the track to “Write,” “Touch,” or “Latch” automation mode.

Step 3: Perform Initial Automation

- Use faders or draw automation curves to adjust volume dynamically.

- For vocals, manually ride the fader during playback to balance dynamics in real-time.

Step 4: Fine-Tune Automation

- Edit automation curves for precision:

- Use smooth ramps for gradual changes.

- Create sharp nodes for sudden transitions (e.g., mutes or dramatic level changes).

Step 5: Adjust in Context

- Always automate while listening to the entire mix, not in solo mode.

- Make subtle adjustments to avoid unnatural fluctuations.

Step 6: Test with Automation Modes

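- Touch Mode: Automation writes only while the fader is touched; on release, the fader returns to any previously written automation.

- Latch Mode: Automation starts writing when the fader is touched and keeps writing at the last value after release, until playback stops.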

- Write Mode: Overwrites all existing automation data during playback.

5. Techniques for Effective Volume Rides

1. Ride the Wave

- Increase volume slightly for emotional phrases or crescendos.

- Lower volume during instrumental breaks or dense sections.

2. Focus on Transitions

- Smooth out volume jumps at section changes (e.g., verse to chorus).

3. Enhance Nuance

- Emphasize subtle details like breaths, inflections, or quiet background effects.

4. Use Automation with Compression

- Combine subtle volume rides with light compression to retain dynamics while controlling peaks.

5. Automate Post-Effects

- Apply volume automation after processing such as compression, EQ, or saturation, so the rides do not change how hard those processors are driven.

6. Advanced Tips

Parallel Volume Rides

- Automate the volume of a duplicate track processed differently (e.g., a compressed parallel vocal) to blend in only when needed.

Automating Reverbs and Delays

- Ride send levels to emphasize reverb or delay tails during quieter sections or fades.

Multiband Automation

- Use multiband compressors with automation to dynamically control specific frequency ranges (e.g., taming vocal harshness at 2–4 kHz during loud phrases).

Automate Sidechain Triggers

- Adjust sidechain compression thresholds dynamically for more natural ducking.

Volume Rides for Genre Dynamics

- In EDM or Pop: Gradually increase volume before a drop or chorus for impact.

- In Classical or Jazz: Automate dynamics to replicate live performance nuances.

7. Automation Techniques by Genre

| Genre | Volume Ride Goals |

| Pop | Keep vocals upfront and automate choruses for impact. |

| Rock | Emphasize solos and transitions; control cymbals and snares. |

| Hip-Hop | Ensure vocal intelligibility; automate bass for groove. |

| EDM | Build tension with gradual volume increases before drops. |

| Classical | Mimic live dynamics; automate orchestra swells and solo passages. |

| Film Scoring | Highlight emotional cues; control dialog, effects, and score balance. |

8. Example Workflow for Vocals

- Identify Problem Areas:

- Look for words or phrases buried in the mix.

- Create Automation Nodes:

- Place keyframes around each word or phrase needing adjustment.

- Adjust Volume:

- Raise quieter words by 1–3 dB.

- Gently lower louder phrases by 1–2 dB.

- Smooth Transitions:

- Use gradual ramps to avoid abrupt changes.

- A/B Test:

- Compare before and after automation to ensure improvements are natural.
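The vocal workflow above amounts to drawing breakpoints and interpolating between them. Here is a minimal sketch, assuming the vocal is a list of samples and the automation is a list of (time in seconds, dB offset) nodes. The node values and function names are hypothetical, and a DAW's automation lane does the same job graphically.

```python
import bisect


def db_to_gain(db):
    """Convert a level change in decibels to a linear multiplier."""
    return 10.0 ** (db / 20.0)


def automation_db(time_s, nodes):
    """Linearly interpolate the automation curve (in dB) at `time_s`.

    `nodes` is a time-sorted list of (seconds, dB offset) breakpoints.
    Before the first node and after the last one the curve holds its end value.
    """
    times = [t for t, _ in nodes]
    if time_s <= times[0]:
        return nodes[0][1]
    if time_s >= times[-1]:
        return nodes[-1][1]
    i = bisect.bisect_right(times, time_s)
    (t0, d0), (t1, d1) = nodes[i - 1], nodes[i]
    return d0 + (d1 - d0) * (time_s - t0) / (t1 - t0)


def apply_ride(samples, sample_rate, nodes):
    """Scale every sample by the gain the automation curve gives at its time."""
    return [
        s * db_to_gain(automation_db(n / sample_rate, nodes))
        for n, s in enumerate(samples)
    ]


# Hypothetical ride: lift a buried word by 2 dB between 1.0 s and 1.5 s,
# with 100 ms ramps on either side to avoid an abrupt jump.
nodes = [(0.9, 0.0), (1.0, 2.0), (1.5, 2.0), (1.6, 0.0)]
```

Working in decibels and converting to a linear gain only at the end keeps the ramps perceptually even, which is why the nodes are stored as dB offsets.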

Mastering Techniques

Mid/Side Processing

- Adjusting the stereo width by independently processing the “mid” (center) and “side” (stereo) channels for a wider or more focused sound.

Mid/Side processing is a technique that allows you to manipulate the “Mid” (center) and “Side” (stereo) components of a signal separately. This is especially useful in mixing and mastering for controlling stereo width, enhancing focus, and fixing spatial imbalances. Below is a comprehensive guide to understanding and using M/S processing effectively.

1. What is Mid/Side (M/S) Processing?

In a stereo signal:

- Mid (M): The mono information, shared equally by the left and right channels (center elements like vocals, bass, kick drum, snare).

- Side (S): The stereo information, or the differences between the left and right channels (reverbs, panned instruments, room ambiance).

By splitting audio into Mid and Side components, you can adjust the spatial characteristics of a track or mix without affecting the entire stereo image equally.

2. How M/S Works

Encoding

- A stereo signal is encoded into Mid/Side:

- Mid = (Left + Right) ÷ 2

- Side = (Left – Right) ÷ 2

Processing

- You apply EQ, compression, reverb, or other effects to the Mid or Side components independently.

Decoding

- The Mid/Side signal is converted back into stereo:

- Left = Mid + Side

- Right = Mid – Side
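The encoding and decoding formulas above can be verified with a short round trip. This is a sketch on plain lists of samples; the function names and the sample values are made up for the example.

```python
def encode_ms(left, right):
    """Stereo to Mid/Side, using the encoding formulas above."""
    mid = [(l + r) / 2.0 for l, r in zip(left, right)]
    side = [(l - r) / 2.0 for l, r in zip(left, right)]
    return mid, side


def decode_ms(mid, side):
    """Mid/Side back to stereo, using the decoding formulas above."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right


# The first sample is identical in both channels, so its Side value is zero.
left = [0.5, 0.25, -0.75, 1.0]
right = [0.5, -0.25, 0.25, 0.0]

mid, side = encode_ms(left, right)
# Any processing of `mid` or `side` would happen here.
restored_left, restored_right = decode_ms(mid, side)
```

With no processing in between, decoding returns the original left and right channels, which shows that the split itself is lossless.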

3. Tools for Mid/Side Processing

- Plugins:

- EQ: FabFilter Pro-Q, iZotope Ozone EQ, Waves H-EQ.

- Compression: Waves Center, FabFilter Pro-C, Brainworx bx_opto.

- Stereo Enhancement: iZotope Ozone Imager, Waves S1, Brainworx bx_control.

- Reverb: Valhalla Room, FabFilter Pro-R with M/S capabilities.

- DAWs:

- Many DAWs (e.g., Logic Pro, Ableton Live, Cubase) allow M/S routing natively or with plugins.

- Hardware:

- Some high-end mastering hardware offers M/S controls for analog signal chains.

4. Applications of Mid/Side Processing

1. Enhancing Stereo Width

- Boost Side Frequencies: Increase the level of Side components to widen the stereo image.

- Example: Boost high frequencies in the Side to make panned guitars or cymbals more spacious.

- Cut Mid Frequencies: Reduce Mid frequencies to create a sense of openness.

2. Focusing the Mix Center

- Boost Mid Frequencies: Enhance clarity and focus on center elements like vocals or bass.

- Cut Side Frequencies: Remove unnecessary stereo information that may clutter the mix.

3. Controlling Reverb and Ambience

- Use M/S EQ to manage reverb tails:

- Example: Cut low frequencies in the Side to clean up muddy reverb while leaving the Mid untouched.

4. Tightening the Low End

- Mono Bass: Cut low frequencies in the Side channel (below 100 Hz) to keep the bass focused in the center.

- Ensures better playback on systems with mono subwoofers.
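
A mono-bass filter of this kind might look like the sketch below. It assumes a very gentle one-pole high-pass (about 6 dB per octave) applied to the Side only; commercial plugins typically use steeper filters, and the function name, the 100 Hz default, and the test tone are all illustrative choices.

```python
import math


def high_pass_side(left, right, cutoff_hz=100.0, sample_rate=44100):
    """Remove low frequencies from the Side while leaving the Mid untouched."""
    # One-pole low-pass coefficient; the high-pass is "input minus low-pass".
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    low = 0.0
    out_left, out_right = [], []
    for l, r in zip(left, right):
        mid = (l + r) / 2
        side = (l - r) / 2
        low += a * (side - low)   # low-passed Side
        side_hp = side - low      # Side content above the cutoff
        out_left.append(mid + side_hp)
        out_right.append(mid - side_hp)
    return out_left, out_right


SAMPLE_RATE = 44100
# Hypothetical test signal: one second of a 50 Hz tone panned hard left.
bass_left = [math.sin(2 * math.pi * 50 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]
bass_right = [0.0] * SAMPLE_RATE
tight_left, tight_right = high_pass_side(bass_left, bass_right, 100.0, SAMPLE_RATE)
```

After processing, the difference between the two output channels is smaller than before, so the 50 Hz tone sits closer to the center. A signal that is already mono passes through unchanged because its Side is zero.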

5. Correcting Stereo Imbalances

- Panned Instruments: Use M/S EQ to address tonal imbalances in Side components.

- Example: Boost 1–3 kHz in the Side to enhance panned guitars.

6. Mastering Adjustments

- Stereo Width Control: Subtly widen the mix by boosting Side frequencies, or narrow it by boosting the Mid.

- Fixing Problems: Use M/S EQ to reduce harshness in Side frequencies (e.g., cymbals) without affecting Mid clarity.

5. Mid/Side EQ Techniques

1. Brightening the Stereo Image

- Boost high frequencies (8–12 kHz) in the Side to add air and sparkle to stereo elements like cymbals and effects.

2. Cleaning the Low End

- Apply a high-pass filter to the Side, with the cutoff set around 80–120 Hz, to keep low frequencies centered.

3. Enhancing Vocal Presence

- Boost 2–5 kHz in the Mid to make vocals stand out.

- Cut the same range in the Side to reduce distractions.
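
As a rough sketch of how an M/S EQ could do this, the code below boosts the Mid and cuts the Side with a standard peaking biquad (coefficients from the widely used RBJ "Audio EQ Cookbook" formulas). The 3 kHz center, 3 dB amount, Q of 1.0, and all function names are assumptions picked for the example, not settings recommended by any specific plugin.

```python
import math


def peaking_coeffs(freq_hz, gain_db, q=1.0, sample_rate=44100):
    """Return normalized biquad coefficients (b0, b1, b2, a1, a2) for a peaking EQ."""
    amp = 10.0 ** (gain_db / 40.0)
    w0 = 2.0 * math.pi * freq_hz / sample_rate
    alpha = math.sin(w0) / (2.0 * q)
    a0 = 1.0 + alpha / amp
    b0 = (1.0 + alpha * amp) / a0
    b1 = (-2.0 * math.cos(w0)) / a0
    b2 = (1.0 - alpha * amp) / a0
    a1 = (-2.0 * math.cos(w0)) / a0
    a2 = (1.0 - alpha / amp) / a0
    return b0, b1, b2, a1, a2


def biquad(samples, coeffs):
    """Run samples through a direct-form I biquad filter."""
    b0, b1, b2, a1, a2 = coeffs
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x in samples:
        y = b0 * x + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        x2, x1 = x1, x
        y2, y1 = y1, y
        out.append(y)
    return out


def vocal_presence(left, right, freq_hz=3000.0, gain_db=3.0, sample_rate=44100):
    """Boost the Mid and cut the Side by gain_db around freq_hz."""
    mid = [(l + r) / 2 for l, r in zip(left, right)]
    side = [(l - r) / 2 for l, r in zip(left, right)]
    mid = biquad(mid, peaking_coeffs(freq_hz, gain_db, sample_rate=sample_rate))
    side = biquad(side, peaking_coeffs(freq_hz, -gain_db, sample_rate=sample_rate))
    out_left = [m + s for m, s in zip(mid, side)]
    out_right = [m - s for m, s in zip(mid, side)]
    return out_left, out_right
```

Calling `vocal_presence(left, right)` on two equal-length sample lists returns the processed left and right channels. Centered material around 3 kHz comes out louder, while wide material in the same range is pulled back.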

4. Reducing Harshness

- Identify harsh frequencies (e.g., 3–5 kHz) in the Side and attenuate them to smooth out the stereo field.

6. Mid/Side Compression Techniques

1. Center Focus

- Apply compression to the Mid to control the dynamics of central elements like vocals and drums.

2. Stereo Expansion

- Use lighter compression on the Side to enhance the dynamic contrast of stereo elements, making the mix feel wider.

3. Balancing Levels

- Compress the Side channel to reduce overly dynamic stereo effects and create a more cohesive mix.
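
The sketch below shows the basic idea of compressing only one component, here the Mid. It is deliberately simplified: the gain is computed sample by sample with no attack or release smoothing, which a real compressor would have. The names `compress_sample` and `compress_mid`, the -12 dB threshold, and the 4:1 ratio are illustrative assumptions.

```python
import math


def compress_sample(x, threshold_db=-12.0, ratio=4.0):
    """Apply a static compression curve to a single sample."""
    level = abs(x)
    if level == 0.0:
        return 0.0
    level_db = 20.0 * math.log10(level)
    if level_db <= threshold_db:
        return x
    # Above the threshold, every `ratio` dB of input yields 1 dB of output.
    out_db = threshold_db + (level_db - threshold_db) / ratio
    return math.copysign(10.0 ** (out_db / 20.0), x)


def compress_mid(left, right, threshold_db=-12.0, ratio=4.0):
    """Compress the Mid component and leave the Side untouched."""
    out_left, out_right = [], []
    for l, r in zip(left, right):
        mid = compress_sample((l + r) / 2, threshold_db, ratio)
        side = (l - r) / 2
        out_left.append(mid + side)
        out_right.append(mid - side)
    return out_left, out_right


# Hypothetical samples: a loud centered hit, a quiet centered note,
# and a sample that exists only in the Side.
left = [1.0, 0.1, 0.5]
right = [1.0, 0.1, -0.5]
comp_left, comp_right = compress_mid(left, right)
```

Only the loud centered sample is turned down. The quiet centered sample sits below the threshold, and the third sample has no Mid content at all, so both pass through unchanged. Swapping which component goes through `compress_sample` gives the Side-compression variant.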

7. Reverb and Delay with M/S Processing

Mid/Side Reverb

- Apply reverb only to the Side to enhance space without muddying the Mid.

- Example: Keep the vocal dry in the Mid and apply reverb to the Side for a wide, ambient effect.

Mid/Side Delay

- Use stereo delays with M/S processing:

- Mid: Subtle delay for clarity and focus.

- Side: Wider delay with modulation for a lush stereo effect.

8. Practical Workflow for M/S Processing

Step 1: Analyze the Mix

- Use a frequency analyzer or stereo meter to identify issues with the stereo field or specific frequency ranges.
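
One common kind of stereo meter is a correlation meter. A minimal sketch of the underlying calculation is shown below, assuming the whole clip is measured at once (hardware and plugin meters usually measure over a short moving window). The function name `phase_correlation` is made up for the example.

```python
import math


def phase_correlation(left, right):
    """Return the correlation of two channels, from -1.0 to +1.0."""
    dot = sum(l * r for l, r in zip(left, right))
    energy = math.sqrt(sum(l * l for l in left) * sum(r * r for r in right))
    return dot / energy if energy > 0 else 0.0


# Hypothetical clips, for illustration only.
centered = phase_correlation([0.5, -0.5, 0.25], [0.5, -0.5, 0.25])
inverted = phase_correlation([0.5, -0.5, 0.25], [-0.5, 0.5, -0.25])
unrelated = phase_correlation([1.0, 0.0], [0.0, 1.0])
```

A value near +1 means the channels are nearly identical (mostly Mid), a value near 0 means a wide mix, and a value near -1 means the channels are out of phase and will largely cancel in mono.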

Step 2: Apply M/S Effects

- EQ:

- Tame problematic frequencies in the Side (e.g., harshness, mud).

- Enhance clarity in the Mid (e.g., vocals, snare).

- Compression:

- Tighten the Mid dynamics for better focus.

- Subtly control or enhance Side dynamics for width.

Step 3: Fine-Tune the Stereo Width

- Use stereo imaging tools to adjust Side levels dynamically, ensuring the mix feels open but not overly wide.

Step 4: Monitor in Mono and Stereo

- Regularly switch between mono and stereo playback to ensure the adjustments translate well across systems.
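
If you want a number to go with the listening test, the sketch below estimates how much level a clip loses when it is folded down to mono. It assumes a simple (Left + Right) ÷ 2 fold-down and compares RMS levels; the function names are hypothetical and the approach is only one of several ways to measure this.

```python
import math


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def mono_level_change_db(left, right):
    """Level of the mono fold-down relative to the stereo mix, in dB."""
    mono = [(l + r) / 2 for l, r in zip(left, right)]
    stereo_rms = math.sqrt((rms(left) ** 2 + rms(right) ** 2) / 2)
    mono_rms = rms(mono)
    if mono_rms == 0.0:
        return float("-inf")  # nothing survives the fold-down
    return 20.0 * math.log10(mono_rms / stereo_rms)


# Hypothetical clips, for illustration only.
centered_db = mono_level_change_db([0.5, -0.5], [0.5, -0.5])
hard_left_db = mono_level_change_db([0.5, -0.5], [0.0, 0.0])
out_of_phase_db = mono_level_change_db([0.5, -0.5], [-0.5, 0.5])
```

Centered material keeps its level, a hard-panned part drops by about 3 dB, and fully out-of-phase material disappears. Large negative values are a hint that the mix leans heavily on Side content.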

9. Tips for Effective M/S Processing

- Be Subtle: Drastic changes can make the mix sound unnatural or disjointed.

- Focus on Context: Always adjust M/S settings while listening to the entire mix.

- Match the Genre: Wide, spacious mixes suit ambient or EDM, while tighter, centered mixes suit rock or classical.

- Check for Phase Issues: Side content cancels when the mix is summed to mono, so elements pushed mostly into the Side by heavy boosts can drop in level or disappear on mono playback.

Saturation and Harmonics

- Adding subtle harmonic distortion through tape emulation or saturation plugins to add warmth and character.

Digital recording studios often add harmonic distortion and saturation to tracks to emulate the warmth, richness, and character traditionally associated with analog equipment. These effects are used creatively or subtly to enhance a recording. Here are some common techniques and tools:

1. Using Saturation Plugins

Saturation plugins emulate the harmonic distortion introduced by analog tape machines, tube amplifiers, or transformers.

- Tape Saturation: Plugins like Universal Audio’s Studer A800, Waves’ J37, or Softube’s Tape replicate the slight compression, soft clipping, and frequency shaping of analog tape.

- Tube Saturation: Tube emulation plugins like Soundtoys’ Radiator, or the tube styles in FabFilter’s Saturn 2, add warmth by emphasizing even-order harmonics.

- Solid-State Saturation: Some plugins emulate the subtle distortion from solid-state circuits, offering a cleaner and tighter saturation. Black Rooster Audio’s VPRE-73, modeled on a 1073-style preamp, falls into this group.
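
At its simplest, saturation is a curve that squeezes loud samples more than quiet ones. The sketch below uses a `tanh` curve as a generic stand-in; it is not the algorithm of any plugin named above, and the `saturate` name and `drive` parameter are assumptions for the example.

```python
import math


def saturate(x, drive=2.0):
    """Soft-saturate one sample with tanh, normalized so 1.0 maps to 1.0."""
    return math.tanh(drive * x) / math.tanh(drive)


# Hypothetical sample values, for illustration only.
quiet = saturate(0.1)
medium = saturate(0.5)
full_scale = saturate(1.0)
```

Because this particular curve treats positive and negative samples identically, it generates odd-order harmonics. Emulations that aim for even-order harmonics use an asymmetric curve instead, for example by adding a small offset before the shaping stage.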

2. Parallel Saturation

This involves blending a processed (saturated) signal with the original clean signal, retaining clarity while adding warmth.

- Create a duplicate track or use a plugin with a mix knob to adjust the balance between dry and wet signals.
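
A mix knob boils down to a weighted sum of the dry and wet signals. Here is a minimal sketch, again using `tanh` as a generic saturation curve; the function name and the default `drive` and `mix` values are illustrative assumptions.

```python
import math


def parallel_saturate(samples, drive=4.0, mix=0.3):
    """Blend a saturated copy of the signal with the clean original.

    mix = 0.0 returns the dry signal, mix = 1.0 returns only the wet signal.
    """
    out = []
    for x in samples:
        wet = math.tanh(drive * x)
        out.append((1.0 - mix) * x + mix * wet)
    return out


# Hypothetical clip, for illustration only.
clip = [0.5, -0.25, 0.1]
blended = parallel_saturate(clip)
```

With a low `mix` value the transients of the clean signal stay largely intact while the saturated copy fills in underneath.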

3. Adding Harmonic Exciters

Harmonic exciters add subtle harmonic overtones to enhance clarity and presence. They are often used to brighten tracks without boosting EQ excessively.

- Popular tools: iZotope’s Exciter in Ozone, Waves’ Aphex Vintage Aural Exciter.

4. Clipping for Harmonic Content

Soft or hard clipping is used to shape transients and add harmonics. Soft clipping can emulate the behavior of analog gear, while hard clipping creates more pronounced distortion.

- Tools like StandardCLIP, FabFilter’s Pro-L 2, or Ableton’s built-in Saturator can be adjusted for various levels of clipping.
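
The difference between the two clipping styles is easy to see in code. The sketch below is a generic illustration, not how any of the tools above are implemented; the function names and the 0.8 ceiling are assumptions.

```python
import math


def hard_clip(x, ceiling=0.8):
    """Flatten anything beyond the ceiling; leave everything else untouched."""
    return max(-ceiling, min(ceiling, x))


def soft_clip(x, ceiling=0.8):
    """Bend the signal smoothly toward the ceiling using a tanh curve."""
    return ceiling * math.tanh(x / ceiling)


# Hypothetical sample values, for illustration only.
peaks = [0.5, 0.8, 1.0]
hard = [hard_clip(x) for x in peaks]
soft = [soft_clip(x) for x in peaks]
```

Hard clipping leaves the 0.5 sample alone and chops the 1.0 sample flat at the ceiling. Soft clipping starts rounding the signal well before the ceiling, which is why it tends to sound gentler.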

5. Dynamic Saturation

Applying saturation only to specific dynamic ranges, such as louder transients or peaks, helps retain a clean signal while adding color during intense moments.

- Many compressor plugins with a saturation stage, such as Klanghelm’s MJUC, apply harmonic distortion dynamically.
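
One simple way to picture dynamic saturation is a curve that leaves quiet samples alone and only bends the part of the signal above a threshold. The sketch below works on individual samples rather than on a smoothed level envelope, which is a simplification compared with a real compressor-style design; the function name and the 0.5 threshold are assumptions.

```python
import math


def dynamic_saturate(x, threshold=0.5):
    """Pass samples below the threshold untouched and soft-saturate the excess.

    threshold must be greater than 0 and less than 1.
    """
    level = abs(x)
    if level <= threshold:
        return x
    headroom = 1.0 - threshold
    shaped = threshold + headroom * math.tanh((level - threshold) / headroom)
    return math.copysign(shaped, x)


# Hypothetical sample values, for illustration only.
quiet_out = dynamic_saturate(0.3)
loud_out = dynamic_saturate(1.0)
```

The quiet sample comes out exactly as it went in, while the loud one is rounded off to a value between the threshold and full scale.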

6. Multi-Band Saturation

Applying saturation to specific frequency bands allows precise control over which parts of the audio spectrum get enhanced.

- Multi-band plugins like FabFilter’s Saturn 2, iZotope’s Trash 2, or Melda’s MSaturatorMB let you apply saturation to selected ranges, e.g., boosting the midrange for vocals or adding grit to the low end for bass.
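
A two-band version of the idea can be sketched as follows: split the signal at a crossover, saturate only the low band, and add the bands back together. The one-pole crossover used here is far gentler than what commercial multi-band plugins use, and the function name, the 200 Hz crossover, and the `low_drive` value are assumptions for the example.

```python
import math


def multiband_saturate(samples, crossover_hz=200.0, sample_rate=44100, low_drive=3.0):
    """Saturate only the content below crossover_hz and pass the rest through."""
    a = 1.0 - math.exp(-2.0 * math.pi * crossover_hz / sample_rate)
    low = 0.0
    out = []
    for x in samples:
        low += a * (x - low)   # low band (one-pole low-pass)
        high = x - low         # high band is whatever the low band left behind
        # Dividing by low_drive keeps quiet low-band material at its original level.
        low_sat = math.tanh(low_drive * low) / low_drive
        out.append(low_sat + high)
    return out


SAMPLE_RATE = 44100
# Hypothetical test tones: one second each of a 50 Hz and a 5 kHz sine wave.
bass = [math.sin(2 * math.pi * 50 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]
treble = [math.sin(2 * math.pi * 5000 * n / SAMPLE_RATE) for n in range(SAMPLE_RATE)]
bass_out = multiband_saturate(bass, sample_rate=SAMPLE_RATE)
treble_out = multiband_saturate(treble, sample_rate=SAMPLE_RATE)
```

The 50 Hz tone falls mostly in the low band and is heavily shaped, while the 5 kHz tone passes through almost untouched. Because the two bands add up to the original signal, anything too quiet to trigger the saturation comes out essentially unchanged.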

7. Overdriving Preamps or Console Emulations

Plugins emulating analog consoles or preamps introduce natural harmonic distortion and saturation when driven hard.
