|

Introduction

We have received a

lot of feedback from the DVD Benchmark and Progressive Scan Shootouts. Some

of it has been positive and some of it not, but surprisingly, the majority of responses

from manufacturers have been positive. The people most upset by our findings are those who

own players that did not fare well in our tests. A link to the shootouts

database,

including our latest Shootout (January 2003), is at the bottom of this

page, immediately below "Test Results". You can search the database using criteria that are important to you.

Please let us know if you like it and how we can improve it. No one else has something like this, so it is a learning experience for us

too.

Our Vision

The progressive

scan shootout results are not based on features, cost,

build quality, or

audio performance. We're primarily concerned with DVD players

as video playback devices. We're not trying to do a DVD-A or SACD

comparison, and we think most people use an outboard receiver or processor

for Dolby Digital. Thus we care only that the DVD player sends the Dolby

Digital bitstream to the receiver or processor properly, and we're confident

that all of them do. We have concentrated our efforts on where

it matters most: the image delivered on-screen. Our goal is to identify the

DVD players most capable of reproducing an image that is most faithful to

what the film-makers intended. In other words, we are looking for an

'accurate' image.

If you are looking

for reviews that detail the feature sets of players, or that simply make you feel

good about your purchase, you might be disappointed with the shootouts. Our

goal is to push each player to its limits. We want to identify its strengths and

weaknesses in hopes that manufacturers will use this information to build

better products that we all can enjoy.

It's worth noting

(again) that we specifically designed our tests to isolate and magnify small

differences between players that we think are important. If you look at the

lowest-rated players and the highest-rated side-by-side playing a high

quality, properly flagged movie (Superbit Fifth Element, anyone?),

the differences will be subtle. This is the way most reviewers evaluate

players, and we think it's a worthless technique for product reviewing.

What makes the

most difference, in our opinion, is documenting when the player screws up. How often

does it screw up? Under what circumstances? What kind of material does it

handle poorly? How bad does it look when it screws up?

We basically think

that it's reasonable to expect your player to perform well on all

discs, not just most discs.

We have worked

hard to identify as many common problems as possible in terms of what you

will run into with a progressive scan player. With each shootout we have

tried to include new tests based on new problems that have been encountered.

It is our belief that if we don't give the manufacturers something to improve

on, then products will not evolve.

The problems we

test for are not unique to the discs we use; they are the types of

problems that many discs suffer from. All too

often, people have associated a problem with the particular disc we use to test for it. For

example, we use The Big Lebowski to test for Bad Edits. People have

incorrectly assumed that since they don't have Big Lebowski, they don't

care about the problem. We are trying to address this in Shootout 3. We have

renamed all tests to indicate what each test represents, and we simply list the disc we

used to test for that problem. We have also updated Shootouts 1 and 2 to

reflect this.

We chose test

discs based on the fact that everyone has access to them in stores or DVD

rental places. You can use the same discs that

we do when evaluating a DVD player at your local dealer. Most dealers will

let you try your own material.

We simply do not

have enough time or resources to perform the DVD Benchmark in its entirety

on every player.

There has been a lot of feedback about the first two shootouts because they

did not cover the basic video performance. We have decided to take some of

the basic video tests from

Part 1 of the Benchmark and combine

them with the deinterlacing tests from

Part 5. We hope this new information is what you

are looking for, and we are sorry that we can't give you all of it.

Our Style

Most AV reviewers

in general favor a "holistic" approach to reviewing. The idea is that the

best way to evaluate a player is to play with the knobs, check out the

features and output jacks, and then kick off your shoes and watch a few

top-notch DVDs.

Our way is to take

the thing apart, try to figure out (in obsessive detail) how all the pieces

work and how to highlight the ways it differs from other similar units. It's

an engineer's way of doing things.

If you tend to be

fairly laid back in your viewing, and not too worried about a glitch here or

there, or a disc that looks soft, things like that, then the holistic

approach is probably fine.

If you get annoyed

every time your player glitches, or you just are curious about how all this

stuff works, then clearly our obsessive-compulsive reviewing style is right

up your alley. We make no claims to have the perfect reviews - we tell

people what we like, and we tell them exactly why.

Now, as to why the

"other guys" will praise highly a player that we can show has serious

defects, well, that's complicated. In some cases, what we consider a serious

defect is not considered to be very important by some other writers.

In other cases,

their testing is just not as sensitive. That's not a dig - their testing is

not designed to be as sensitive. It's based on the "watch some good movies"

approach, which is by its nature more slapdash. The implication is that it's

important to focus on the forest (mainstream DVDs when everything is going

right) and not the trees (unusual, smaller DVDs and occasional glitches). We

like to dig around in the trees.

We personally

don't like the holistic style of reviewing (surprise, surprise), at least

for DVD players. We are gratified that there are others who feel the same

way. We feel strongly that audio-visual equipment can be evaluated using an

engineering approach because it has specific, measurable criteria for

success. If we were reviewing restaurants, on the other hand, that wouldn't

be true. Restaurants are not as amenable to that approach because there is

no agreement on what constitutes a "perfect" meal. But with video, there is

a holy grail: accuracy.

Would you like

someone to review a computer by just sort of booting it up and playing around with

the mouse, and then writing "I felt like the desktop had a stronger

presence, and my computing tasks took on a greater importance, as though a

veil had been lifted from my monitor"? No! You want people to measure things

like speed and heat dissipation and reliability and features. And what is a

DVD player if not a dedicated movie-playing computer? Some DVD players do

their task of showing a glitch-free accurate image better. They do it on a

wider variety of material, and they do it with fewer problems.

However, all that

said, the main reason we launched into this whole shootout process is that

we're huge movie fans, and we were annoyed by constant glitches distracting

us from enjoying our movies. If we hadn't all been wound so tight, we

probably would have just shrugged it off. Plus, we're all obsessed with

finding out how things really work. However, since our first shootouts were

published, manufacturers have been responding and fixing the problems. This is

very gratifying.

The important

thing is to enjoy the DVDs. If someone has a player that they've been

enjoying, and then reads our report and finds out that it's not so great by

our standards, does that negate their previous enjoyment? Of course not. And

if they don't see any of the glitches we show, then they don't really have

any reason to change players.

The Truth About the Flags

At the end of the

day, we believe you should not need a fancy cadence-based algorithm to deinterlace film sources. A DVD player should be able to simply use the

flags on the disc to reconstruct the original frame of film. The problem is

DVD authoring is far from perfect, although others may try to convince you

otherwise. We are still waiting for them to provide data to back up their

claims that encoding errors are nonexistent.

In the meantime,

we have collected our own data. We have analyzed the content on lots of

DVDs, including some of the most recent big name titles. (We have been doing

this for over 2 years now.) From what we can

tell with our data, DVD authoring is not improving. It seems to be staying

constant, which makes sense to us because companies are trying to save money

and one way to do that is to not purchase the latest authoring software or

encoders every month.

Here is a small

sample of DVD titles we have tested. We have included some big blockbusters as well as TV

show content. We have listed the number of times the MPEG encoding has

changed from 3-2 to video / 3-3 / 2-2. We have also listed if the chapter

breaks on the disc have caused an unnecessary drop to video.

| DVD (Movie Title) | # of Deviations from 3-2 | Error on Chapter Break |
| --- | --- | --- |
| Amélie | 100 | Yes |
| Brotherhood of the Wolf | 128 | No |
| Felicity | All Video | All Video |
| The Fifth Element (SB) | 126 | Yes |
| Friends | All Video | All Video |
| Glengarry Glen Ross | 86 | No |
| Halloween Resurrection | 81 | Yes |
| Ice Age | 48 | No |
| Lilo and Stitch | 55 | No |
| LOTR | 170 | Yes |
| LOTR Extended Ed. | 167 | No |
| MIB 2 | 120 | Yes |
| Monsters Inc | Alternating PF flag | No |
| Once and Again | All Video | All Video |
| Pulp Fiction: SE | 345 | Yes |
| Reservoir Dogs: SE | 89 | No |
| Spider-Man | 136 | Yes |
| Star Wars Episode 2 | 172 | No |

Match Your Socks, Not Your Electronics

Something we have

always found unusual, and it is not limited to DVD players or even consumer

electronics, is brand loyalty. Just because you own a "Brand X" TV does

not mean you need a "Brand X" DVD player. Chances are the people who designed and

built the TV have no interaction with those who designed and built the DVD

player. They might not even be at the same facility or even in the same city.

There is also a good chance that they never tested with each other's products.

When you buy a DVD

player, you should ignore the brand name. First, you need to decide what is most

important to you: absolute performance or features. Next, figure out the

maximum amount you want to spend and start from there.

There are very few

DVD player manufacturers who actually design their players from the ground

up. In the mainstream, we know that Denon, Meridian, Panasonic, Pioneer, Sony, and Toshiba do

build many of their DVD products in-house. This is not an exhaustive list; it is only a

small sample. Most others will OEM a kit and start from there. In fact, some

companies will OEM kits from multiple manufacturers to build products at

different price points.

As of this

writing, there is only one universal player that does not suffer from the

Chroma Upsampling Error (CUE). If you want a universal player for the sake of

convenience, we fully understand that. Just don't expect the universal

players to do everything great.

Lastly, buying one

brand of products to be able to use a common remote control is a futile

exercise! So-called "Universal" remotes will only do the basic functions, so

you still need the dedicated remote that came with the product.

A Sheep in Wolf's Clothing

A while back Jim

Doolittle found a great example of why film mode deinterlacing (3-2 pulldown

detection) is so important. Up until then, the best example was a test

pattern, but too many people like to dismiss test patterns. We believe that

the reason people dismiss video test patterns is because they are coming

over from the audio side, which is filled with a lot of voodoo and black

magic. Video test

patterns are extremely valuable and are used throughout the professional video

world.

The example that

Jim came up with is the opening of Star Trek: Insurrection. This is a long

shot that pans left to right and then back again over a small village. It

contains several buildings with fine detail and angles. If you do not have

film mode, the angles show up as jagged instead of smooth diagonals. The

problem now is that people have come to believe this is some form of torture

test, which is simply not true.

We looked at the

flags and they are just fine during the opening scene. There is also a

strong 3-2 pulldown signature, which means any algorithm with 3-2 pulldown

detection will have no problems deinterlacing this scene while maintaining full

vertical resolution with smooth diagonals.

This scene is only

useful to demonstrate to someone why they want film mode detection. It

offers nothing when comparing two different progressive scan players that

have film mode detection.

Ok, we lied. There

is actually a second use for that scene. Because it is a long pan, it is

good to demonstrate judder caused by 3-2 pulldown. People love their HTPCs

because they can remove this judder.
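
To make the 3-2 cadence and the resulting judder concrete, here is a minimal Python sketch. It is only an illustration: the function name `pulldown_32` is hypothetical, and it ignores top/bottom field parity, which a real telecine also tracks. It shows how four film frames become ten video fields, with frames alternately held for three fields and two fields.

```python
def pulldown_32(frames):
    """Expand film frames into a 3-2 field sequence (illustrative only).

    Even-indexed frames are held for 3 fields, odd-indexed frames for 2,
    so 24 film frames per second become 60 fields per second.
    Field parity (top/bottom) is deliberately ignored in this sketch.
    """
    fields = []
    for index, frame in enumerate(frames):
        repeat = 3 if index % 2 == 0 else 2
        fields.extend([frame] * repeat)
    return fields


fields = pulldown_32(["A", "B", "C", "D"])
print(fields)  # ['A', 'A', 'A', 'B', 'B', 'C', 'C', 'C', 'D', 'D']
```

Because frames A and C stay on screen for 3/60 of a second while B and D stay for only 2/60, motion in a slow pan advances unevenly. That unevenness is the judder described above.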

Another popular

scene is the opening of The Fifth Element with the steps leading into the

pyramid. It too is not a torture test of any kind and is just another good

example of why film mode detection is so important.

Pressing On

As we have stated

in Part 5, the only real way to evaluate a progressive scan player is to

identify problem material. If there are no problems they will all perform

the same with regard to constructing a progressive image. It is when the

problems arise that the differences between players will become known.

Everyone involved

with the progressive scan shootout here at Secrets is a film lover. When we

sit down to watch a movie, we don't want to have to worry about what

deinterlacing mode to put the player into, we simply want to be engaged by

the story. We want to forget we are watching a DVD in our living room. There

is nothing worse than being immersed in the story when something

happens on screen that should not have happened. When this happens you are

reminded that you are at home watching video. It is like someone throwing

cold water in your face.

We want a

progressive scan player that is looking out for us. It is protecting us from

all the unnecessary artifacts out there. With a great progressive scan

player we can once again sit back, relax, and enjoy the film.

Evaluation Equipment and Process

We looked at all

of the progressive DVD players using a Sony VW10HT LCD projector, which was

selected because of its ability to handle a wide variety of different signal

types and its very high resolution display. The projector's one major

weakness is a lack of a very dark black level, but that didn't affect the

tests we were making. We used the custom-made Canare LV-61S cables for most

players, and a BetterCables Silver Serpent VGA to 5 RCA cable for the PC DVD

players and the iScan Pro.

Players were

scored on a sheet that had a pass/fail designation for each of the tests,

plus areas for entering numerical scores for certain tests, and notes for

anything unusual or interesting. All tests were witnessed by at least two

people. For looking at the chroma separate from the luma, we used an iScan

Pro, because it produces H and V sync signals even with Y'P'bP'r output, and

the 10HT is able to sync to external H and V sync even in Component mode, so

we were able to remove the Luma cable and still have a stable picture.

A Tektronix

TDS3054 with the video option was used to measure the progressive outputs on

each DVD player along with Avia and Video Essentials. We used the built-in

cursors and math functions to measure the various video levels.

Notes on the Tests (How We Score)

We reworked the

way we presented our tests for shootout 3, in a way that we hope will make

it easier to see exactly what a particular player does or doesn't do well.

Our old system graded players on whether they could handle a particular

scene or disc properly, but people told us they weren't always sure what a

player passing or failing a disc meant. Some people thought that if they

didn't like Titanic, they didn't need to pay attention to whether a player

passed the Titanic test. What was missing was a clear statement in the chart

of the specific deinterlacing problem that was being tested for.

We are still using

most of the same discs we used for shootouts 1 and 2, but we've broken the

results down by deinterlacing issue. Some issues are covered by multiple

discs, and some discs give results for multiple issues. But we think it will

be easier to tell exactly why a player did or didn't do well.

In addition, we've

given all the tests weights, based on how visible or distracting a

particular problem is and also on how common that problem is. If a problem

is not very distracting, and will only be seen on 0.1% of discs, we weighted

it low. Problems that are visible on many discs and cause significant,

visible artifacts we weighted high. Weightings are from 1 to 10.

Most tests either

pass or fail. A few have gradations of failure. If a test didn't pass, but

wasn't as bad as on the worst players, we gave partial credit in some cases.

Basically, each player starts with 0 points. For each test, a weighted test

score is calculated, as follows:

First, the raw

test score is calculated on a scale from 0 to 10, either from pass/fail (10 or 0) or from the points table

listed with that test. Second, the weighted test score is calculated by

multiplying the weighting of the test by the raw score and dividing by 10.

In equation form:

weighted_test_score = raw_test_score * test_weight / 10

All of the

weighted test scores are added up for all the tests to form the final

weighted score. Tests that have no data are counted as zero. In addition

the total maximum score the player could have gotten (all the weights for

all the tests that the player completed added together) is calculated.

The final score is

expressed as a percentage of the maximum score for that player. In equation

form:

final_score = final_weighted_score / max_weighted_score * 100

So in other words,

if the maximum score for a player, given the tests actually applied, is 150,

but that player actually scored 75, the final score is 75 / 150 * 100 = 50.

A different player, that had a slightly different set of tests, could have

scored 200, but only scored 75. That player's score is 75 / 200 * 100 =

37.5, which is rounded to 38. All of the intermediate scores should be kept

as floating point numbers, but the final score should be rounded to the

nearest integer.

So any player that

passes every test we gave it gets 100, and every player that fails every

test we gave it gets 0.
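
The scoring arithmetic described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names and the `(raw_score, weight)` tuple layout are made up for this example, a test with no data is represented as `None`, and we assume round-half-up so that 37.5 becomes 38 as in the example above.

```python
import math


def weighted_test_score(raw_test_score, test_weight):
    """Weighted score for one test: raw score (0-10) times weight (1-10), divided by 10."""
    return raw_test_score * test_weight / 10


def final_score(results):
    """Final percentage score for one player.

    `results` is a list of (raw_score, weight) tuples (hypothetical layout).
    A raw_score of None means the test was not run: it adds nothing to the
    player's total and is left out of the maximum possible score.
    """
    total = 0.0
    maximum = 0.0
    for raw_score, weight in results:
        if raw_score is None:
            continue
        total += weighted_test_score(raw_score, weight)
        maximum += weight
    if maximum == 0:
        return 0
    # Round half up (assumed), keeping intermediate values as floats.
    return math.floor(total / maximum * 100 + 0.5)


# One weight-10 test passed, one weight-10 test failed.
print(final_score([(10, 10), (0, 10)]))   # 50
# 6 points earned out of a possible 16 is 37.5, which rounds to 38.
print(final_score([(10, 6), (0, 10)]))    # 38
```

Note that a skipped test leaves the percentage unchanged, which is why players with slightly different test sets can still be compared.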

The upside:

-

All players' scores

can be reasonably compared, even though they had slightly different tests.

-

The scores always

remain in a reasonable range.

-

The weightings are

still very important; highly weighted tests affect the outcome much more

than low-weighted tests.

The downside:

-

A player doesn't

get any real credit for passing a harder set of tests.

-

You can get

situations in which two players have essentially the same performance, but

one gets a slightly higher score because it had one or two extra tests,

which it passed.

We believe that the two

downsides are not that bad. Looking at our data, each player was given a

very comparable set of tests, and the differences in scoring are not going

to amount to more than 1 or 2 points, which we've said in the notes is not a

significant difference.

We have broken out

the shootout into two sections: Deinterlacing and Core. Deinterlacing will

contain all of the progressive scan specific tests. We have grouped the

deinterlacing tests based on what it is they are testing. Core will contain

the chroma upsampling tests, video measurements, and some performance

results.

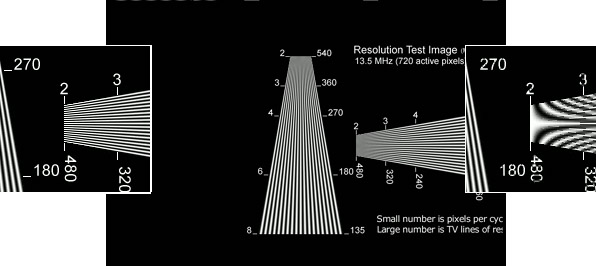

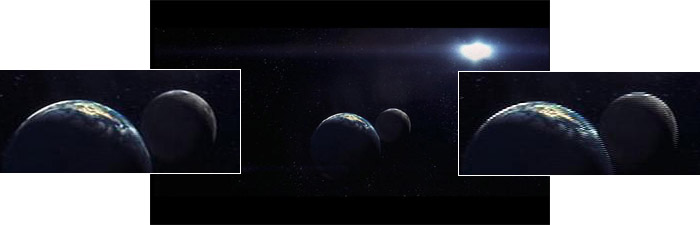

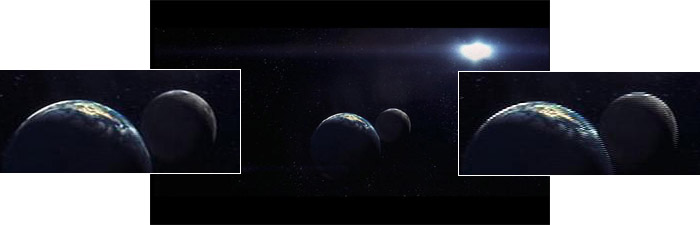

In the sample pics

below, the detailed inset pictures on the left are correct and the ones on

the right are incorrect.

The tests

are listed here in the order we will present them to you in the test

results:

Deinterlacing

| Test Name: |

3-2 Cadence, Film Flags |

| What it Measures: |

Can the deinterlacer go into film mode

and stay there, with standard NTSC film-sourced material. |

| Pass/Fail Criteria: |

Deinterlacer must go into film mode and stay there for

the duration of the test (about 45 seconds). |

| Disc(s)/Chapters used: |

WHQL, Film Alternate 1 |

| Weight: |

10 |

| Notes: |

This is a fundamental deinterlacing task. Any

deinterlacer that can't handle this doesn't really have a working film

mode. To get to this test on WHQL:

- From the Main Menu, Choose Video Port

Performance

- From the Video Port Performance menu,

choose Film

- From the Film menu, choose Basic

|

|

|

|

|

| Test Name: |

3-2 Cadence, Alt. Flags |

| What it Measures: |

Can the deinterlacer go into film mode and stay there,

with NTSC film-source material that was authored using an encoder that

turns the progressive flag off every other frame. |

| Pass/Fail Criteria: |

Deinterlacer must go into film mode and stay there for

the duration of the test (about 45 seconds). |

| Disc(s)/Chapters used: |

WHQL, Film Alternate 2 |

| Weight: |

8 |

| Notes: |

This is just as fundamental as the previous test, but

this material isn't as common. However, given the prominence of some

of the titles that have this problem, we felt it was important to

weight it fairly high. We removed this test from shootout #2, because

in shootout 1, every player that passed the standard film test

also passed this one. However, in doing the preliminary testing for

shootout #3, we found two players that failed it. Clearly, we need to

keep this around for the time being. To get to

this test on WHQL:

- From the Main Menu, Choose Video Port

Performance

- From the Video Port Performance menu,

choose Film

- From the Film menu, choose Alternate

|

|

|

|

| Test Name: |

3-2 Cadence, Video Flags |

| What it Measures: |

Can the deinterlacer go into film mode and stay there,

with NTSC film-source material that was authored without any inverse

telecine, and with no frame marked progressive (i.e., flagged as video). |

| Pass/Fail Criteria: |

Deinterlacer must go into film mode and stay there for

the duration of the test (about 45 seconds). |

| Disc(s)/Chapters used: |

Galaxy Quest, trailer; More Tales of the

City |

| Weight: |

7 |

| Notes: |

This is not as common on major Hollywood movies.

However, the results of failure are very visible, and handling film

mode is fundamental to what a progressive DVD player is supposed to

do. |

|

|

|

| Test Name: |

3-2 Cadence, Mixed Flags |

| What it Measures: |

Can the deinterlacer go into film mode and stay there,

with NTSC film-source material which is changing back and forth from

standard film-style flags to video-style flags. |

| Pass/Fail Criteria: |

Deinterlacer must go into film mode and stay there for

the duration of the test (about 45 seconds). |

| Disc(s)/Chapters used: |

WHQL, Chapter stops 1 & 2 |

| Weight: |

6 |

| Notes: |

This particular flaw is extremely common, even on

major Hollywood releases. However, it tends to happen in short bursts,

especially right around the chapter stops, so it's not as bad as

staying in video mode for the whole movie. To

get to this test on WHQL:

- From the Main Menu, Choose Video Port

Performance

- From the Video Port Performance menu,

choose Variations

- From the Variations menu, choose

Chapter Breaks

- From the Chapter Breaks menu, choose

Alternate 1.

After Alternate 1 has completed, run

Alternate 2. |

|

|

|

|

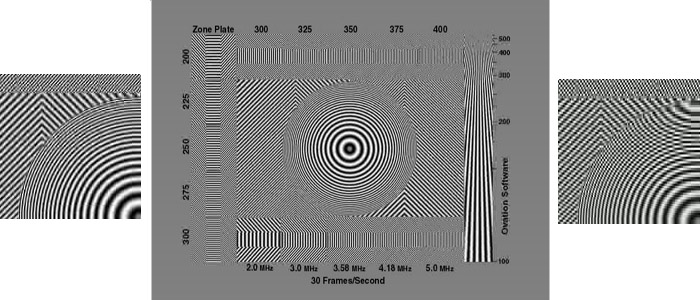

| Test Name: |

2-2 Cadence, Film Flags |

| What it Measures: |

Can the deinterlacer go into film mode and stay there,

with 30 fps progressive material with standard progressive flags. |

| Pass/Fail Criteria: |

Deinterlacer must go into film mode and stay there

for the duration of the test (about 45 seconds). In the case of Natural Splendors, you should not see any

visible jaggies. In the case of Avia, there should be no moiré on the

zone plate. Avia is a better disc to use. We only provided Natural

Splendors so you can see what happens on real material. Some players

are more severe than others. |

| Disc(s)/Chapters used: |

Natural Splendors, chapter 6; Avia, Zone plate |

| Weight: |

5 |

| Notes: |

2-2 pulldown isn't that common in the US. In Europe,

this test would be weighted 10, because almost all PAL film-sourced

discs are flagged this way. We made an interesting discovery with the

Avia pattern below. It is actually encoded as a progressive_sequence,

which is not allowed. If you try to force it into video mode on a PC,

it will still weave it together correctly with a slight loss of

resolution, which suggests that PC software players actually look at

this flag. None of the flag reading DVD players outside of the PC

recognized this flag. |

|

|

|

| Test Name: |

Film Mode, High Detail |

| What it Measures: |

Can the player go into film mode and

stay there, with material with high spatial frequency and detail. |

| Pass/Fail Criteria: |

Player must stay in film mode for the duration of the

test (about 10 seconds) |

| Disc(s)/Chapters used: |

Super Speedway, chapter 7, where the car goes by the

stands. |

| Weight: |

6 |

| Notes: |

This is a test that some deinterlacers with an otherwise

good film mode fail, probably because the high frequency detail

in the vertical direction looks like combing, and it drops into video

mode to avoid artifacts. We think this is a flaw, when the material

otherwise has a strong 3-2 pulldown signature. Surprisingly, this

material is relatively common. If a player fails this test, it's

likely that it drops to video mode much more often than necessary. |

|

|

|

|

| Test Name: |

Bad Edit |

| What it Measures: |

Whether the player can handle hiccups in the 3-2

cadence without combing. |

| Pass/Fail Criteria: |

|

Result |

Criteria |

Points |

| Pass |

0 Combs |

10 |

| Fail |

1 Comb |

3 |

| Fail+ |

2+ Combs |

0 |

|

| Disc(s)/Chapters used: |

Big Lebowski, Making-of documentary |

| Weight: |

10 |

| Notes: |

This is a huge, huge issue, and the thing that really

separates the best deinterlacers from the worst. Combing is one of the

most visible artifacts, and avoiding it on material like this is

difficult. We feel strongly that players that can't pass this test are

not worth considering, given that there are inexpensive players that

do pass it. |

|

|

| Test Name: |

Video-to-Film Transition |

| What it Measures: |

Can the player detect a transition from film to video,

and avoid combing. |

| Pass/Fail Criteria: |

Player must not comb during the test duration (about

30 seconds) |

| Disc(s)/Chapters used: |

WHQL, Mixed Mode |

| Weight: |

6 |

| Notes: |

It's relatively common for lesser deinterlacers to

comb when the film cadence abruptly changes to a video cadence. This

is not a common situation in most movies, but it's very common in film

behind-the-scenes documentaries such as are found on special-edition

DVDs. When the 60fps logo goes away, pay

attention to the transition. Does it comb on this transition? You will

have to watch the entire sequence, which is about 1 minute long. It

may not comb on every transition. If it combs on even one, it is a fail.

To get to this test on WHQL:

- From the Main Menu, Choose Video Port

Performance

- From the Video Port Performance menu,

choose Variations

- From the Variations menu, choose Mixed

Mode

- From the Mixed Mode menu, choose Basic

Y

|

|

|

|

|

| Test Name: |

Recovery Time |

| What it Measures: |

Can the player re-enter film mode in a reasonable

amount of time after leaving video mode. |

| Pass/Fail Criteria: |

Time to re-enter film mode after being

in video mode:

|

Result |

Criteria |

Points |

| Pass (Excellent) |

Within 5 frames |

10 |

| Borderline (OK) |

6 to 15 frames |

5 |

| Fail (Poor) |

>15 frames |

0 |

|

| Disc(s)/Chapters used: |

WHQL, Mixed Mode |

| Weight: |

6 |

| Notes: |

The best deinterlacers return to film mode very

quickly. If they stay in video mode too long, the chance of annoying

deinterlacing artifacts appearing gets larger. |

|

|
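
For the graded tests, the points tables for Bad Edit and Recovery Time above amount to simple lookups from an observation to a raw score. The Python sketch below is only an illustration; the function names are hypothetical, and the thresholds are copied from the two tables.

```python
def bad_edit_points(combs):
    """Raw score for the Bad Edit test, from the number of combs observed."""
    if combs == 0:
        return 10  # Pass
    if combs == 1:
        return 3   # Fail
    return 0       # Fail+ (2 or more combs)


def recovery_time_points(frames):
    """Raw score for the Recovery Time test, from frames taken to re-enter film mode."""
    if frames <= 5:
        return 10  # Pass (Excellent)
    if frames <= 15:
        return 5   # Borderline (OK)
    return 0       # Fail (Poor)


print(bad_edit_points(1))        # 3
print(recovery_time_points(12))  # 5
```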

| Test Name: |

Incorrect Progressive Flag |

| What it Measures: |

Can the player recognize that the progressive flag on

the disc is not correct, and avoid combing. |

| Pass/Fail Criteria: |

Player must not comb during the test duration (about

10 seconds for each disc) |

| Disc(s)/Chapters used: |

Galaxy Quest, main menu intro; Apollo 13, making-of

documentary |

| Weight: |

6 |

| Notes: |

Players that always trust the flags will fail this

test, because in this case, the flags are completely wrong. Material

with the progressive flag set when it shouldn't be is relatively rare,

but failure on this test is about as visible as it is possible to be. |

|

|

| Test Name: |

Per-Pixel

Motion-Adaptive |

| What it Measures: |

Are the video deinterlacing algorithms per-pixel

motion adaptive. |

| Pass/Fail Criteria: |

The two parts of the test pattern had to be displayed

solidly on screen without flickering, for the duration of the test. |

| Disc(s)/Chapters used: |

Video Essentials, Zone Plate; Faroudja test disc,

pendulum test. |

| Weight: |

10 |

| Notes: |

This is the baseline of quality for video

deinterlacing. Anything less is a compromise. The example below is a

simulation. You will notice that the 'O' and 'K' are alternating. On

the real pattern, this is happening so fast that it looks like they

are just flickering but in reality, they are alternating like the

animation. |

|

|

Core

| Test Name: |

Sync Subtitle to Frames |

| What it Measures: |

Does the MPEG decoder synchronize the

subtitles with the film frames, avoiding combing in the subtitles. |

| Pass/Fail Criteria: |

There had to be no combing in the

subtitles for the duration of the test (about 30 seconds) |

| Disc(s)/Chapters used: |

Abyss, chapter 4 |

| Weight: |

2 |

| Notes: |

This is an annoyance if you watch a lot

of subtitled movies. You may or may not see a comb on the particular

text below. This was created by hand to illustrate what the artifact

looks like. The comb happens very fast, but we have slowed it down here so

you can see it. The comb only occurs as the subtitle comes in or goes

out. It is never there while the text is just sitting on screen. |

|

|

|

|

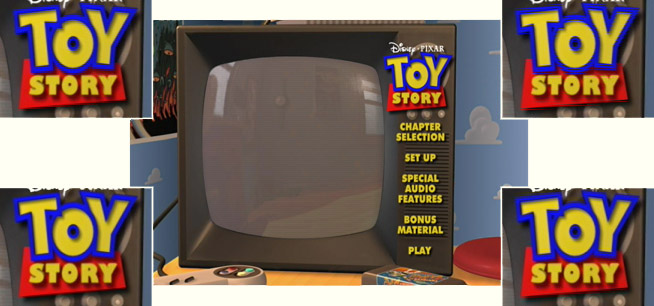

| Test Name: |

Chroma, 3-2 Film Flags |

| What it Measures: |

Is the player free of the Chroma

Upsampling Error on standard film

material. |

| Pass/Fail Criteria: |

MPEG decoder must use a progressive chroma upsampling

algorithm to pass. If it doesn't, but the player uses a deinterlacer that

hides the chroma bug, we gave partial credit.

|

Result |

Criteria |

Points |

| Pass |

No CUE |

10 |

| Borderline |

Masked CUE |

5 |

| Fail |

CUE |

0 |

|

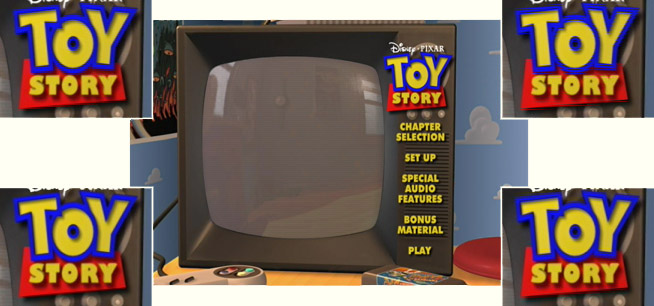

| Disc(s)/Chapters used: |

Toy Story, chapter 4 |

| Weight: |

10 |

| Notes: |

The chroma bug is visible on a huge variety of major Hollywood

releases. Once you start seeing it, it's a huge distraction. We almost wish

the weighting went to 11. |

|

|

| Test Name: |

Chroma, 3-2 Alt. Flags |

| What it Measures: |

Is the player free of the Chroma

Upsampling Error on material that

has the alternating progressive flag encoding problem. |

| Pass/Fail Criteria: |

MPEG decoder must use a progressive chroma upsampling

algorithm to pass. If it doesn't, but the player uses a deinterlacer that

hides the chroma bug, we gave partial credit.

| Result | Criteria | Points |
| --- | --- | --- |
| Pass | No CUE | 10 |
| Borderline | Masked CUE | 5 |
| Fail | CUE | 0 |

|

| Disc(s)/Chapters used: |

Monsters, Inc., Chapter 6 |

| Weight: |

8 |

| Notes: |

As with film mode, we only weight this

lower because it's not as common as the "standard" flags. |

|

|

|

| Test Name: |

Chroma, 2-2 Film Flags |

| What it Measures: |

Is the player free of the Chroma Upsampling Error on material that is encoded 2-2 progressive. |

| Pass/Fail Criteria: |

MPEG decoder must use a progressive chroma upsampling

algorithm to pass. If it doesn't, but the player uses a deinterlacer that

hides the chroma bug, we gave partial credit.

| Result | Criteria | Points |
| --- | --- | --- |
| Pass | No CUE | 10 |
| Borderline | Masked CUE | 5 |
| Fail | CUE | 0 |

|

| Disc(s)/Chapters used: |

Toy Story (from the 3-disc Ultimate

Edition), main menu |

| Weight: |

8 |

| Notes: |

As with film mode, we only weight this

lower because it's not as common as the "standard" flags. |

|

|

|

| Test Name: |

4:2:0 Interlaced Chroma

Problem |

| What it Measures: |

Does the player have a deinterlacer or postprocessor that

hides the chroma artifacts in 4:2:0 interlaced material. |

| Pass/Fail Criteria: |

Player must mask chroma errors in interlaced material. |

| Disc(s)/Chapters used: |

More Tales of the City, Chapter 4 |

| Weight: |

5 |

| Notes: |

This is in some ways expecting too much

from current players, given that the methods for mitigating this

problem have only just become known. But we thought it was a useful

criterion. Next shootout, the weight will probably be higher. |

|

|

| Test Name: |

Video Levels (Black and White) |

| What it Measures: |

Does the player have correct voltage

outputs for black and white level. |

| Pass/Fail Criteria: |

To pass, a player has to have a black

level of 0 IRE (in the darker of its black level modes, if it has

two), and must output nominal white at 100 IRE +/- 2. |

| Disc(s)/Chapters used: |

Avia, Horizontal Gray Ramp (Title 1,

Chapter 103) |

| Weight: |

8 |

| Notes: |

If a player doesn't output standard

levels, consumers don't get good results out of the box, and it may be

impossible to get a calibration that works properly with all the other

components connected to the display. Below is

a photo from one of the DVD players, as it appears on our test scope.

In this particular case, it is the horizontal gray ramp

found on Avia.

For the

video levels, we measured black and white. According to EIA770, 480p

should have black at 0 IRE and white at 100 IRE. (Actually it is in

mV, but we prefer to report in IRE because we believe it is easier to

understand.)

A consumer display must be capable of supporting multiple TV standards, which include SD, ED, and HD. For SD sources in the US, black is at 7.5 IRE (also called Setup or Pedestal). For the rest of the world, it is at 0 IRE. For ED and HD, black is at 0 IRE.

Some DVD players allow you to turn on Setup (set black at 7.5 IRE) for ED sources. This makes sense when your display device has only one video memory and you don't want to manually change the brightness setting as you switch between, say, cable TV and your DVD player.

The problem we found is that no two DVD players call

this feature by the same name. If you look at the animated image below

you will see the same feature called by different names. Meridian and

Pioneer call it what it is and let you select 0 IRE or 7.5 IRE. The

others are a bit more cryptic. It gets even worse if you read the

instruction manual and they tell you when you should adjust it.

We have found some displays and video processors that will clip all information above 102 IRE. This is why we chose the +/- 2 IRE tolerance. Some DVD players allow you to adjust contrast to set the white level, but we base our test on the default setting. We expect a manufacturer to do the right thing on the default memory. We found many errors on players, which suggests that manufacturers are purposely changing the levels to make their players look different.

Another reason for the level differences may be the tolerance of the parts used. In this case, a manufacturer should be hand-tweaking the players using test instrumentation as they come off the production line. This is probably too much to ask for a $200 DVD player, but for anything over $1000, especially players with a THX logo, it is expected.

We had planned to use the picture controls built

into each player and tell you which settings provide the most accurate

image, but this became time consuming and we ran into problems where

the 480i and 480p outputs were not active at the same time, which made

it difficult to adjust the setting and view the output on our scope.

The good news is that, in most cases, you can erase these differences by simply using a disc with test patterns to properly set contrast, brightness, and color. Once you have done this, all players will produce the same black level and color.

Another option, which is needed on some DVD players,

is an outboard proc amp. They usually allow you to properly set all

video levels before going into a display.

Because of black being at 0 or 7.5 IRE, you need to

be extra careful when comparing two different players or even the

interlaced vs. progressive output on the same player. If your display

does not offer more than one memory, you will need to readjust your
display each time you switch between players.

One of the players in shootout 3 has white at 106

IRE. If you compare this to another player that has white at 100 IRE

you will have to either turn down contrast when you select the 106 IRE

player or turn up contrast when you select the 100 IRE player to

ensure both images are scaled properly. As a side note, if you plan to

use the 106 IRE DVD player on a digital display, you will need a proc

amp or you will not get the best possible image.
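As a rough illustration of the pass criteria and the 106 IRE example above, here is a minimal Python sketch. The function names are hypothetical, and it assumes the black and white levels have already been read off the scope in IRE.

```python
def passes_video_levels(black_ire: float, white_ire: float,
                        white_tolerance: float = 2.0) -> bool:
    """Pass criteria as stated in the text: black at 0 IRE,
    nominal white at 100 IRE +/- 2."""
    # The text gives no tolerance for black, so this sketch compares exactly.
    return black_ire == 0 and abs(white_ire - 100.0) <= white_tolerance


def contrast_gain_to_match(white_ire: float, target_ire: float = 100.0) -> float:
    """Relative contrast change needed to bring a player's white level
    to the target (illustrative only; real contrast controls are not
    calibrated in these units)."""
    return target_ire / white_ire


print(passes_video_levels(0, 100))    # within tolerance
print(passes_video_levels(0, 106))    # the 106 IRE player fails
print(round(contrast_gain_to_match(106), 3))
```

The last line shows why the 106 IRE player needs contrast turned down by roughly 6% relative to a 100 IRE player before the two images are comparable.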

Our point is that, if you see a difference in black

level or color between players, it is operator error, because those

differences don't exist when the voltage levels are the same. |

|

|

| Test Name: |

Blacker-than-Black |

| What it Measures: |

Does the player have the ability to

reproduce the picture content below black. |

| Pass/Fail Criteria: |

To pass, a player has to reproduce the below-black
portion of the PLUGE pattern. |

| Disc(s)/Chapters used: |

Video Essentials, PLUGE pattern |

| Weight: |

7 |

| Notes: |

We tested each player for the ability to pass blacker-than-black and whiter-than-white. None of the players had a problem with whiter-than-white, so
you will not see those results in the data. Below is

a photo from one of the DVD players, as it appears on our test

instrument. The ramp goes from 4% below black

to 4% above white. The spike on the left is a marker at level 16, and

the spike on the right is a marker for level 235. This pattern will be

on Digital Video Essentials. It is available now on the DVD that comes

with the book DVD Demystified 2nd Edition.

The specification CCIR 601, which is the digital representation of the

analog NTSC system, specifies the range of the luminance signal from levels 1

through 254 (Levels 0 and 255 are reserved.) with nominal 'black' at level 16

and nominal 'white' at level 235.

The range of levels from 16 - 235 is where the bulk of the picture information is placed. The extra 34 levels are there for headroom and toe room. Real-world images contain scattered blacks (Levels 1-15) and whites (Levels 236-254).

Beyond these highlights and lowlights, there are valid production uses for the extra range, including keying and the PLUGE test signal used to properly set up a display.
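The level ranges described above can be sketched in a few lines of Python. The function name and the category labels are our own, chosen for illustration; only the numeric boundaries come from the text.

```python
def classify_luma(level: int) -> str:
    """Classify an 8-bit luma code using the ranges given in the text."""
    if not 0 <= level <= 255:
        raise ValueError("8-bit luma codes run from 0 to 255")
    if level in (0, 255):
        return "reserved"
    if level < 16:
        return "below black"   # toe room, levels 1-15
    if level <= 235:
        return "nominal"       # bulk of the picture, levels 16-235
    return "above white"       # headroom, levels 236-254


# Count the levels outside the nominal range (excluding reserved codes).
extra_levels = sum(
    1 for v in range(256)
    if classify_luma(v) in ("below black", "above white")
)
print(extra_levels)
```

Counting this way gives 15 levels below black plus 19 above white, which matches the 34 extra levels mentioned above.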

We believe that a digital representation of a 'natural' image requires a

signal range beyond the nominal 'black' and 'white' points to look

'natural'.

We like to use The Talented Mr. Ripley (Joe Kane pointed out this disc a couple of years back) to demonstrate why BTB is needed. If below black is clipped, then the shadow detail will appear blocky or pasty. This is similar to what happens when you clip white, but the pasty look is then located in the highlight areas.

Above is a single frame taken from Mr. Ripley, and below is a table of levels 1-15 and how many samples on screen were set to each value. This represents only a very small number of the samples in the frame, but we never said that a lot of information existed below black. Most information should reside from 16 - 235.

| Level | Count |
| --- | --- |
| 1 | 0 |
| 2 | 0 |
| 3 | 0 |
| 4 | 0 |
| 5 | 1 |
| 6 | 2 |
| 7 | 7 |
| 8 | 6 |
| 9 | 16 |
| 10 | 43 |
| 11 | 117 |
| 12 | 241 |
| 13 | 185 |
| 14 | 155 |
| 15 | 139 |
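To put the counts listed above in perspective, the short Python sketch below totals them and compares the result with the number of luma samples in a frame. It assumes a full 720 x 480 frame of samples; the actual frame analyzed may have had fewer active picture samples, so treat the fraction as approximate.

```python
# Below-black sample counts per level, copied from the table above.
below_black_counts = {
    1: 0, 2: 0, 3: 0, 4: 0, 5: 1, 6: 2, 7: 7, 8: 6,
    9: 16, 10: 43, 11: 117, 12: 241, 13: 185, 14: 155, 15: 139,
}

total_below_black = sum(below_black_counts.values())

# Assumption: one full frame of 720 samples by 480 lines.
frame_samples = 720 * 480
fraction_below_black = total_below_black / frame_samples

print(total_below_black)
print(f"{fraction_below_black:.4%}")
```

Under that assumption, the below-black samples amount to well under one percent of the frame, which is consistent with the point that only a small amount of information lives below level 16.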

A big thanks goes to Ron Economos for analyzing this disc and dumping the

bits used. |

|

| Test Name: |

YC Delay |

| What it Measures: |

Does the player have interchannel delay between the luma

and chroma channels that meets the EIA 770 spec for consumer-grade video. |

| Pass/Fail Criteria: |

To pass, a player has to have less than

or equal to 5 nanoseconds delay between Y-Pb and Y-Pr. |

| Disc(s)/Chapters used: |

Video Essentials; Bowtie (Title 18, Chapter 15) |

| Weight: |

10 |

| Notes: |

When a player doesn't get this right,

the video is continuously messed up. It's one of the core

specifications of quality video. It is important that Y, Pb, and Pr all arrive at the same time to create an
accurate image with clean, sharp edges. In most cases the component timing

is perfect up until the point it passes through the video DAC. Most errors

are introduced between the DAC and the output connectors.

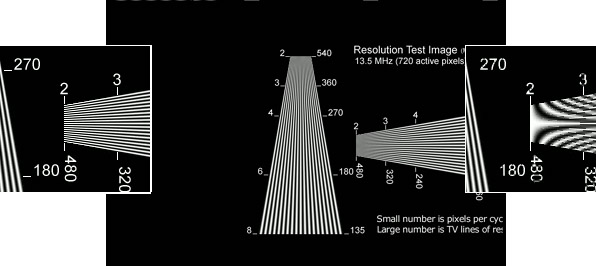

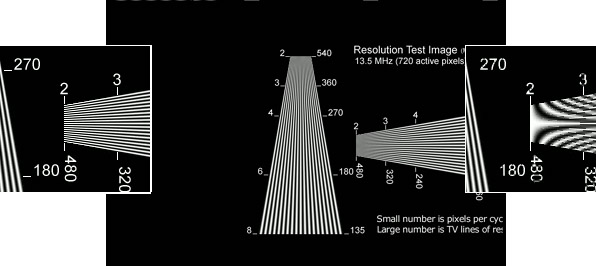

Below is the YC pattern found on Video Essentials.

If you look at the image on the far right, you will see that the left edge

of the red stripe is smeared, while the right edge is dark. Compare

that image with the one on the far left, where there is no smearing. The delay

can be in either direction, so the smear may be on the left or right,

and in some cases both sides.

|

|

|

| |

The specification EIA770 tells us what the voltage levels should be for SD

(Standard definition or 480i), ED (Enhanced definition or 480p), and HD

(High definition or 720p and 1080i). This specification also sets the

guideline for the amount of delay allowed between channels.

Below are photos taken from two different DVD players, as they appear on our test instrument. The graphic toggles between good YC timing and bad YC timing. The good timing is the one that looks like a bowtie.

EIA 770 states:

Less than 5ns is considered consumer grade.

Less than 2ns is considered studio grade.

We measure the timing between Y – Pb and Y – Pr. Most of the time when there

is a delay between Y and the two color channels, it happens in the same

direction. In some rare cases, the delays are in opposite directions. This

generates a delay between Pb and Pr.

For example, if 'Y – Pb' is +5ns and 'Y – Pr' is -5ns, then there is a 10ns delay

between Pb and Pr.

We continue to use the 5ns Bowtie test pattern on Video Essentials.

A single pixel is 74ns at 480i and 37ns at 480p.

Smearing begins to become visible along edge transitions at 10ns with

480i and 5ns with 480p.
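The timing figures above can be checked with a little arithmetic. The Python sketch below uses hypothetical function names and assumes the 480p luma rate is double the 13.5 MHz rate used for 480i; the 5 ns limit is the pass criterion stated for this test.

```python
LUMA_RATE_480I_HZ = 13.5e6
LUMA_RATE_480P_HZ = 2 * LUMA_RATE_480I_HZ  # assumption: rate doubles at 480p


def sample_period_ns(rate_hz: float) -> float:
    """Duration of one luma sample in nanoseconds."""
    return 1e9 / rate_hz


def pb_pr_delay_ns(y_pb_ns: float, y_pr_ns: float) -> float:
    """Delay between Pb and Pr implied by the two signed Y-relative delays."""
    return abs(y_pb_ns - y_pr_ns)


def passes_yc_delay(y_pb_ns: float, y_pr_ns: float, limit_ns: float = 5.0) -> bool:
    """Shootout criterion: each of Y-Pb and Y-Pr within 5 ns."""
    return abs(y_pb_ns) <= limit_ns and abs(y_pr_ns) <= limit_ns


print(round(sample_period_ns(LUMA_RATE_480I_HZ)))  # about 74 ns
print(round(sample_period_ns(LUMA_RATE_480P_HZ)))  # about 37 ns
print(pb_pr_delay_ns(+5, -5))                      # the example from the text
```

Note that a player can pass both Y-relative measurements and still have twice that delay between the two color channels, as in the +5 ns / -5 ns example.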

|

|

| Test Name: |

Image Cropping |

| What it Measures: |

Does the player crop 0 lines from the

top and bottom, and only a few from the left and right of the image,

and is the image roughly centered. |

| Pass/Fail Criteria: |

| Result | Criteria | Points |
| --- | --- | --- |
| Pass (Excellent) | < 5 L + R; < 3 Δ L - R; 0 T + B | 10 |
| Borderline (OK) | < 10 L + R; < 4 Δ L - R; < 3 T + B | 5 |
| Fail (Poor) | All else | 0 |

|
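One way to read the cropping criteria above is sketched below in Python. The function name is hypothetical, and the sketch assumes that "L + R" is the total number of samples cropped from the sides, "Δ L - R" is the absolute difference between the two sides, and "T + B" is the total number of lines cropped from the top and bottom.

```python
def cropping_points(left: int, right: int, top: int, bottom: int) -> int:
    """Score image cropping using the thresholds from the table above."""
    side_total = left + right          # L + R
    side_imbalance = abs(left - right)  # delta between L and R (centering)
    line_total = top + bottom          # T + B

    if side_total < 5 and side_imbalance < 3 and line_total == 0:
        return 10  # Pass (Excellent)
    if side_total < 10 and side_imbalance < 4 and line_total < 3:
        return 5   # Borderline (OK)
    return 0       # Fail (Poor)


print(cropping_points(2, 2, 0, 0))
print(cropping_points(4, 3, 1, 0))
print(cropping_points(6, 6, 0, 0))
```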

| Disc(s)/Chapters used: |

Avia, Pixel Cropping pattern

(Title 5, Chapter 89) |

| Weight: |

4 |

| Notes: |

If the samples / lines are cropped, that's image

information you can't see. There is no good reason to crop information

off the top and bottom, and if there are samples cropped off the sides,

they should be evenly taken from both sides.

We will add that many consumer displays do have overscan, which will

be greater than the amount of image cropping from a DVD player. This

is no excuse for cropping the image.

The sampling rate of luma (the black-and-white, or brightness, portion of the signal) is 13.5 MHz, which provides 720 active samples per line. The keyword is samples, not pixels.

When you see the active resolution written as 720 x 480, this implies 720

samples by 480 lines.

What is the difference between samples and pixels? It is a subtle, but

important, difference.

Pixels have hard edges and occupy a fixed, rectangular space. The waveform

generated to represent pixels has theoretically infinite bandwidth, and is

as square as possible, with very quick transitions at pixel edges.

Samples are instantaneous snapshots of an analog waveform. They are a way of

representing an analog signal digitally. The output of a sampling system is

strictly bandwidth limited, and has rounded curves with no edges at all.

We will refer to the missing information from the left and right as cropped samples, and that from the top and bottom as cropped lines (a line being a row of 720 samples). In the NTSC system, it takes 52.65 µs (microseconds) to draw a scan line. It just so happens that it takes approximately 0.07407 µs to draw a single sample. If you multiply 0.07407 µs by 720 samples, you end up with 53.3304 µs, which is 0.6804 µs more than the time it takes to draw a scan line. If you divide 0.6804 µs by 0.07407 µs, you get about 9.19 samples, so only 720 - 9.19, or approximately 711, samples fit within the scan line.
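The arithmetic in the paragraph above can be reproduced directly. This Python sketch simply restates the numbers from the text; the variable names are our own.

```python
line_time_us = 52.65      # time to draw a scan line, from the text
sample_time_us = 0.07407  # time to draw one sample (1 / 13.5 MHz)
samples_per_line = 720

# Time needed to draw all 720 samples.
full_line_us = samples_per_line * sample_time_us

# How much longer that is than the scan line itself.
excess_us = full_line_us - line_time_us

# Express the excess as a number of samples, then subtract from 720.
excess_samples = excess_us / sample_time_us
samples_that_fit = samples_per_line - excess_samples

print(f"{full_line_us:.4f} us for 720 samples")
print(f"{excess_us:.4f} us excess, about {excess_samples:.2f} samples")
print(f"approximately {round(samples_that_fit)} samples fit")
```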

|

|

|

| Test Name: |

Layer Change |

| What it Measures: |

Amount of time it takes to switch from

one layer to another. |

| Pass/Fail Criteria: |

Does the player move between disc

layers quickly.

| Result | Criteria | Points |
| --- | --- | --- |
| Pass (Excellent) | <= 1 second | 10 |
| Borderline (OK) | > 1 and < 2 seconds | 5 |
| Fail (Poor) | >= 2 seconds | 0 |

|
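The layer change thresholds above map onto points as in this small Python sketch. The function name is hypothetical, and the measured time is assumed to be in seconds.

```python
def layer_change_points(seconds: float) -> int:
    """Points for a measured layer change time, per the table above."""
    if seconds <= 1.0:
        return 10  # Pass (Excellent)
    if seconds < 2.0:
        return 5   # Borderline (OK): more than 1 but less than 2 seconds
    return 0       # Fail (Poor): 2 seconds or more


for t in (0.8, 1.0, 1.5, 2.0):
    print(t, layer_change_points(t))
```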

| Disc(s)/Chapters used: |

WHQL, high bit rate title roll. |

| Weight: |

4 |

| Notes: |

This is by design a worst-case layer

change, involving a long seek across the disc. The layer change also

occurs at the maximum bitrate.

However, we find that it correlates well with the speed of all kinds of

layer changes. To get to this test on WHQL:

- From the Main Menu, choose Decode
- From the Decode menu, choose High Bit Rate
- From the High Bit Rate menu, choose Highest
- From the Highest menu, choose Title Roll

The layer change will occur when you see "Warner

Brothers" reach the center of the screen. |

|

|

| Test Name: |

Responsiveness |

| What it Measures: |

Does the player respond quickly to

remote commands as well as menu navigation and chapter navigation. |

| Pass/Fail Criteria: |

We score on a scale from 1 to 5 with 5

being the fastest.

| Result | Criteria | Points |
| --- | --- | --- |
| Pass (Excellent) | >= 4.5 | 10 |
| Borderline (OK) | >= 3, < 4.5 | 5 |
| Fail (Poor) | < 3 | 0 |

|

| Disc(s)/Chapters used: |

All discs in shootout |

| Weight: |

6 |

| Notes: |

This is a very subjective test, but one we

think is useful. |

|

|

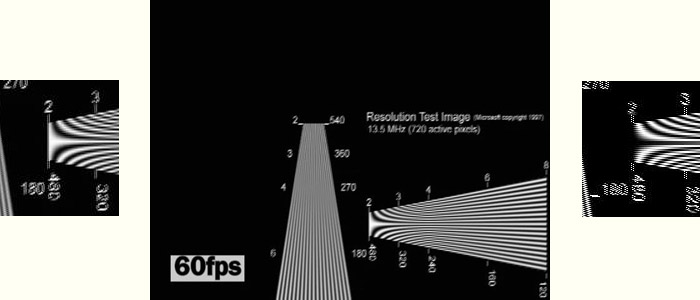

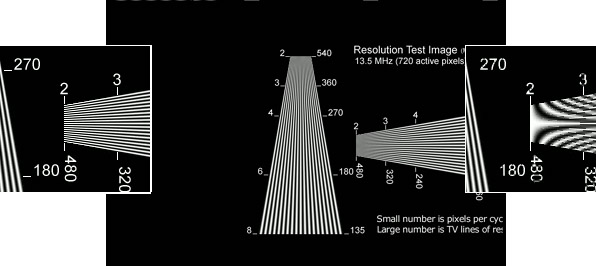

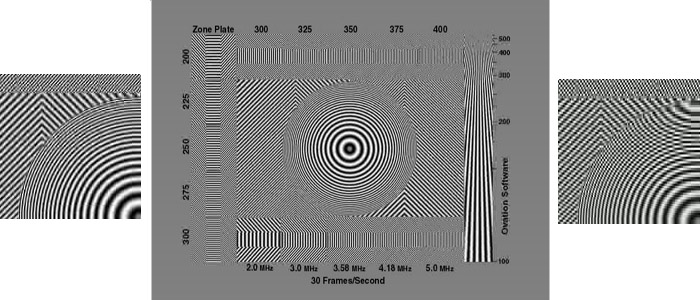

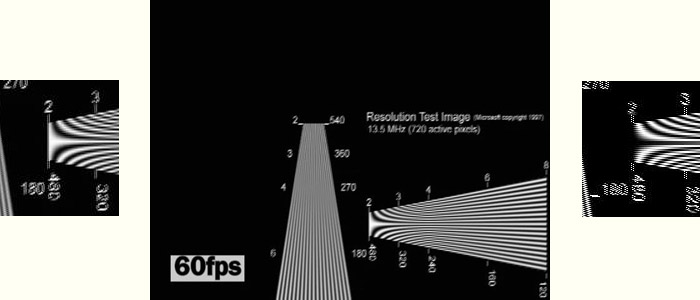

| Test Name: |

Video Frequency Response |

| What it Measures: |

Amplitude of a specific frequency.

(Resolution) |

| Pass/Fail Criteria: |

The graph is green if we think the response is OK. It will appear red if the response is down more than 3 dB or up more than 0.5 dB. |

| Disc(s)/Chapters used: |

Avia, Multiburst (Title 3, Chapter 24) |

| Weight: |

Not Weighted. |

| Notes: |

This is the last area in the Core section. We are not going to score the

player based on the frequency response. We are simply going to show you what

the frequency response of the player under test looks like. Some players

allow you to alter the frequency response with a built-in sharpness control

or through a preset memory. We did all of our tests with the default memory

and all sharpness controls at their default, mid-point, positions.

We started out by measuring Y, Pb, and Pr. We ran into a few players that

had trouble on our test disc that contained the Pb and Pr sweeps. Because of

this, we have stopped measuring Pb and Pr until a production disc comes out

with the patterns that all players can read.

If you would like to know more about this, please refer to the frequency

response section in Part 1 of the DVD Benchmark.

Below is a photo taken from one of the DVD players,

as it appears on our test instrument,

showing the multiburst pattern on Avia.

You will notice that we state the frequency response

out to 10 MHz. You might be wondering how we have a test pattern that goes

that high. It is simple, really. At 480p, all frequencies are doubled, so
the 5 MHz burst on Avia is really 10 MHz at 480p. We feel that this is
the proper way to report the frequency response.
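The frequency doubling and the green/red thresholds for this test can be sketched as follows. This is a minimal Python illustration with hypothetical function names; it assumes each burst amplitude is compared against a reference amplitude (for example, the lowest-frequency burst) and converted to decibels as a voltage ratio.

```python
import math


def frequency_at_480p_mhz(frequency_480i_mhz: float) -> float:
    """At 480p, every frequency on the disc is doubled."""
    return 2 * frequency_480i_mhz


def response_db(burst_amplitude: float, reference_amplitude: float) -> float:
    """Relative level of a burst in dB (voltage ratio, so 20 * log10)."""
    return 20 * math.log10(burst_amplitude / reference_amplitude)


def graph_color(db: float) -> str:
    """Red if down more than 3 dB or up more than 0.5 dB, else green."""
    return "green" if -3.0 <= db <= 0.5 else "red"


print(frequency_at_480p_mhz(5))                       # the 5 MHz burst on Avia
print(graph_color(response_db(0.8, 1.0)))             # modest roll-off
print(graph_color(response_db(0.5, 1.0)))             # half amplitude
```

A burst at half the reference amplitude is about 6 dB down, so it would be drawn in red under these thresholds.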

We often talk about ringing, but the multiburst (or

sweep) patterns are not really appropriate to use when evaluating this.

In the future, we will use a test pattern found on Sound & Vision Home

Theater Tune-Up that contains three different edge transitions. We

will break it down into Severe, Mild, and Easy tests. This is a bit

more involved and is why it is not currently included.

We also hope to include Phase response (Group Delay)

measurements in future shootouts, and we are just waiting for some long

overdue test discs, which will include the patterns necessary.

|

|

Secrets-Recommended 480i/p

We have created a “Secrets Recommended” award for all players that meet our

criteria. We have broken the award out into 480i and 480p. While a player

may meet our goals at 480p, it does not mean it will meet them at 480i and

vice-versa.

For example, we might award our recommendation for 480p to a player that has the Chroma Upsampling Error but masks it on the progressive output. This same player would not be recommended for 480i.

We only looked at the progressive outputs in shootout number 3 so we will

only hand out the award for 480p performance. It is possible that these

players may pass our tests for 480i, but we have not tested them.

| 480p criteria are as follows: |

|

|

| 1. Must pass all NTSC 3-2 film-mode tests. |

| 2. Must pass bad edit test. |

| 3. Must have per-pixel motion-adaptive video deinterlacing. |

| 4. Must get at least "borderline" for all CUE tests. (does not include ICP) |

| 5. Must pass or "borderline" all core video basics. |

| 6. Layer change < 2 seconds. |

| 7. Responsiveness >= 3. |

| 480i criteria are as follows: |

|

| |

| 1. Must pass all CUE tests. (does not include ICP) |

| 2. Must pass or "borderline" all core video basics. |

| 3. Layer change < 2 seconds. |

| 4. Responsiveness >= 3. |
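The two criteria lists above can be expressed as a pair of checks. The Python sketch below is only an illustration: the `PlayerResults` class and its field names are hypothetical, and each test result is assumed to be recorded as "pass", "borderline", or "fail".

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PlayerResults:
    """Hypothetical summary of one player's shootout results."""
    passes_all_32_film_mode: bool
    passes_bad_edit: bool
    per_pixel_motion_adaptive: bool
    cue_results: List[str]    # CUE tests only, excluding ICP
    core_results: List[str]   # core video basics
    layer_change_seconds: float
    responsiveness: float


def _shared_criteria(p: PlayerResults) -> bool:
    """Criteria common to both the 480p and 480i awards."""
    core_ok = all(r in ("pass", "borderline") for r in p.core_results)
    return core_ok and p.layer_change_seconds < 2 and p.responsiveness >= 3


def recommended_480p(p: PlayerResults) -> bool:
    cue_ok = all(r in ("pass", "borderline") for r in p.cue_results)
    return (p.passes_all_32_film_mode and p.passes_bad_edit
            and p.per_pixel_motion_adaptive and cue_ok
            and _shared_criteria(p))


def recommended_480i(p: PlayerResults) -> bool:
    return all(r == "pass" for r in p.cue_results) and _shared_criteria(p)


# A made-up player whose deinterlacer masks the chroma bug.
masked_cue_player = PlayerResults(
    passes_all_32_film_mode=True,
    passes_bad_edit=True,
    per_pixel_motion_adaptive=True,
    cue_results=["borderline", "borderline", "borderline"],
    core_results=["pass", "pass", "borderline"],
    layer_change_seconds=1.2,
    responsiveness=4.0,
)
print(recommended_480p(masked_cue_player), recommended_480i(masked_cue_player))
```

The made-up player mirrors the example given earlier in this section: masking the chroma bug is enough for the 480p award but not for 480i, where every CUE test must be an outright pass.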

Test Results

HERE is the link to the Progressive Scan Shootout results. Try out the database,

and let us know if you have any problems, and also, what improvements you

would like to see. Send your suggestions to

[email protected].

- Don Munsil and Stacey Spears -

Last Update: 1/27/03

"Abyss" Image Copyright 1989, Fox

"Apollo 13" Image Copyright 1995, Universal

"Avia" Image Copyright 1999, Ovation Software

"Galaxy Quest" Image Copyright 1999, Dreamworks

"Monsters, Inc." Image Copyright 2001, Pixar and Disney

"More Tales of the City" Image Copyright 1998, Showtime Networks Inc. and DVD International

"Natural Splendors" Image Copyright 2002, DVD International

"Super Speedway" Copyright 1997, IMAX

"Toy Story" Images Copyright 1995, Pixar and Disney

"WHQL DVD Test Annex 2.0" Images Copyright 1999, Microsoft Corporation

Note: We have heard of some corporations using our articles and graphics to

teach courses to their staff without authorization. Secrets articles and

graphics are original and copyrighted. If you wish to use them in your

business, you MUST obtain permission from us first, which involves a

license. Please contact the editor.


|